Foundation models raise ethical and practical questions that the UK’s AI principles are meant to address. The UK government has established a set of principles to guide the responsible development and deployment of AI, and these principles are particularly relevant when it comes to foundation models.

These models, capable of learning from vast amounts of data and performing a wide range of tasks, raise unique challenges that demand careful attention to ethical considerations.

This post will examine how the UK AI principles apply to foundation models, exploring the potential benefits and risks associated with their use. We’ll also delve into the existing regulatory framework, ongoing research initiatives, and the broader societal implications of these transformative technologies.

UK AI Principles

The UK government has established a set of AI principles to guide the responsible development and deployment of artificial intelligence (AI) systems. These principles are intended to ensure that AI is used in a way that benefits society, promotes fairness, and protects individuals.

They are particularly relevant to the development and use of foundation models, which are powerful AI systems capable of learning from vast amounts of data and performing a wide range of tasks.

Foundation Models and the UK AI Principles

Foundation models are a type of AI system that has gained significant attention due to their potential to revolutionize various industries. They are trained on massive datasets and can perform tasks across multiple domains, including natural language processing, image recognition, and code generation.

However, their development and deployment raise ethical concerns that need to be addressed. The UK AI principles provide a framework for navigating these challenges.

- Fairness: Foundation models should be developed and deployed in a way that is fair and unbiased. This means ensuring that they do not perpetuate or exacerbate existing societal inequalities. For instance, a foundation model used for recruitment should not discriminate against certain groups based on factors like gender or ethnicity.

- Transparency: The workings of foundation models should be transparent and understandable. This includes providing clear explanations of how they make decisions and what data they are trained on. Transparency helps build trust and accountability in AI systems.

- Accountability: There should be clear accountability for the development, deployment, and use of foundation models. This means identifying who is responsible for the decisions made by these systems and ensuring that they can be held accountable for any potential harm.

- Safety and Security: Foundation models should be developed and deployed in a way that prioritizes safety and security. This includes mitigating risks of bias, discrimination, and unintended consequences. It also involves ensuring that these models are protected from malicious attacks and misuse.
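To make the fairness principle more concrete, here is a minimal sketch of one way to check the recruitment example above: compare how often a model shortlists candidates from each group. The function names, the group labels, and the sample data are all invented for illustration, and the ratio is only a signal that something may need investigating, not a legal test.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the fraction of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where `selected`
    is True when the model shortlisted the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest

# Hypothetical shortlisting decisions; the data is invented for illustration.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0, as in this invented sample, suggests the groups are being treated differently and that the training data and model outputs deserve a closer look.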

Ethical Considerations

The UK AI principles highlight the importance of ethical considerations in the development and deployment of foundation models. These considerations include:

- Bias and Discrimination: Foundation models are trained on massive datasets, which may contain biases that can be reflected in their outputs. This can lead to discrimination against certain groups. For example, a foundation model used for loan applications might discriminate against individuals based on their race or gender if the training data reflects existing societal biases.

- Privacy and Data Protection: Foundation models often rely on large amounts of personal data. It is crucial to ensure that this data is collected, used, and stored in a way that respects individuals’ privacy and complies with data protection regulations.

- Job Displacement: The widespread adoption of foundation models could potentially lead to job displacement in certain sectors. It is important to consider the economic and social impacts of these technologies and develop strategies to mitigate potential negative consequences.

- Misuse and Malicious Use: Foundation models can be misused for malicious purposes, such as creating deepfakes or spreading misinformation. It is essential to develop safeguards to prevent such misuse and ensure that these technologies are used responsibly.

Foundation Models

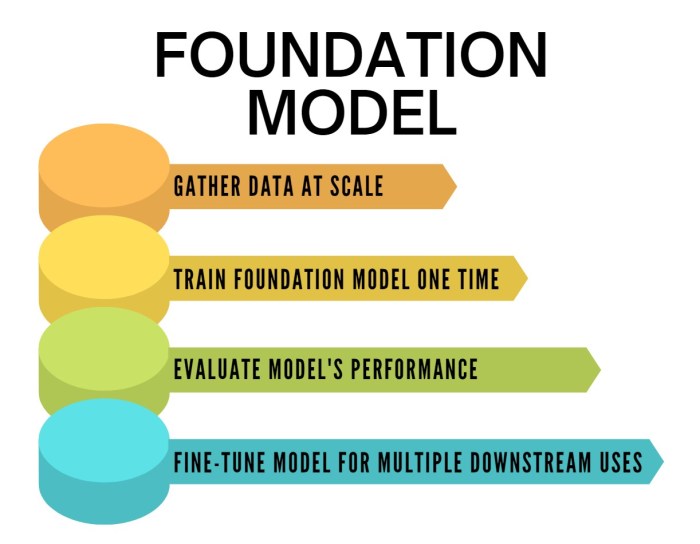

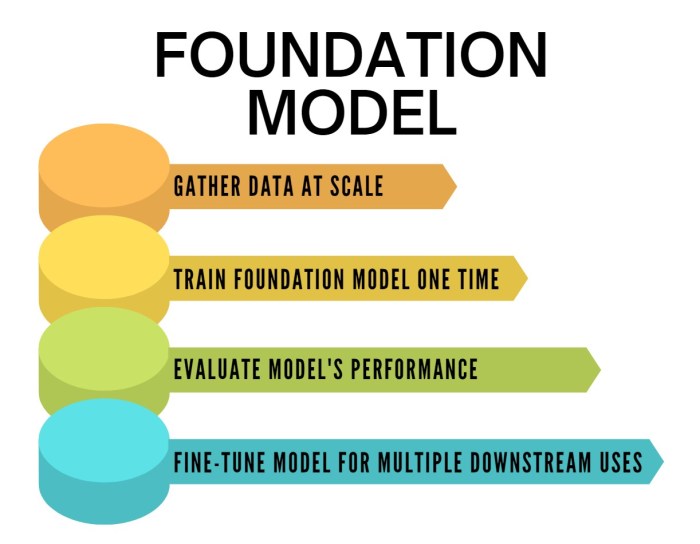

Foundation models are a new breed of AI systems that are transforming the landscape of artificial intelligence. They are large-scale, pre-trained models that can be adapted to perform a wide range of tasks, from language translation to image generation. They are trained on massive datasets and are capable of learning complex patterns and relationships within the data.

This allows them to perform tasks that were previously impossible for traditional AI systems.

Capabilities of Foundation Models

Foundation models possess a remarkable set of capabilities, exceeding the limitations of traditional AI systems. These capabilities stem from their ability to learn and adapt from vast datasets, enabling them to perform tasks with a level of sophistication and flexibility that was previously unattainable.

- Generalization: Foundation models can generalize to new tasks and domains beyond their initial training data. This allows them to be applied to a wide range of problems without requiring extensive retraining.

- Multi-Modality: They can process and understand different types of data, including text, images, audio, and video. This enables them to perform complex tasks that require integrating information from multiple sources.

- Zero-Shot Learning: Foundation models can perform tasks they have never been explicitly trained for, by leveraging their understanding of the underlying data patterns.

- Few-Shot Learning: They can learn new tasks with minimal training data, making them highly adaptable and efficient.
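For language models, one common way zero-shot and few-shot use shows up in practice is in how the prompt is written: either an instruction alone, or an instruction plus a handful of worked examples. The sketch below assumes that prompting style; `build_prompt` and the example sentences are hypothetical and not tied to any particular model or API.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a text prompt; with no examples it is zero-shot, otherwise few-shot."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output format.
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

instruction = "Classify the sentiment of the input as positive or negative."

# Zero-shot: the model sees only the instruction and the new input.
zero_shot = build_prompt(instruction, "The service was slow.")

# Few-shot: the same request, preceded by two invented labeled examples.
few_shot = build_prompt(
    instruction,
    "The service was slow.",
    examples=[("I loved the food.", "positive"), ("The room was dirty.", "negative")],
)
print(zero_shot)
print(few_shot)
```

Neither prompt changes the model’s parameters; the examples only give it more context about the task at the time it is asked.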

Limitations of Foundation Models

Despite their impressive capabilities, foundation models also have limitations that are important to consider. These limitations are inherent to their design and training process, and understanding them is crucial for responsible and ethical development and deployment.

- Bias: Foundation models can inherit biases present in the training data, leading to unfair or discriminatory outcomes. This is a critical concern, especially in applications that involve decision-making about individuals.

- Explainability: The complex inner workings of foundation models can make it difficult to understand why they make certain predictions. This lack of transparency can hinder trust and accountability.

- Safety and Security: Foundation models can be vulnerable to adversarial attacks, where malicious actors manipulate the model’s input to generate harmful outputs. This raises concerns about the potential for misuse and the need for robust security measures.

- Computational Cost: Training and deploying foundation models require significant computational resources, making them expensive and potentially inaccessible to smaller organizations or researchers.

Key Characteristics of Foundation Models

Foundation models are distinct from traditional AI systems in several key aspects. These characteristics highlight their unique capabilities and the challenges associated with their development and deployment.

- Scale: Foundation models are trained on massive datasets, often containing billions of data points. This scale allows them to learn complex patterns and relationships that are beyond the reach of traditional AI systems.

- Pre-training: They are pre-trained on a general-purpose dataset, allowing them to learn a broad range of knowledge and skills. This pre-training process lays the foundation for their ability to adapt to specific tasks.

- Fine-tuning: Foundation models can be fine-tuned for specific tasks by adapting their parameters to new datasets. This allows them to be customized for different applications without requiring extensive retraining from scratch.
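The split between pre-training and fine-tuning can be illustrated with a toy sketch. Here a fixed function stands in for the pre-trained model, and only a small classifier on top of it is trained on a new task. This is one simplified form of fine-tuning (training a new head while the base stays frozen); real systems often update some or all of the base model’s parameters too. Every name and data point below is invented for illustration.

```python
import math

def pretrained_features(x):
    """Stand-in for a frozen pre-trained model: fixed, never updated below."""
    return [x, x * x]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(data, epochs=500, lr=0.5):
    """Train only a small logistic-regression head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = pretrained_features(x)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = pred - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias

def predict(x, weights, bias):
    """Return True when the head assigns the positive class to x."""
    feats = pretrained_features(x)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias) >= 0.5

# Tiny invented task: the label is 1 when |x| is large and 0 when it is small.
task_data = [(-2.0, 1), (-1.5, 1), (-0.5, 0), (0.0, 0), (0.5, 0), (1.5, 1), (2.0, 1)]
weights, bias = fine_tune_head(task_data)
print([predict(x, weights, bias) for x, _ in task_data])
```

The point of the sketch is the division of labor: the expensive, general-purpose part is reused as-is, and only a few task-specific parameters are learned from a small dataset.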

Impact of Foundation Models in the UK

Foundation models, with their remarkable capabilities in understanding and generating human-like text, have the potential to revolutionize various sectors in the UK. These models, trained on vast datasets, are capable of performing complex tasks like translation, summarization, and code generation, opening up exciting possibilities for businesses and individuals alike.

Benefits of Foundation Models for UK Sectors

Foundation models can bring significant benefits to diverse sectors in the UK. Here are some key areas where these models can make a positive impact:

- Healthcare: Foundation models can be used to analyze medical records, assist in diagnosis, and develop personalized treatment plans. For example, the NHS is exploring the use of these models to improve patient care by automating administrative tasks and providing more accurate diagnoses.

- Finance: In the financial sector, foundation models can be employed for fraud detection, risk assessment, and personalized financial advice. These models can analyze vast amounts of data to identify patterns and anomalies, improving efficiency and accuracy in financial operations.

- Education: Foundation models can enhance the learning experience by providing personalized tutoring, generating educational content, and translating materials into different languages. These models can also be used to assess student progress and provide customized feedback.

- Manufacturing: In manufacturing, foundation models can optimize production processes, predict equipment failures, and improve quality control. By analyzing data from sensors and machines, these models can identify areas for improvement and increase overall efficiency.

- Government: Foundation models can be used by the government to automate tasks, improve communication with citizens, and analyze data for policy development. For instance, the UK government is exploring the use of these models to improve the efficiency of public services and enhance citizen engagement.

Challenges and Risks of Deploying Foundation Models in the UK

While the potential benefits of foundation models are significant, it is essential to acknowledge the challenges and risks associated with their deployment.

- Bias and Fairness: Foundation models are trained on massive datasets, which may contain biases that can lead to unfair or discriminatory outcomes. It is crucial to ensure that these models are trained on diverse and representative data to mitigate bias and promote fairness.

- Privacy and Security: The use of foundation models raises concerns about privacy and security, as these models may process sensitive data. It is essential to implement robust data protection measures and ensure compliance with relevant regulations to safeguard user privacy.

- Transparency and Explainability: The decision-making process of foundation models can be complex and opaque, making it challenging to understand how these models arrive at their conclusions. It is crucial to develop methods for explaining the reasoning behind model outputs to ensure transparency and accountability.

- Job Displacement: The automation capabilities of foundation models may lead to job displacement in certain sectors. It is important to address this concern by investing in training and upskilling programs to prepare the workforce for the changing job landscape.

- Misuse and Malicious Intent: Foundation models can be misused for malicious purposes, such as generating fake news or creating deepfakes. It is essential to develop safeguards and ethical guidelines to prevent the misuse of these models.

Examples of Foundation Model Applications in UK Industries

Foundation models are already being used in various industries within the UK, demonstrating their real-world impact.

- Healthcare: The NHS is collaborating with Google Health to use AI, including foundation models, to analyze medical images and predict patient outcomes, improving diagnosis and treatment.

- Finance: HSBC is using foundation models to detect fraud and improve risk assessment, leveraging the models’ ability to analyze large datasets and identify patterns.

- Education: The University of Oxford is using foundation models to personalize learning experiences, providing tailored content and feedback to students, and improving their engagement and understanding.

- Manufacturing: Rolls-Royce is using foundation models to optimize engine design and predict maintenance needs, improving efficiency and reducing downtime in their manufacturing processes.

- Government: The UK government is using foundation models to improve public services, such as automating tasks in the Department for Work and Pensions and analyzing data to develop better policies.

Governance and Regulation of Foundation Models

Foundation models, with their vast capabilities and potential impact, demand careful consideration of governance and regulation. The UK is at the forefront of developing ethical and responsible AI principles, and these principles are crucial for ensuring the safe and beneficial deployment of foundation models.

Existing Regulatory Framework and its Applicability

The UK regulates AI through a range of existing laws and guidelines rather than a single AI-specific statute. The key legislation relevant to foundation models includes the Data Protection Act 2018 (DPA), the Equality Act 2010, and the Consumer Rights Act 2015. These laws address data privacy, non-discrimination, and consumer protection, all of which are essential considerations for foundation model development and deployment.

However, the existing framework may require adaptation or expansion to address the unique characteristics of foundation models. For example, the DPA’s focus on personal data might not fully capture the potential risks associated with the use of sensitive information in training and deploying these models.

Similarly, the Equality Act’s emphasis on discrimination based on protected characteristics may not adequately address the potential for bias in foundation model outputs.

Areas for Adaptation or Expansion of Regulations

The following areas warrant specific attention in adapting or expanding the regulatory framework for foundation models:

- Data Governance: Clarifying the legal framework for data used in training foundation models, particularly regarding sensitive information, data ownership, and data access. This includes addressing potential conflicts with the DPA’s principles of data minimization and purpose limitation.

- Transparency and Explainability: Establishing clear requirements for transparency and explainability in the development and deployment of foundation models. This includes ensuring users understand the model’s limitations, biases, and decision-making processes.

- Algorithmic Bias and Fairness: Developing mechanisms to mitigate bias and promote fairness in foundation model outputs. This involves addressing potential biases introduced during data collection, model training, and deployment, and ensuring the models do not perpetuate existing social inequalities.

- Accountability and Liability: Defining clear lines of accountability and liability for the development, deployment, and use of foundation models. This includes addressing potential harms caused by model outputs and establishing mechanisms for redress.

- Safety and Security: Ensuring the safety and security of foundation models, particularly in terms of preventing malicious use and ensuring robustness against adversarial attacks. This includes establishing standards for model testing, validation, and monitoring.

Governance Mechanisms for Responsible Development and Deployment

To promote the responsible development and deployment of foundation models, the UK could consider implementing various governance mechanisms:

- Independent Oversight Body: Establishing an independent oversight body responsible for monitoring the development and deployment of foundation models, ensuring compliance with ethical and legal standards. This body could provide guidance, conduct audits, and investigate potential violations.

- Code of Conduct: Developing a voluntary code of conduct for organizations developing and deploying foundation models. This code could outline ethical principles, best practices, and accountability measures, promoting responsible AI development.

- Public Consultation and Engagement: Fostering public dialogue and engagement on the implications of foundation models. This includes providing opportunities for public input on regulatory frameworks, ethical guidelines, and potential risks and benefits.

- Research and Innovation: Supporting research and innovation in areas related to responsible AI development, including bias detection and mitigation, explainability, and safety and security.

- International Collaboration: Collaborating with other countries and international organizations to develop global standards and best practices for foundation model governance.

Research and Development in UK AI

The UK has established itself as a global leader in artificial intelligence (AI) research and development. The country boasts a thriving ecosystem of universities, research institutions, and technology companies actively engaged in pushing the boundaries of AI innovation. The UK’s commitment to AI research is evident in its strategic initiatives and substantial investments, aimed at nurturing talent, fostering collaboration, and driving technological advancements.

Research Initiatives in Foundation Models

The UK is home to several prominent research initiatives focused on advancing foundation models. These initiatives bring together researchers, developers, and industry partners to explore the potential of foundation models across various domains.

- The Alan Turing Institute, the UK’s national institute for data science and artificial intelligence, is actively involved in research related to foundation models. The institute is conducting research on the development of new foundation models, exploring their applications in various fields, and investigating the ethical and societal implications of these powerful AI systems.

- The University of Oxford is a leading center for AI research, with a strong focus on developing and deploying foundation models. The university’s researchers are working on improving the efficiency, robustness, and explainability of foundation models, and exploring their potential for addressing real-world challenges in areas such as healthcare, finance, and climate change.

- DeepMind, a subsidiary of Alphabet Inc., is a renowned AI research company based in London. DeepMind has made significant contributions to the development of foundation models, particularly in the areas of natural language processing and computer vision. The company’s research has led to breakthroughs in language translation, image recognition, and game playing, demonstrating the transformative power of foundation models.

Key Research Areas in Foundation Models

The UK’s AI research community is actively exploring several key research areas related to foundation models. These areas hold significant potential for advancing the capabilities of foundation models and driving their adoption in various sectors.

- Explainability and Interpretability: Researchers are working on developing methods to understand and interpret the decision-making processes of foundation models. This is crucial for building trust and ensuring responsible use of these complex systems.

- Data Efficiency and Transfer Learning: The development of foundation models often requires vast amounts of training data. Researchers are exploring techniques to improve the efficiency of training foundation models, enabling them to learn effectively from smaller datasets and transfer knowledge across different tasks.

- Safety and Security: As foundation models become more powerful, it is essential to ensure their safety and security. Researchers are investigating methods to mitigate risks associated with malicious use, bias, and unintended consequences of these systems.

- Ethical Considerations: The development and deployment of foundation models raise significant ethical concerns. Researchers are exploring frameworks for responsible AI development and addressing issues related to bias, fairness, privacy, and accountability.

Role of Academia, Industry, and Government

The advancement of AI research and development in the UK is a collaborative effort involving academia, industry, and government. Each stakeholder plays a crucial role in fostering innovation and ensuring the responsible development and deployment of AI technologies.

- Academia: Universities and research institutions provide a fertile ground for fundamental AI research. They are responsible for training the next generation of AI researchers, developing cutting-edge algorithms, and exploring the theoretical foundations of AI.

- Industry: Technology companies are driving the practical applications of AI, developing and deploying foundation models for real-world use cases. They are also investing heavily in AI research and development, collaborating with academia to translate research into commercial products and services.

- Government: The UK government plays a vital role in supporting AI research and development through funding, policy initiatives, and regulatory frameworks. The government is committed to fostering a thriving AI ecosystem, attracting talent, and ensuring the ethical and responsible development of AI technologies.

Societal Implications of Foundation Models

Foundation models, with their remarkable capabilities, hold the potential to reshape various aspects of UK society. Their impact extends beyond technological advancements, reaching into the realms of employment, privacy, and social equity. Understanding these implications is crucial for harnessing the benefits of foundation models while mitigating potential risks.

Impact on Employment

Foundation models are expected to automate tasks currently performed by humans, leading to both opportunities and challenges in the UK labor market.

- Job Displacement: Some roles, particularly those involving repetitive tasks, may be automated by foundation models, potentially leading to job displacement. This requires proactive measures to reskill and upskill the workforce to adapt to evolving job demands.

- Job Creation: Foundation models will also create new job opportunities in areas such as AI development, data science, and AI ethics. These roles will require specialized skills and expertise in AI technologies.

- Augmentation: Foundation models can augment human capabilities, enabling workers to perform tasks more efficiently and effectively. This can lead to increased productivity and job satisfaction.

Privacy Concerns

The use of foundation models raises significant privacy concerns, as they often rely on vast datasets that may include personal information.

- Data Security: Ensuring the security of personal data used to train foundation models is paramount. Robust security measures and data anonymization techniques are crucial to protect individuals’ privacy.

- Transparency: Transparency in data collection and usage practices is essential for building trust. Users should be informed about how their data is being used and have control over their data.

- Regulation: Clear regulations and guidelines are needed to address privacy concerns associated with foundation models. These regulations should strike a balance between innovation and individual rights.
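As a small illustration of the kind of technique the data security point refers to, the sketch below replaces direct identifiers with keyed hashes before a record is used for training. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key can re-link the values, so it reduces risk without removing it. The field names, the key, and the record are hypothetical.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

def scrub_record(record, secret_key, id_fields=("name", "email")):
    """Return a copy of `record` with the listed string fields pseudonymized."""
    return {
        field: pseudonymize(value, secret_key) if field in id_fields else value
        for field, value in record.items()
    }

# Hypothetical record and key; in practice the key would be stored securely
# and kept separate from the dataset.
key = b"example-key-do-not-reuse"
record = {"name": "Alex Example", "email": "alex@example.com", "postcode_area": "M1"}
scrubbed = scrub_record(record, key)
print(scrubbed)
```

Because the same input and key always produce the same output, records belonging to one person can still be linked for analysis without exposing the underlying name or email address.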

Social Equity

Foundation models have the potential to exacerbate existing societal inequalities if not developed and deployed responsibly.

- Bias: Foundation models trained on biased data can perpetuate and amplify existing biases, leading to unfair outcomes for certain groups. It is essential to develop methods for detecting and mitigating bias in training data.

- Access: Ensuring equitable access to the benefits of foundation models is crucial. This includes providing resources and training to underserved communities to help them participate in the AI economy.

- Fairness: Foundation models should be designed and deployed in a way that promotes fairness and equity. This requires careful consideration of the potential impacts on different groups and the development of mechanisms to ensure fair outcomes.

Ethical Considerations

Ethical considerations are paramount in the development and deployment of foundation models.

- Transparency: Transparency is crucial for building trust in AI systems. Users should understand how foundation models work, their limitations, and the data used to train them.

- Accountability: Clear lines of accountability are needed for decisions made by foundation models. This includes identifying the responsible parties for potential harms caused by AI systems.

- Human Oversight: Human oversight is essential to ensure that foundation models are used ethically and responsibly. This includes establishing clear guidelines for human intervention in AI decision-making.

Strategies for Mitigating Risks and Maximizing Benefits

Several strategies can be employed to mitigate the potential risks and maximize the benefits of foundation models for UK society.

- Public Engagement: Engaging the public in discussions about the societal implications of foundation models is crucial for building trust and ensuring responsible development and deployment.

- Collaboration: Collaboration between researchers, industry leaders, policymakers, and civil society is essential for addressing the complex challenges posed by foundation models.

- Regulation: Clear and comprehensive regulations are needed to govern the development, deployment, and use of foundation models. These regulations should prioritize ethical considerations, fairness, and transparency.

- Education and Training: Investing in education and training programs to equip the workforce with the skills needed to work with and develop foundation models is essential.

International Collaboration on Foundation Models

Foundation models are a powerful new technology with the potential to revolutionize many industries. However, they also present significant challenges, such as bias, fairness, and safety. To address these challenges and ensure the responsible development and deployment of foundation models, international collaboration is crucial.

International collaboration can help to share best practices, develop common standards, and coordinate research efforts. It can also help to build trust and understanding between different countries and stakeholders.

The UK’s Role in Global AI Governance

The UK has been a leading voice in the global debate on AI governance. It has played a key role in shaping the OECD AI Principles, which provide a framework for responsible AI development and use. The UK has also established the AI Council, a group of experts that advises the government on AI policy.

The UK government has also launched several initiatives to promote responsible AI, including the AI Sector Deal, which aims to make the UK a global leader in AI research and development. The UK is also a member of the Global Partnership on Artificial Intelligence (GPAI), an international initiative that aims to promote responsible and human-centered AI.

Potential Areas for International Collaboration

There are many potential areas for international collaboration in the development and deployment of foundation models. These include:

- Developing common standards for data governance and privacy. This would help to ensure that foundation models are trained on data that is collected and used responsibly. It would also help to protect the privacy of individuals whose data is used to train these models.

- Sharing best practices for model evaluation and testing. This would help to ensure that foundation models are reliable and accurate. It would also help to identify and mitigate potential biases in these models.

- Coordinating research efforts. This would help to accelerate progress in the development of foundation models. It would also help to ensure that research is conducted in a responsible and ethical manner.

- Developing mechanisms for international cooperation on AI safety. This would help to address the potential risks associated with the development and deployment of powerful AI systems.

International collaboration is essential to address the challenges and opportunities presented by foundation models. By working together, countries can ensure that these powerful technologies are developed and deployed in a way that benefits all of humanity.