The Global AI Safety Commitment echoes the EU’s risk-based approach, marking a significant shift in how artificial intelligence is developed and deployed. This commitment, alongside the EU’s proposed AI Act, signifies a growing global consensus on the need for responsible AI governance.

The commitment outlines a set of principles that aim to ensure AI is developed and used in a way that benefits humanity, while the EU’s risk-based approach categorizes AI systems based on their potential harm, implementing stricter regulations for high-risk applications.

This convergence of thought reflects a shared understanding that AI’s potential is immense, but so are its risks, necessitating a collaborative and proactive approach to ensure its responsible development.

The Global AI Safety Commitment and the EU’s AI Act represent two key pieces of a larger puzzle aimed at shaping the future of AI. Both initiatives highlight the importance of ethical considerations, transparency, and accountability in AI development. The EU’s Act, with its focus on risk mitigation, complements the commitment’s overarching principles, creating a comprehensive framework for responsible AI.

By aligning their efforts, these initiatives aim to foster a global landscape where AI innovation thrives within a framework of ethical and safety standards.

The EU’s Risk-Based Approach to AI

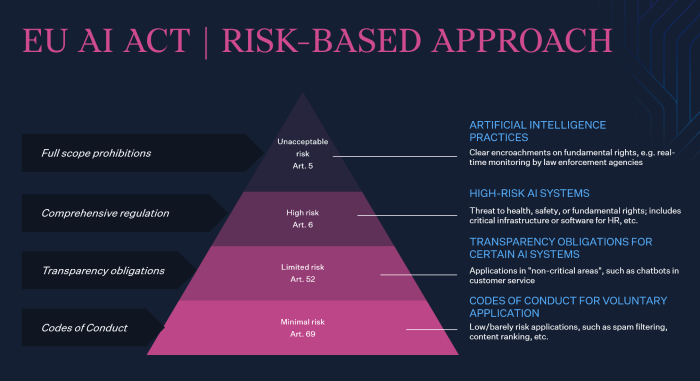

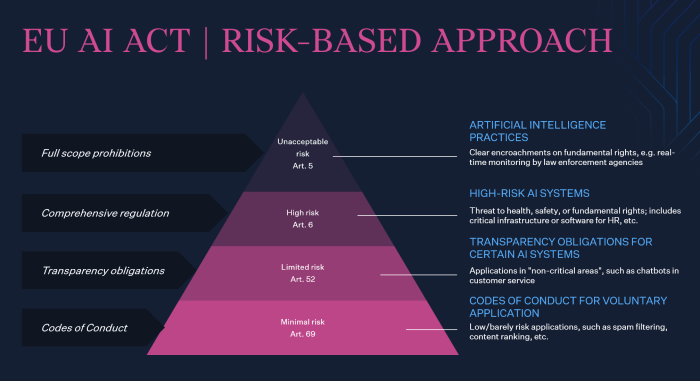

The European Union (EU) is at the forefront of regulating artificial intelligence (AI) with its proposed AI Act, which adopts a risk-based approach to address the potential harms and promote responsible AI development and deployment. This approach categorizes AI systems based on their level of risk and prescribes specific requirements for each category.

Risk Categories Defined by the EU AI Act

The EU AI Act categorizes AI systems into four risk categories: unacceptable risk, high risk, limited risk, and minimal risk. This categorization is crucial for determining the level of regulatory scrutiny and control applied to each system.

- Unacceptable Risk AI Systems: These systems are considered a clear threat to safety, fundamental rights, and public order. They are prohibited under the Act. Examples include AI systems that manipulate human behavior to exploit vulnerabilities, social scoring systems used for social control, and AI-powered facial recognition systems used for real-time identification in public spaces without sufficient safeguards.

- High-Risk AI Systems: These systems pose a significant risk to safety, health, fundamental rights, or the environment. The Act imposes strict requirements on these systems, including rigorous risk assessments, data governance, transparency, human oversight, and conformity assessment. Examples include AI systems used in critical infrastructure, healthcare, education, law enforcement, and recruitment.

- Limited Risk AI Systems: These systems pose a limited risk to individuals or society. The Act imposes transparency and information obligations on these systems. Examples include AI-powered chatbots used for customer service or AI-driven recommendation systems used in e-commerce.

- Minimal Risk AI Systems: These systems pose minimal risk to individuals or society and are subject to minimal regulatory oversight. Examples include AI systems used for entertainment or games.
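To make the four-tier structure concrete, the categories above can be sketched as a small lookup table. This is only an illustration: the use-case keys (such as `social_scoring` or `video_game_ai`) and the `describe` helper are hypothetical names chosen for this sketch, drawn from the examples in the list above, and they are not legal definitions or an official classification tool.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described in the text above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative mapping only: these keys are hypothetical labels based on the
# examples listed above. Real classification depends on the legal text.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "behavioral_manipulation": RiskTier.UNACCEPTABLE,
    "recruitment_screening": RiskTier.HIGH,
    "critical_infrastructure": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "video_game_ai": RiskTier.MINIMAL,
}

# Summary of the regulatory treatment the article attributes to each tier.
TREATMENT = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "strict requirements and conformity assessment",
    RiskTier.LIMITED: "transparency and information obligations",
    RiskTier.MINIMAL: "minimal regulatory oversight",
}


def describe(use_case: str) -> str:
    """Return a one-line summary for a use case in the example table."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        return f"{use_case}: not in this illustrative table"
    return f"{use_case}: {tier.value} risk -> {TREATMENT[tier]}"


if __name__ == "__main__":
    for name in EXAMPLE_USE_CASES:
        print(describe(name))
```

The point of the sketch is that the tier, not the individual application, determines the regulatory treatment: once a system is assigned to a category, its obligations follow from that category.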

Requirements for High-Risk AI Systems

The EU AI Act imposes specific requirements for high-risk AI systems to mitigate potential risks and ensure responsible development and deployment.

- Risk Assessment: Developers of high-risk AI systems must conduct comprehensive risk assessments to identify and analyze potential risks. This assessment should cover the entire lifecycle of the system, from design and development to deployment and monitoring.

- Data Governance: The Act emphasizes the importance of data quality, integrity, and security. Developers must ensure that the data used to train and operate high-risk AI systems is accurate, relevant, and free from bias. They must also implement robust data security measures to protect sensitive information.

- Transparency and Explainability: High-risk AI systems must be designed and developed to be transparent and explainable. Users should be informed about the system’s purpose, how it works, and its limitations. The Act encourages the use of techniques that allow for the explanation of AI decisions, enabling users to understand the rationale behind the system’s output.

- Human Oversight: The Act emphasizes the importance of human oversight in AI systems. Developers must ensure that humans are involved in critical decision-making processes and can intervene when necessary. This ensures that AI systems operate within ethical and legal boundaries and that human values are preserved.


- Conformity Assessment: Before deploying high-risk AI systems, developers must complete a conformity assessment to demonstrate that their systems meet the requirements of the Act. Depending on the type of system, this process may involve independent third-party evaluation and certification to ensure the system’s safety, reliability, and compliance with ethical and legal standards.
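As a rough illustration of how a team might track the five requirement areas listed above, the following sketch models them as a simple checklist. The `HighRiskChecklist` class, its method names, and the example system name are hypothetical and introduced only for this example; this is not an official compliance tool, and completing such a checklist does not by itself establish conformity with the Act.

```python
from dataclasses import dataclass, field

# The five requirement areas listed above, used as checklist keys.
REQUIREMENTS = (
    "risk_assessment",
    "data_governance",
    "transparency_and_explainability",
    "human_oversight",
    "conformity_assessment",
)


@dataclass
class HighRiskChecklist:
    """Hypothetical internal tracker for one high-risk AI system."""
    system_name: str
    completed: set = field(default_factory=set)

    def mark_done(self, requirement: str) -> None:
        """Record a requirement area as addressed; reject unknown names."""
        if requirement not in REQUIREMENTS:
            raise ValueError(f"unknown requirement: {requirement}")
        self.completed.add(requirement)

    def outstanding(self) -> list:
        """Return open requirement areas in the order listed above."""
        return [r for r in REQUIREMENTS if r not in self.completed]

    def ready_for_deployment(self) -> bool:
        """True only when every requirement area has been marked done."""
        return not self.outstanding()


if __name__ == "__main__":
    checklist = HighRiskChecklist("example-recruitment-screener")
    checklist.mark_done("risk_assessment")
    checklist.mark_done("data_governance")
    print("Outstanding:", checklist.outstanding())
    print("Ready:", checklist.ready_for_deployment())
```

Treating deployment readiness as "nothing outstanding" mirrors the text: the requirements apply together, so a system that satisfies four of the five areas is still not ready.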

Comparison with Other Regulatory Frameworks for AI

The EU AI Act’s risk-based approach distinguishes it from other regulatory frameworks for AI. While some countries have adopted more general AI regulations, the EU’s approach is more specific and targeted, focusing on high-risk AI systems and addressing potential harms directly.

- United States: The US has a more fragmented approach to AI regulation, with different agencies focusing on specific aspects of AI, such as privacy, cybersecurity, and antitrust. While there is no comprehensive federal AI legislation, the US government is exploring various policy options.

- China: China has adopted a more centralized approach to AI regulation, with the government issuing guidelines and standards for AI development and deployment. The focus is on promoting AI innovation while addressing ethical and societal concerns.

- Canada: Canada has adopted a principles-based approach to AI regulation, focusing on ethical guidelines and best practices. The government is also exploring more specific regulatory measures for high-risk AI systems.

Alignment of the Global AI Safety Commitment with the EU’s Risk-Based Approach

The Global AI Safety Commitment and the EU’s risk-based approach to AI regulation, while stemming from different origins, exhibit substantial alignment in their overarching goals and methodologies. This convergence presents opportunities for synergy and cooperation, while also highlighting potential challenges and areas of divergence.

Areas of Alignment

The Global AI Safety Commitment and the EU’s risk-based approach share a common objective: to ensure that AI development and deployment are conducted in a safe, ethical, and responsible manner. This shared goal is reflected in the specific areas of alignment:

- Risk Assessment and Mitigation: Both initiatives emphasize the importance of identifying and mitigating potential risks associated with AI systems. The EU’s risk-based approach categorizes AI systems based on their potential risk levels, while the Global AI Safety Commitment calls for robust safety research and development to address potential harms.

- Transparency and Explainability: Both initiatives recognize the need for transparency and explainability in AI systems. The EU’s regulation requires developers to provide information about the functioning of high-risk AI systems, while the Global AI Safety Commitment advocates for research and development of explainable AI systems.

- Human Oversight and Control: Both frameworks prioritize human oversight and control over AI systems. The EU’s regulation mandates human oversight for high-risk AI systems, while the Global AI Safety Commitment emphasizes the importance of human-centered design and development of AI systems.

Potential Synergies

The alignment between the two initiatives presents opportunities for synergistic collaboration:

- Shared Research and Development: Both initiatives can benefit from joint research and development efforts, particularly in areas like safety testing, risk assessment methodologies, and explainable AI.

- Best Practice Sharing: The EU’s experience in implementing its risk-based approach can inform the development of best practices for the Global AI Safety Commitment. Conversely, the Global AI Safety Commitment’s focus on safety research can contribute valuable insights to the EU’s regulatory framework.

- International Cooperation: Collaboration between the EU and proponents of the Global AI Safety Commitment can foster international cooperation and promote the adoption of common standards for responsible AI development and deployment.

Challenges in Aligning the Commitment with the EU’s Regulatory Framework

Despite the areas of alignment, challenges exist in aligning the Global AI Safety Commitment with the EU’s regulatory framework:

- Scope and Applicability: The Global AI Safety Commitment is a voluntary commitment, while the EU’s regulation is legally binding. This difference in scope and applicability could lead to inconsistencies in the implementation of safety standards.

- Enforcement Mechanisms: The Global AI Safety Commitment lacks specific enforcement mechanisms, while the EU’s regulation includes provisions for fines and other sanctions. This difference in enforcement mechanisms could lead to variations in the effectiveness of safety measures.

- Flexibility and Adaptability: The EU’s regulatory framework is designed to be flexible and adaptable to evolving technologies. The Global AI Safety Commitment, as a statement of principles, may require updates to keep pace with the rapid advancements in AI.

Potential Areas of Conflict or Divergence

While the two initiatives share common goals, potential areas of conflict or divergence exist:

- Definition of “High-Risk” AI: The EU’s regulation defines “high-risk” AI systems based on specific criteria, while the Global AI Safety Commitment adopts a broader approach to safety considerations. This difference in scope could lead to disagreements about which AI systems require the most stringent safety measures.

- Focus on Safety vs. Innovation: The Global AI Safety Commitment emphasizes safety research and development, while the EU’s regulation seeks to balance safety with innovation. This difference in focus could lead to conflicting priorities in the development and deployment of AI systems.

- Data Governance: The EU’s General Data Protection Regulation (GDPR) imposes stringent data protection requirements, while the Global AI Safety Commitment does not explicitly address data governance. This difference in approach could lead to challenges in reconciling data protection requirements with the development and deployment of AI systems.

Implications for AI Development and Deployment

The Global AI Safety Commitment and the EU’s AI Act represent significant efforts to shape the future of artificial intelligence. These initiatives aim to ensure responsible development and deployment of AI, addressing concerns related to safety, ethics, and societal impact.

The implications of these frameworks extend to various aspects of AI, influencing research, development, and deployment practices.

Impact on AI Research and Development

The commitment and the act encourage research and development that prioritize safety, robustness, and ethical considerations. They emphasize the need for AI systems to be transparent, accountable, and aligned with human values. This shift in focus may lead to:

- Increased funding and resources for research in AI safety, robustness, and explainability.

- Development of new methodologies and tools for assessing and mitigating AI risks.

- Greater collaboration between researchers, policymakers, and industry to address AI safety challenges.

Influence on Deployment of AI Systems

The commitment and the act introduce frameworks, voluntary in the first case and legally binding in the second, that aim to ensure the responsible deployment of AI systems. The act defines risk categories for AI applications and establishes requirements for risk assessment, mitigation, and oversight. This can influence:

- The adoption of AI systems in various sectors, as companies navigate compliance requirements.

- The development of robust governance structures for AI systems, including mechanisms for monitoring and auditing.

- The establishment of ethical guidelines and best practices for AI deployment.

Examples of Impact on Specific AI Applications

The commitment and the act can impact specific AI applications in various ways. For example:

- Autonomous vehicles: The commitment and the act may require rigorous testing and validation of self-driving systems, ensuring safety and accountability in real-world scenarios.

- Healthcare AI: The deployment of AI in healthcare might be subject to stricter regulations, ensuring data privacy, algorithmic fairness, and patient safety.

- Facial recognition: The act may restrict the use of facial recognition technology in certain contexts, particularly those that raise privacy concerns.

Economic and Social Implications

The commitment and the act can have significant economic and social implications. They may:

- Promote innovation: By fostering a responsible AI ecosystem, these initiatives can encourage investment and innovation in areas that prioritize safety and ethics.

- Create new jobs: The development and deployment of safe and ethical AI systems may create new job opportunities in areas like AI safety engineering and ethical AI auditing.

- Address societal concerns: By addressing concerns about bias, discrimination, and job displacement, these initiatives can promote a more equitable and inclusive use of AI.

Challenges and Opportunities

The Global AI Safety Commitment and the EU’s AI Act represent significant steps towards responsible AI development and deployment. However, implementing these initiatives presents both challenges and opportunities that need careful consideration. This section will explore these aspects, examining the potential hurdles and the positive implications for advancing AI in a safe and ethical manner.

Challenges in Implementation

Implementing the Global AI Safety Commitment and the EU’s AI Act involves navigating various challenges. These include:

- Defining and Measuring AI Safety: Establishing clear and universally accepted definitions of AI safety is crucial for effective implementation. This involves considering various aspects, such as AI system robustness, fairness, transparency, and accountability. Moreover, developing reliable metrics to assess and measure these aspects is essential for monitoring progress and ensuring compliance.

- Ensuring Global Collaboration and Consistency: Achieving effective global AI safety governance requires strong international cooperation. This involves aligning different regulatory frameworks, promoting interoperability between national and regional AI regulations, and fostering knowledge sharing and best practices. Ensuring consistency in AI safety standards across various jurisdictions is vital to avoid regulatory fragmentation and facilitate responsible AI development globally.

- Balancing Innovation and Regulation: Striking a balance between promoting AI innovation and implementing effective safety regulations is crucial. Overly restrictive regulations could stifle innovation and hinder the development of beneficial AI applications. On the other hand, inadequate regulation could lead to unintended consequences and undermine public trust in AI. Finding the right balance requires careful consideration of the specific risks associated with different AI applications and the potential benefits they offer.

- Addressing Ethical Concerns: AI systems can raise ethical concerns related to bias, discrimination, privacy, and job displacement. Implementing the Global AI Safety Commitment and the EU’s AI Act requires addressing these concerns proactively. This involves developing ethical frameworks for AI development, ensuring fairness and transparency in algorithms, and mitigating potential negative societal impacts.

- Enforcing Compliance and Accountability: Effective enforcement mechanisms are essential for ensuring compliance with the Global AI Safety Commitment and the EU’s AI Act. This involves establishing clear penalties for violations, creating robust monitoring and auditing systems, and ensuring transparency in the decision-making process. Accountability mechanisms are also crucial for holding developers and deployers responsible for the safety and ethical implications of their AI systems.

Opportunities for Advancing Responsible AI

The Global AI Safety Commitment and the EU’s AI Act present significant opportunities for advancing responsible AI development. These include:

- Promoting Trust and Public Acceptance: By establishing clear safety and ethical standards, these initiatives can foster public trust in AI and encourage its responsible adoption. This can lead to greater societal acceptance of AI technologies and their potential benefits.

- Driving Innovation in AI Safety: The commitment and the act can stimulate research and development in AI safety technologies and best practices. This can lead to advancements in areas such as robustness, fairness, transparency, and accountability, contributing to the development of more reliable and ethical AI systems.

- Creating a Level Playing Field for AI Development: By setting global standards for AI safety, these initiatives can create a level playing field for AI development across different regions and industries. This can foster healthy competition and encourage responsible innovation in the global AI landscape.

- Enhancing International Collaboration: The Global AI Safety Commitment and the EU’s AI Act can facilitate greater international collaboration in AI research, development, and deployment. This can lead to the sharing of best practices, the development of common standards, and the creation of joint initiatives for advancing responsible AI.

- Addressing Societal Challenges: By promoting responsible AI development, these initiatives can help address various societal challenges, such as healthcare, climate change, and poverty. This can lead to the development of AI-powered solutions that contribute to a more sustainable and equitable future.

Potential Solutions to Address Challenges

Addressing the challenges associated with implementing the Global AI Safety Commitment and the EU’s AI Act requires a multi-faceted approach. Some potential solutions include:

- Establishing International AI Safety Organizations: Creating international organizations dedicated to AI safety can facilitate collaboration, research, and standard setting. These organizations could bring together experts from various fields, including AI, ethics, law, and policy, to develop best practices and guidelines for responsible AI development.

- Developing Global AI Safety Certification Programs: Implementing certification programs for AI systems can provide assurance of their safety and ethical compliance. These programs could involve independent audits and assessments based on predefined standards and guidelines. Successful certification could enhance public trust in AI and create incentives for developers to prioritize safety and ethical considerations.

- Investing in AI Safety Research and Development: Increased investment in AI safety research and development is crucial for addressing the challenges of robust, fair, and transparent AI. This could involve funding research projects, developing new tools and techniques, and supporting the development of AI safety expertise.

- Promoting Public Education and Awareness: Raising public awareness about AI safety and ethical considerations is essential for fostering informed discussion and building public trust. This could involve educational campaigns, public forums, and online resources that explain the potential benefits and risks of AI technologies.

- Encouraging Responsible AI Innovation: Governments and industry stakeholders should incentivize the development of AI technologies that prioritize safety, fairness, and ethical considerations. This could involve funding programs, tax incentives, and public procurement policies that favor responsible AI solutions.