Driverless cars spark both excitement and concern, and one issue in particular captures that tension: the possibility of age and race biases in pedestrian detection. As we inch closer to a future where self-driving vehicles navigate our streets, a critical question emerges: are these systems fair and unbiased?

The technology behind pedestrian detection is incredibly complex, and while it promises to enhance safety, it also raises the possibility of perpetuating societal biases.

Imagine a driverless car failing to recognize a pedestrian because of their age or race. This is not a purely theoretical problem: research suggests that biases can creep into machine learning algorithms, with potential real-world consequences. The challenge is ethical as well as technical, and it demands a thoughtful, proactive approach to ensure that driverless cars are truly safe for everyone.

The Challenge of Pedestrian Detection in Driverless Cars

Ensuring the safety of pedestrians is paramount in the development of driverless cars. Pedestrian detection systems are crucial components of these vehicles, tasked with identifying and tracking pedestrians in real-time. However, these systems face numerous challenges, making their development and deployment a complex undertaking.

Technical Complexities of Pedestrian Detection

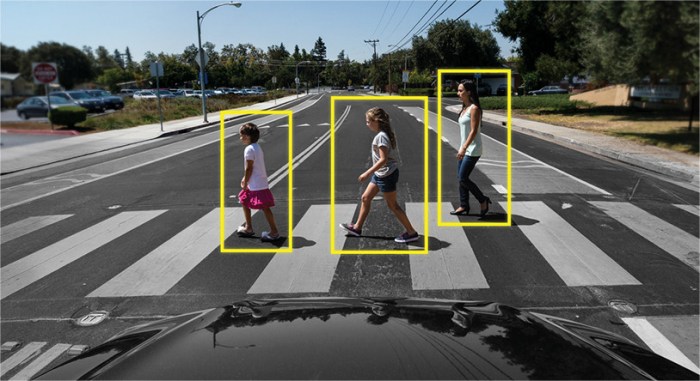

Pedestrian detection systems rely on a combination of computer vision algorithms and sensor data to identify pedestrians. These systems typically involve multiple steps:

- Image Acquisition: Cameras and other sensors capture images or video footage of the surrounding environment.

- Object Detection: Algorithms analyze the captured data to identify potential objects, including pedestrians.

- Feature Extraction: Features like shape, size, and movement patterns are extracted from the identified objects.

- Classification: A classifier trained on labeled examples uses the extracted features to decide whether the object is a pedestrian or another entity.

- Tracking: Once a pedestrian is detected, the system follows their position over time to predict their trajectory.
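The tracking step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular vehicle does it: detections are assumed to arrive as axis-aligned bounding boxes, each existing track is greedily matched to the new detection with the highest intersection-over-union (IoU), and the next position is predicted with a constant-velocity model. The names `Track`, `iou`, and `update_tracks` and the 0.3 IoU threshold are hypothetical. Production trackers typically use Kalman filters and optimal (for example, Hungarian) assignment instead of greedy matching.

```python
from dataclasses import dataclass

# Hypothetical box format: (x_min, y_min, x_max, y_max) in image pixels
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    velocity: tuple[float, float] = (0.0, 0.0)  # (dx, dy) in pixels per frame

    def predict(self) -> Box:
        """Constant-velocity guess of where the box will be in the next frame."""
        dx, dy = self.velocity
        x0, y0, x1, y1 = self.box
        return (x0 + dx, y0 + dy, x1 + dx, y1 + dy)


def update_tracks(tracks: list[Track], detections: list[Box], min_iou: float = 0.3) -> list[Track]:
    """Greedily match each track's predicted box to its best unmatched detection."""
    unmatched = list(detections)
    next_id = max((t.track_id for t in tracks), default=-1) + 1
    updated = []
    for track in tracks:
        predicted = track.predict()
        best = max(unmatched, key=lambda d: iou(predicted, d), default=None)
        if best is not None and iou(predicted, best) >= min_iou:
            unmatched.remove(best)
            # velocity estimated from the shift of the box's top-left corner
            velocity = (best[0] - track.box[0], best[1] - track.box[1])
            updated.append(Track(track.track_id, best, velocity))
        # in this simplified sketch, tracks with no match are dropped
    for det in unmatched:  # leftover detections start new tracks
        updated.append(Track(next_id, det))
        next_id += 1
    return updated
```

Feeding it a box starting at x = 100 followed by one starting at x = 105 yields a velocity of 5 pixels per frame, so the next predicted box starts at x = 110. Unmatched tracks are simply dropped here; a real tracker would keep them alive for a few frames to ride out brief occlusions.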

The complexity arises from the need to handle various factors, including:

- Variability in Pedestrian Appearance: Pedestrians come in different shapes, sizes, and clothing styles, making it challenging for the system to consistently identify them.

- Occlusion: Pedestrians can be partially or fully obscured by other objects, such as vehicles or trees, making detection difficult.

- Lighting Conditions: Different lighting conditions, including darkness, glare, and shadows, can significantly affect the performance of the system.

- Weather: Rain, snow, fog, and other weather conditions can impair visibility and make pedestrian detection more challenging.

Factors Influencing Accuracy

Several factors can influence the accuracy of pedestrian detection systems, including:

- Sensor Quality: The quality of the sensors used for image acquisition plays a crucial role in the accuracy of the system. High-resolution cameras with a wide field of view are essential for capturing detailed images of pedestrians.

- Algorithm Performance: The efficiency and robustness of the algorithms used for object detection, feature extraction, and classification directly impact the system's accuracy. Advancements in deep learning techniques have significantly improved the performance of these algorithms.

- Training Data: The system's accuracy is highly dependent on the quality and quantity of the data used to train the algorithms. This data should encompass a wide range of pedestrian appearances, lighting conditions, and weather situations.

- Environmental Conditions: As mentioned earlier, lighting, weather, and other environmental factors can significantly affect the performance of the system. Robust algorithms are needed to handle these variations.
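One practical way to check whether training data covers enough conditions is to count samples per combination of conditions and flag empty or thin cells. The sketch below assumes each training sample carries hypothetical `lighting` and `weather` labels; the category names and the `min_count` threshold are illustrative only, and the same idea extends to pedestrian attributes such as clothing or apparent age.

```python
from collections import Counter
from itertools import product

# Hypothetical condition labels; a real dataset would define its own taxonomy.
LIGHTING = ("day", "dusk", "night")
WEATHER = ("clear", "rain", "fog", "snow")


def coverage_gaps(samples, min_count=1):
    """List (lighting, weather) combinations with fewer than min_count samples.

    samples: iterable of dicts carrying "lighting" and "weather" labels.
    """
    counts = Counter((s["lighting"], s["weather"]) for s in samples)
    # Check every combination, including ones that never appear at all.
    return [combo for combo in product(LIGHTING, WEATHER) if counts[combo] < min_count]
```

A dataset containing only clear-day and rainy-night images would report the other ten of the twelve combinations (such as night with fog) as gaps to fill before training.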

Real-World Scenarios of Failure

Despite significant advancements in pedestrian detection technology, there have been instances where these systems have failed to detect pedestrians, leading to accidents. Some examples include:

- Pedestrians in Darkness: Systems have struggled to detect pedestrians in low-light conditions, particularly when they are wearing dark clothing.

- Pedestrians in Shadows: Pedestrians walking in the shadows of buildings or trees can be difficult to detect, as the system may not recognize them as distinct objects.

- Pedestrians Crossing Unexpectedly: Systems may fail to detect pedestrians who cross the road unexpectedly, such as those running across the street or stepping out from behind parked vehicles.

The Potential for Age and Race Biases in Pedestrian Detection

The development of driverless cars is rapidly progressing, with pedestrian detection systems playing a crucial role in ensuring safety. However, these systems are not immune to biases that can arise from the data they are trained on. This section explores the potential for age and race biases in pedestrian detection algorithms and their potential consequences.

How Biases Can Be Introduced into Machine Learning Algorithms

Machine learning algorithms are trained on vast datasets, and the quality and diversity of this data significantly impact the algorithm's performance. If the training data is biased, the resulting algorithm will likely reflect those biases. In the context of pedestrian detection, biases can be introduced in several ways:

- Data Collection Bias: If the data used to train the algorithm is not representative of the real-world population, the algorithm may struggle to accurately detect pedestrians from underrepresented groups. For instance, if the training data primarily features young, white pedestrians, the algorithm may be less accurate in detecting older or minority pedestrians.

- Labeling Bias: The process of labeling data for training can also introduce biases. If human annotators have implicit biases, they might mislabel pedestrians from certain groups, leading to inaccurate training data and biased algorithms.

- Algorithmic Bias: Even if the training data is unbiased, the algorithm itself can exhibit biases due to its design or the way it processes information. For example, an algorithm might prioritize certain features over others, leading to biased outcomes.
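Data collection bias can be made measurable by comparing each group's share of the training data with its share of a reference population. In this sketch the function names, group labels, reference shares, and the 0.8 cutoff are all hypothetical; choosing an appropriate reference population (for example, the cities where the vehicle will operate) is itself a judgment call.

```python
from collections import Counter


def representation_ratios(dataset_groups, reference_shares):
    """Dataset share of each group divided by its share in a reference population.

    dataset_groups: one group label per annotated pedestrian in the training set.
    reference_shares: group -> expected fraction of the population (assumed known).
    A ratio below 1.0 means the group is underrepresented in the training data.
    """
    counts = Counter(dataset_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset_groups is empty")
    return {g: (counts[g] / total) / share for g, share in reference_shares.items()}


def underrepresented(ratios, threshold=0.8):
    """Groups whose representation ratio falls below the chosen threshold."""
    return sorted(g for g, r in ratios.items() if r < threshold)
```

With 5 children among 100 annotated pedestrians and an assumed reference share of 20%, the ratio for children is 0.25, flagging them as heavily underrepresented.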

Research Findings on Age and Race Biases in Pedestrian Detection Systems

Several research studies have highlighted the potential for age and race biases in existing pedestrian detection systems. For example, a study by researchers at the University of Washington found that a popular pedestrian detection system was significantly less accurate at detecting darker-skinned pedestrians, particularly in low-light conditions.

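A likely explanation is that darker-skinned pedestrians were underrepresented in the data used to train the system, and that low light further reduces the visual contrast the detector depends on. Whatever the cause, the result is a system that protects some pedestrians less reliably than others.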

Hypothetical Scenario Demonstrating Harmful Outcomes

Imagine a scenario where a driverless car is navigating a busy city street. The car’s pedestrian detection system, trained on a dataset primarily featuring young, white pedestrians, encounters an elderly Black pedestrian crossing the road. Due to the system’s bias, it fails to detect the pedestrian, resulting in a tragic accident.

This hypothetical scenario illustrates how biases in pedestrian detection systems can have real-world consequences, leading to potentially life-threatening situations.

The Impact of Bias on Pedestrian Safety

The ethical implications of biased pedestrian detection systems in driverless cars are significant. These systems are designed to identify and react to pedestrians, but if they are biased, they could potentially lead to dangerous situations where certain groups of people are more likely to be missed or misidentified.


The potential for biases to disproportionately impact certain demographic groups is a serious concern. For example, a system that detects darker-skinned pedestrians less reliably than lighter-skinned ones would expose pedestrians of color to a higher risk of accidents.

Safety Risks Associated with Biased Systems

The safety risks associated with biased pedestrian detection systems are substantial and can have severe consequences. These systems are designed to ensure the safety of pedestrians by detecting them and taking appropriate action to avoid collisions. However, when these systems are biased, they can fail to recognize certain groups of pedestrians, increasing the risk of accidents and injuries.

- Increased Risk of Accidents: Biased systems may fail to detect pedestrians from certain demographic groups, raising the risk of collisions. For instance, a system that is biased against older pedestrians might miss them entirely.

- Higher Injury Rates: Harm can also occur when a pedestrian is detected but misclassified. If the vehicle misjudges what the object is or how it will move, it may brake too late or maneuver incorrectly, making a collision, and therefore injury, more likely.

- Disproportionate Impact: The impact of biased systems is not uniform across all groups. Certain demographic groups, such as people with darker skin tones or older individuals, are more likely to be affected by these biases.

Mitigating Bias in Pedestrian Detection Systems

The potential for bias in pedestrian detection systems is a serious concern. These systems are responsible for making critical decisions about vehicle behavior, and any biases could have life-threatening consequences. Therefore, it is crucial to develop strategies for mitigating bias in these systems.

Strategies for Mitigating Bias

There are several strategies that can be employed to mitigate bias in pedestrian detection algorithms. These strategies focus on various stages of the development and training process, aiming to ensure fairness and accuracy in the system’s decision-making.

- Data Augmentation and Balancing: One of the most effective ways to reduce bias is to ensure that the training data is diverse and representative of the real world. This involves augmenting the dataset with images of pedestrians of various backgrounds, ages, races, and clothing styles.

Additionally, data balancing techniques can be used to address any imbalances in the dataset, so that the algorithm is not biased toward certain groups.

- Bias Detection and Mitigation Techniques: Various techniques can be used to detect and mitigate bias during the training process. These include fairness metrics, which quantify the degree of bias in the model's predictions. By monitoring these metrics, developers can identify and address biases as they arise during training.

- Adversarial Training: In adversarial debiasing, a second model (the adversary) tries to predict a sensitive attribute, such as a pedestrian's apparent age or skin tone, from the main model's internal representations or outputs. The main model is trained to defeat the adversary, which discourages it from relying on information correlated with that attribute. If the adversary still succeeds, that signals a bias that ordinary accuracy metrics might not reveal.

- Explainable AI (XAI): XAI techniques help explain the reasoning behind the algorithm's decisions, making biases easier to identify and address. By analyzing the model's predictions and understanding the factors that influence its decisions, developers can pinpoint and correct biases that might be present.
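As an example of a fairness metric, the sketch below computes an equal-opportunity gap: the difference in true-positive rate (the fraction of real pedestrians that get detected) between the best- and worst-served groups, where 0 means every group is detected at the same rate. It assumes an evaluation set in which each ground-truth pedestrian has been annotated with a group label, an extra labeling effort that a standard pipeline may not include. The function names and group labels are hypothetical.

```python
from collections import defaultdict


def detection_rates(records):
    """Per-group true-positive rate (recall): share of real pedestrians detected.

    records: iterable of (group, detected) pairs, one per ground-truth pedestrian.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    return {g: hits[g] / totals[g] for g in totals}


def equal_opportunity_gap(records):
    """Largest true-positive-rate difference between any two groups (0 = parity)."""
    rates = detection_rates(records)
    return max(rates.values()) - min(rates.values())
```

If 9 of 10 lighter-skinned and 7 of 10 darker-skinned pedestrians are detected, the gap is 0.2, that is, 20 percentage points.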

The Role of Data Diversity and Representation

Data diversity plays a crucial role in reducing bias in pedestrian detection systems. A diverse dataset that accurately represents the real-world population is essential for training an unbiased algorithm. This includes images of pedestrians from different backgrounds, ages, races, genders, and clothing styles.

- Collecting Data from Diverse Sources: Data should be collected from various sources to ensure a broad representation of the population. This could involve using public datasets, collaborating with diverse communities, and working with organizations that specialize in data collection from underrepresented groups.

- Addressing Data Imbalances: It is important to address any imbalances in the dataset. For example, if the dataset contains a disproportionately large number of images of pedestrians from a particular race or age group, this could lead to biases in the algorithm's predictions.

Techniques like oversampling or undersampling can be used to balance the dataset and ensure that the algorithm is trained on a representative sample of the population.
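Random oversampling, one of the balancing techniques named above, can be sketched with the Python standard library alone. It duplicates randomly chosen samples from smaller groups until every group is as large as the largest one. The function name and the `age_group` field used in the example are hypothetical.

```python
import random
from collections import defaultdict


def oversample_by_group(samples, group_key, seed=0):
    """Randomly duplicate samples from smaller groups until all groups match the largest.

    samples: list of dicts; group_key: name of the field holding the group label.
    Returns a new shuffled list; the input list is left unchanged.
    """
    if not samples:
        return []
    rng = random.Random(seed)  # fixed seed keeps the resampling reproducible
    by_group = defaultdict(list)
    for sample in samples:
        by_group[sample[group_key]].append(sample)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)  # keep every original sample
        # draw with replacement to make up the shortfall for this group
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced
```

Oversampling only repeats existing images, so it can encourage the model to overfit to them. Undersampling the larger groups, or collecting genuinely new data, avoids that at the cost of discarding data or extra collection effort.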

Monitoring and Evaluation of Pedestrian Detection Systems

Ongoing monitoring and evaluation are crucial for identifying and addressing potential biases in pedestrian detection systems. This involves regularly assessing the performance of the system on a diverse range of data and identifying any disparities in its predictions across different groups.

- Regular Performance Evaluation: The system should be regularly evaluated on a diverse set of data to assess its performance across different demographic groups. This includes evaluating the system's accuracy, precision, and recall for various demographics.

- Monitoring for Bias: Metrics should be used to monitor the system for any signs of bias. These metrics could include the difference in performance between different demographic groups or the frequency with which the system makes incorrect predictions for certain groups.

- Transparency and Accountability: It is important to ensure transparency and accountability in the development and deployment of pedestrian detection systems. This involves clearly documenting the data used to train the system, the algorithms employed, and the performance metrics used to evaluate the system.
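Ongoing monitoring can be partly automated by comparing per-group recall from each new evaluation run against a previous baseline. The sketch below assumes recall has already been computed per group on the same held-out evaluation set; the function names, group labels, and the 0.02 tolerance are hypothetical choices, not an established standard.

```python
def recall_regressions(baseline, candidate, max_drop=0.02):
    """Groups whose recall dropped by more than max_drop between two evaluation runs.

    baseline, candidate: dicts mapping group -> recall on the same held-out set.
    A group missing from the candidate run is also flagged, since silently
    dropping a group from evaluation would hide any bias against it.
    """
    return sorted(
        g for g, r in baseline.items()
        if g not in candidate or r - candidate[g] > max_drop
    )


def recall_spread(recalls):
    """Gap between the best- and worst-served groups."""
    return max(recalls.values()) - min(recalls.values())
```

If a new software release raises recall for adults but lowers it for children from 0.90 to 0.85, `recall_regressions` reports `["child"]` and `recall_spread` shows the gap between the best- and worst-served groups widening, a change an aggregate metric could mask.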

The Future of Driverless Cars and Pedestrian Safety

The future of driverless cars holds immense promise for safer roads and more efficient transportation, but it also presents unique challenges, particularly when it comes to pedestrian safety. Ensuring that autonomous vehicles can reliably detect and respond to pedestrians is paramount to realizing the full potential of this technology.

While current pedestrian detection systems have made significant strides, there are still areas for improvement, especially in addressing the potential for age and race biases.

Technological Advancements for Improved Pedestrian Detection

Technological advancements offer a promising path towards improving pedestrian detection accuracy and mitigating bias.

- Enhanced Sensor Fusion: Combining data from multiple sensors, such as cameras, lidar, and radar, can provide a more comprehensive and robust understanding of the surrounding environment. This fusion of data helps to compensate for the limitations of individual sensors, leading to more accurate and reliable pedestrian detection.

For example, lidar can provide precise distance measurements, while cameras can offer detailed visual information. By integrating these data streams, driverless cars can achieve a more accurate and nuanced perception of pedestrians, reducing the risk of misidentification.
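A simple way to combine a precise lidar range with a noisier camera estimate is inverse-variance weighting: each estimate x_i is weighted by 1/σ_i², so the fused value is Σ(x_i/σ_i²) / Σ(1/σ_i²) and the more certain sensor dominates. The sketch below assumes the sensors' errors are independent and roughly Gaussian; the function name and the standard deviations in the example are made-up illustrative values, and real systems usually fuse over time with Kalman-style filters rather than one measurement at a time.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    estimates: list of (value, std_dev) pairs, e.g. distance to a pedestrian in metres.
    Returns (fused_value, fused_std_dev).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(sd <= 0 for _, sd in estimates):
        raise ValueError("standard deviations must be positive")
    weights = [1.0 / sd ** 2 for _, sd in estimates]  # weight = 1 / sigma^2
    total = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    fused_sd = (1.0 / total) ** 0.5  # never larger than the best single sensor
    return fused_value, fused_sd
```

Fusing a camera estimate of 12.0 m (σ = 1.0 m) with a lidar estimate of 10.0 m (σ = 0.1 m) gives about 10.02 m with σ just under 0.1 m: close to the lidar reading and slightly more certain than either sensor alone.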

- Deep Learning and Artificial Intelligence: Advancements in deep learning and artificial intelligence (AI) are revolutionizing the field of computer vision. By training AI models on massive datasets of pedestrian images and videos, these systems can learn to identify pedestrians with greater accuracy and adapt to different lighting conditions, weather patterns, and pedestrian behaviors.

These AI-powered systems can learn to recognize subtle cues, such as body language and clothing, which can enhance their ability to distinguish pedestrians from other objects in the environment.

- Real-Time Adaptive Learning: Driverless cars can benefit from real-time adaptive learning, where the system continuously learns and improves its pedestrian detection capabilities based on its interactions with the real world. This continuous learning process allows the system to adapt to changing conditions and improve its performance over time.

For example, if the system encounters a previously unseen pedestrian behavior, it can learn to recognize and respond to it in the future, enhancing its overall robustness.

The Role of Regulations and Ethical Guidelines

Robust regulations and ethical guidelines are essential to ensure the safety of pedestrians in a future with driverless cars.

- Standardized Testing and Certification: Establishing standardized testing procedures for pedestrian detection systems is crucial to ensure that these systems meet rigorous safety standards. These tests should assess the systems' ability to detect pedestrians in various conditions, including different lighting, weather, and pedestrian behaviors.

The certification process should also include evaluation of the systems' ability to handle edge cases and potential biases.

- Transparency and Accountability: Transparency in the development and deployment of driverless car technology is paramount. This includes providing clear explanations of how pedestrian detection systems work, the data used to train them, and the potential for biases. This transparency fosters public trust and allows for accountability in the event of accidents or safety concerns.

- Ethical Considerations: Developing ethical guidelines for the development and deployment of driverless cars is crucial. These guidelines should address the potential for bias in pedestrian detection systems, ensuring that the technology does not discriminate against certain groups of pedestrians. For example, guidelines should ensure that the systems are not biased toward pedestrians of a particular age, race, or gender.

Stakeholders Involved in Addressing Bias in Pedestrian Detection Systems

Addressing bias in pedestrian detection systems requires a collaborative effort involving a wide range of stakeholders.