The investigation into X over suspected breaches of EU content rules has sent shockwaves through the online world, raising serious questions about how platforms comply with the EU’s Digital Services Act (DSA). The DSA, designed to create a safer and more transparent online environment, imposes strict rules on how platforms handle content moderation and user data.

This investigation examines alleged violations of these rules. Its outcome could affect how we interact with online content and shape the future of platform responsibility.

At the heart of the matter are concerns that specific types of content may have been handled in breach of the DSA’s content moderation rules. The investigation examines how platforms respond to harmful content, how transparently they enforce their policies, and the potential consequences of failing to uphold these standards.

This inquiry goes beyond simple content moderation; it explores the delicate balance between freedom of expression and the need to protect users from harmful content.

The Digital Services Act (DSA)

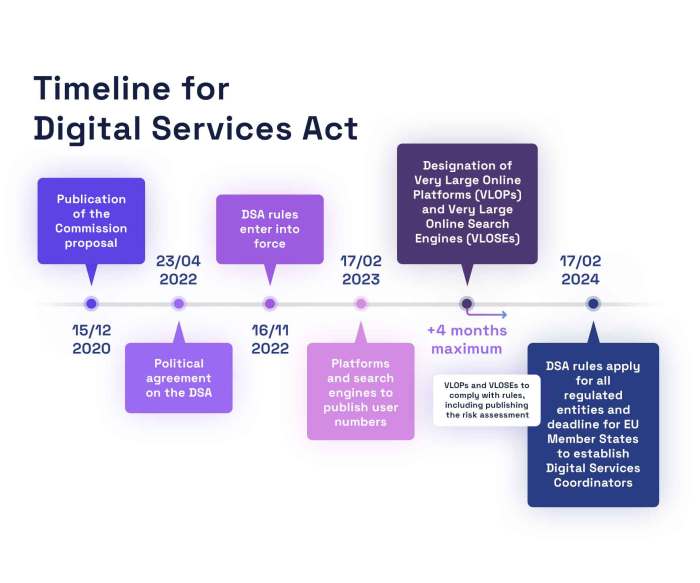

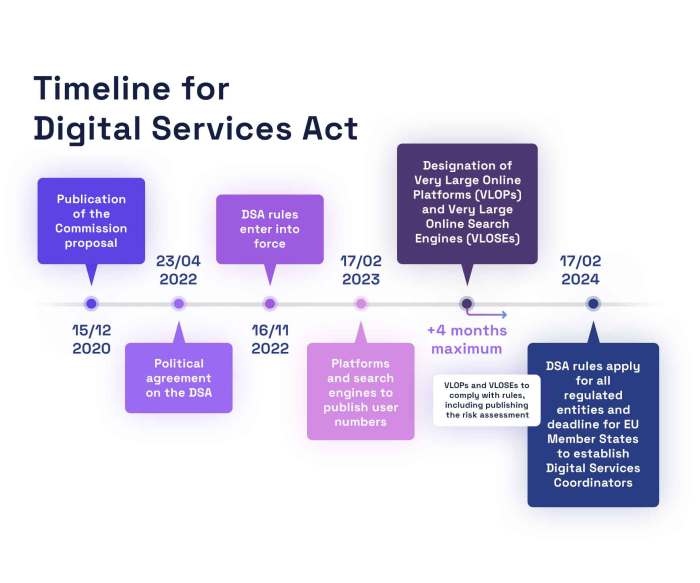

The Digital Services Act (DSA) is a landmark piece of legislation that aims to regulate online platforms and services within the European Union. It’s a response to the growing concerns about the impact of online platforms on society, particularly regarding content moderation, transparency, and the spread of misinformation.

Core Principles and Content Moderation

The DSA is built on several core principles that guide its approach to regulating online platforms. These principles aim to create a safer and more responsible online environment while respecting fundamental rights. Key principles include:

- Transparency: Platforms are required to be transparent about their recommender algorithms, content moderation policies, and how they handle user data. This includes giving users clear information about how their content is moderated and the reasons behind any content removals.

- Accountability: Platforms are held accountable for how they deal with illegal content on their services and for the moderation actions they take. This includes establishing robust systems for reporting and removing illegal content and for responding to user complaints.

- User Rights: The DSA emphasizes user rights, including the right to access information, the right to freedom of expression, and the right to a fair and transparent process when their content is moderated.

The DSA’s focus on content moderation is particularly relevant to online platforms, as it seeks to address the challenges of harmful content, misinformation, and online abuse. The act sets out specific obligations for platforms regarding content moderation, including:

- Proactive Content Moderation:Platforms are required to take proactive steps to identify and remove illegal content, such as hate speech, child sexual abuse material, and terrorist content. This includes developing and implementing robust systems for content detection and removal.

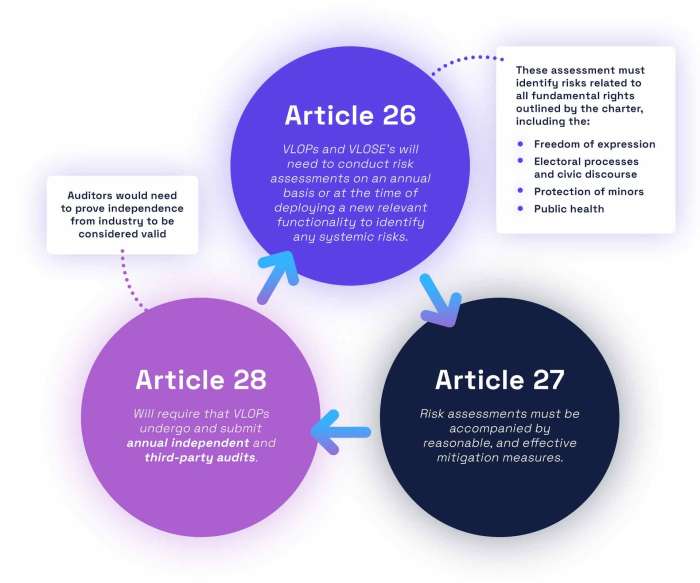

- Risk Assessment:Platforms must conduct risk assessments to identify potential harms associated with their services and implement measures to mitigate those risks. This includes considering the potential for the spread of misinformation, online harassment, and other forms of harm.

- Transparency in Content Moderation:Platforms are required to provide users with clear information about their content moderation policies, including the criteria used for removing content and the process for appealing decisions. This transparency aims to ensure fairness and accountability in content moderation practices.

Obligations for Online Platforms

The DSA imposes several obligations on online platforms, with a particular emphasis on content moderation and transparency. These obligations aim to ensure that platforms are responsible for the content they host and that users have a clear understanding of how their content is being moderated.

- Content Moderation Policies: Platforms must have clear and publicly accessible content moderation policies that outline the types of content they will remove and the process for doing so. These policies should be consistent with the DSA’s principles of transparency, accountability, and user rights.

- Transparency Reports: Platforms are required to publish regular transparency reports that provide detailed information about their content moderation practices. These reports should include data on the number of content removals, the reasons for those removals, and the process for handling user complaints.

- Independent Oversight: Very large online platforms must undergo annual independent audits of their compliance and set up an internal compliance function. Users can also challenge moderation decisions through the platform’s internal complaint-handling system and through certified out-of-court dispute settlement bodies.
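To make the transparency-report obligation more concrete, the following Python sketch aggregates individual moderation decisions into the kind of totals such a report might contain: removals overall and by reason, the share of automated decisions, and how user complaints were resolved. The `ModerationDecision` record and its fields are hypothetical; the official reporting format may differ, and this sketch does not reproduce it.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ModerationDecision:
    """One moderation action (hypothetical schema, not an official DSA format)."""
    item_id: str
    reason: str                      # e.g. "illegal_hate_speech", "terms_of_service"
    automated: bool                  # True if no human reviewed the decision
    appealed: bool                   # True if the user filed a complaint
    reversed_on_appeal: bool = False # True if the complaint led to reversal


def build_transparency_summary(decisions: list[ModerationDecision]) -> dict:
    """Aggregate individual decisions into report-style totals."""
    by_reason = Counter(d.reason for d in decisions)
    appeals = [d for d in decisions if d.appealed]
    return {
        "total_removals": len(decisions),
        "removals_by_reason": dict(by_reason),
        # Share of decisions taken without human review (0.0 when there are none).
        "automated_share": (
            sum(d.automated for d in decisions) / len(decisions) if decisions else 0.0
        ),
        "complaints_received": len(appeals),
        "complaints_upheld": sum(d.reversed_on_appeal for d in appeals),
    }


if __name__ == "__main__":
    sample = [
        ModerationDecision("a1", "illegal_hate_speech", automated=True, appealed=False),
        ModerationDecision("a2", "terms_of_service", automated=False, appealed=True,
                           reversed_on_appeal=True),
    ]
    print(build_transparency_summary(sample))
```

Keeping one record per decision and deriving totals afterwards means the same log can feed both the public report and an auditor’s more detailed review.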

Potential Impact on the Online Content Ecosystem

The DSA is expected to have a significant impact on the online content ecosystem, influencing how platforms operate, how users interact with content, and the overall landscape of online information. Here are some potential impacts:

- Increased Transparency and Accountability: The DSA’s requirements for transparency and accountability are likely to lead to greater openness about how platforms operate and how content is moderated. This could increase trust in online platforms and empower users to better understand and engage with the content they encounter.

- Improved Content Moderation: The DSA’s emphasis on proactive content moderation and risk assessment could lead to more effective and consistent content moderation practices. This could help to reduce the spread of harmful content, misinformation, and online abuse, creating a safer online environment for users.

- Shifts in Platform Responsibilities: The DSA’s obligations for platforms could lead to significant shifts in their responsibilities, particularly regarding content moderation. Platforms may need to invest more resources in developing robust content moderation systems, establishing independent oversight mechanisms, and responding to user complaints.

Suspected Breaches of EU Content Rules

The Digital Services Act (DSA) is a landmark piece of legislation designed to regulate online platforms and protect users from harmful content. The DSA introduces a range of content rules that platforms must adhere to, with the aim of creating a safer and more responsible online environment.

This investigation delves into suspected breaches of these content rules, exploring the specific regulations involved, potential examples of violations, and the consequences for non-compliance.

Specific Content Rules Under Investigation

The investigation is focusing on several key content rules outlined in the DSA, including:

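- Illegal Content: Platforms must act expeditiously to remove or disable access to illegal content once they become aware of it, such as content that incites violence, illegal hate speech, or child sexual abuse material. What counts as illegal is determined by EU or national law rather than by the DSA itself.

- Transparency and Accountability: Platforms must provide users with clear information about their content moderation policies, including how they handle complaints and appeals.

- Mitigation of Disinformation: Very large online platforms must assess and mitigate systemic risks, including the risks that disinformation poses to civic discourse and electoral processes. The DSA does not itself require the removal of false or misleading information that is not illegal; mitigation measures can instead include adapting recommender systems and content moderation processes.

- Protection of Minors: Platforms accessible to minors must take appropriate and proportionate measures to ensure a high level of privacy, safety, and security for them, which may include age verification and parental controls.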

Examples of Potential Breaches

The investigation has identified several potential breaches of these content rules, with examples including:

- Hate Speech on Social Media Platforms: Social media platforms have been accused of failing to remove hate speech content promptly, allowing it to proliferate and incite violence against marginalized groups.

- Spread of Disinformation on Online News Sites: Some online news sites have been accused of deliberately spreading false or misleading information, often with the aim of influencing public opinion or generating clicks.

- Exposure of Minors to Harmful Content on Video-Sharing Platforms: Video-sharing platforms have been criticized for failing to implement adequate safeguards to protect minors from exposure to inappropriate or harmful content.

Consequences of Violating Content Rules

The DSA outlines a range of penalties for platforms that violate its content rules. These penalties include:

- Fines: Platforms can be fined up to 6% of their global annual turnover for breaches of the DSA.

- Platform Limitations: In cases of repeated or egregious violations, platforms may face restrictions on their operations, such as being required to change or remove certain features and, as a last resort, a temporary suspension of their service in the EU.

- Legal Action: Individuals or organizations can also pursue legal action against platforms for violating the DSA’s content rules.
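The 6% ceiling is simple arithmetic on a provider’s global annual turnover. The Python sketch below computes that upper bound only; the actual amount of any fine depends on factors such as the gravity and duration of the breach, which the sketch does not model. The turnover figure in the usage note is hypothetical.

```python
from decimal import Decimal

# Maximum fine rate under the DSA: 6% of global annual turnover.
MAX_FINE_RATE = Decimal("0.06")


def max_dsa_fine(global_annual_turnover: Decimal) -> Decimal:
    """Return the upper bound of a DSA fine for a given global annual turnover.

    This is a ceiling, not a prediction of the fine actually imposed.
    """
    if global_annual_turnover < 0:
        raise ValueError("turnover cannot be negative")
    # Decimal avoids binary floating-point rounding on currency amounts.
    return global_annual_turnover * MAX_FINE_RATE
```

For a hypothetical turnover of €3 billion, `max_dsa_fine(Decimal("3000000000"))` gives a ceiling of €180 million.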

The Scope and Methodology of the Investigation

This investigation aims to determine whether the suspected breaches of EU content rules under the Digital Services Act (DSA) actually occurred, assess the extent of compliance with the DSA’s provisions, and identify any violations. It employs a multi-faceted approach that combines data collection, analysis, and expert evaluation.

The primary focus is on gathering evidence from various sources, including platform data, user reports, and expert opinions.


Data Collection and Analysis

The investigation relies on a comprehensive data collection strategy to gather relevant information. This includes:

- Platform Data: Accessing platform data, such as user content, moderation decisions, and algorithms, is crucial to understand the platform’s operations and potential violations. This data can provide insights into the platform’s content moderation practices and the effectiveness of its systems in addressing harmful content.

- User Reports: User reports play a vital role in identifying potential breaches. These reports provide valuable insights into users’ experiences and highlight specific instances of content that may violate the DSA’s rules.

- Expert Opinions: Engaging with experts in relevant fields, such as law, technology, and social media, provides valuable perspectives and insights. Expert opinions can help interpret platform data, assess compliance with the DSA’s provisions, and identify potential areas of concern.

The collected data is then subjected to rigorous analysis to identify patterns, trends, and potential violations. This analysis involves:

- Content Analysis: Analyzing user content to identify instances of prohibited content, such as hate speech, disinformation, and illegal content, is a key aspect of the investigation. This analysis can help determine whether the platform has taken appropriate measures to remove or mitigate such content.

- Algorithm Analysis: Examining the algorithms used by platforms to recommend content and moderate user interactions is essential. This analysis can help assess the potential for algorithmic bias and its impact on content moderation decisions.

- Transparency Analysis: Evaluating the platform’s transparency measures, including information about its content moderation policies, algorithms, and enforcement practices, is crucial to determine whether the platform is meeting the DSA’s transparency requirements.
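As a rough illustration of the kind of analysis described above, the Python sketch below computes a few indicators an analyst might derive from notice-handling logs: how many notices are still pending, what share of decided notices led to action, and the median time to a decision. The `NoticeRecord` schema is an assumption for illustration, not a format platforms are known to provide.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class NoticeRecord:
    """A user notice and the platform's response (hypothetical log schema)."""
    notice_id: str
    received_at: datetime
    decided_at: Optional[datetime]  # None while the notice is still pending
    actioned: bool                  # True if the content was removed or restricted


def notice_handling_metrics(records: list[NoticeRecord]) -> dict:
    """Compute simple indicators from a list of notice records."""
    decided = [r for r in records if r.decided_at is not None]
    # Hours between receiving a notice and deciding on it, for decided notices only.
    hours_to_decision = [
        (r.decided_at - r.received_at).total_seconds() / 3600 for r in decided
    ]
    return {
        "notices": len(records),
        "pending": len(records) - len(decided),
        "action_rate": (
            sum(r.actioned for r in decided) / len(decided) if decided else 0.0
        ),
        "median_hours_to_decision": (
            median(hours_to_decision) if hours_to_decision else None
        ),
    }
```

The median is used instead of the mean so that a handful of very slow cases does not dominate the picture; an investigator would likely look at both.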

Challenges and Complexities

The investigation faces several challenges, including:

- Cross-Border Cooperation: Many platforms operate across multiple jurisdictions, making cross-border cooperation essential for effective enforcement. Coordinating with authorities in different countries can be complex, requiring effective communication and collaboration.

- Access to Data: Obtaining access to platform data can be challenging, as platforms may be reluctant to share sensitive information. Negotiations and legal frameworks are necessary to ensure access to the data required for the investigation.

- Technical Complexity: Understanding and analyzing complex algorithms and platform operations can be technically challenging. Expertise in data science, artificial intelligence, and platform engineering is essential for effective investigation.

- Evolving Landscape: The online environment is constantly evolving, with new technologies and platforms emerging. The investigation must adapt to these changes and ensure its methods remain relevant and effective.

Implications for Online Platforms and Users

The investigation into suspected breaches of EU content rules under the DSA has far-reaching implications for online platforms and their users. The potential regulatory actions and changes in content moderation practices could significantly alter the digital landscape, impacting how platforms operate and how users interact with online content.

Impact on Online Platforms

The investigation’s findings could lead to a range of regulatory actions, from fines and warnings to more stringent content moderation requirements. Platforms may face pressure to implement more robust content moderation systems, potentially leading to increased investment in technology and human resources.

- Increased Content Moderation: Platforms may be required to adopt stricter content moderation policies and practices, including expanding their efforts to identify and remove illegal content. This could involve implementing new algorithms, hiring more content moderators, and collaborating with external experts.

- Transparency and Accountability: The DSA emphasizes transparency and accountability, requiring platforms to provide more information about their content moderation practices. This could include publishing reports on the volume and types of content removed, as well as providing clear guidelines for users on how to report content and appeal decisions.

- Potential for Fines and Sanctions: Non-compliance with the DSA’s requirements could result in significant fines, potentially impacting platforms’ financial stability and profitability. The investigation could also lead to other sanctions, such as restrictions on platform features or even temporary bans.

Impact on Users

The investigation’s outcomes could also have significant implications for users, potentially impacting their access to content, user rights, and platform accountability.

- Changes in Content Availability: Depending on the investigation’s findings, platforms may remove or restrict access to certain types of content, potentially affecting users’ ability to access information or express themselves freely online.

- Enhanced User Rights: The DSA aims to strengthen user rights, such as the right to access information about platform algorithms and the right to appeal content moderation decisions. This could empower users to have a greater say in how platforms operate and how their content is moderated.

- Increased Platform Accountability: The investigation could lead to increased accountability for platforms, potentially making them more responsive to user complaints and concerns. This could involve providing clearer and more accessible channels for users to report content violations and receive timely responses.

Societal Implications

The investigation raises important questions about the balance between free speech and content moderation, highlighting the complex challenges of regulating online platforms in a way that protects both individual rights and societal interests.

- Balancing Free Speech and Content Moderation: The investigation underscores the need to strike a balance between protecting freedom of expression and preventing the spread of harmful content online. This delicate balance requires careful consideration of the potential impacts on both individual users and society as a whole.

- Impact on Online Discourse: The investigation’s outcomes could influence the nature of online discourse, potentially shaping the types of content that are shared and consumed online. This could have significant implications for the quality and diversity of online conversations.

- Role of Technology and Regulation: The investigation highlights the growing role of technology and regulation in shaping the online environment. It underscores the importance of finding effective ways to regulate online platforms without stifling innovation or limiting freedom of expression.

Future Directions and Recommendations

The DSA’s implementation marks a significant step towards a safer and more accountable online environment. However, the evolving nature of the digital landscape necessitates continuous refinement and adaptation of content moderation practices. This section explores recommendations for improving content moderation, addressing challenges, and navigating the evolving digital landscape.

Recommendations for Improving Content Moderation Practices

The effectiveness of content moderation hinges on a multifaceted approach that balances user safety with freedom of expression. The following recommendations can enhance content moderation practices:

- Transparency and Accountability: Platforms should provide clear and accessible information about their content moderation policies, including the rationale behind decisions and the mechanisms for appeals. This transparency fosters trust and accountability, enabling users to understand the principles governing content moderation.

- Human Oversight and AI Collaboration: While AI tools can help identify harmful content at scale, human oversight is crucial for nuanced decision-making. Combining AI with human judgment helps make content moderation more accurate and ethical, addressing potential biases and reducing the risk of over-censorship.

- User Empowerment: Platforms should empower users to report problematic content and engage in constructive dialogue about content moderation policies. User feedback can provide valuable insights and help platforms refine their approaches.

- Contextual Understanding: Content moderation should take into account the context in which content is shared. This involves considering factors such as the intent of the user, the audience, and the potential impact of the content.
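One common way to combine automated detection with human judgment is confidence-based routing: a classifier’s score decides whether an item is actioned automatically, sent to a human moderator who can weigh context and intent, or left alone. The Python sketch below assumes a hypothetical classifier that outputs a harm probability; the `ClassifierResult` type and both threshold values are illustrative placeholders, not recommended settings.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real system would tune these per policy area.
AUTO_ACTION_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.60


@dataclass
class ClassifierResult:
    item_id: str
    harm_score: float  # model's estimated probability that the item violates policy


def route(result: ClassifierResult) -> str:
    """Decide what happens to an item based on classifier confidence."""
    if not 0.0 <= result.harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if result.harm_score >= AUTO_ACTION_THRESHOLD:
        # High confidence: restrict automatically, but keep the appeal path open.
        return "auto_restrict_with_appeal"
    if result.harm_score >= HUMAN_REVIEW_THRESHOLD:
        # Uncertain zone: a human moderator considers context before deciding.
        return "human_review"
    return "no_action"
```

Widening the band between the two thresholds sends more items to human review, trading moderator workload for fewer automated mistakes.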

Addressing Challenges Related to Content Moderation and Online Safety

The digital landscape presents unique challenges in regulating content moderation. These challenges require innovative strategies:

- Combating Disinformation and Manipulation: The spread of misinformation and manipulation poses a significant threat to online safety and democratic processes. Platforms need to develop robust mechanisms for detecting and mitigating these threats, such as fact-checking initiatives, collaboration with independent fact-checkers, and algorithms that identify and demote false or misleading content.

- Protecting Vulnerable Groups: Platforms should prioritize the protection of vulnerable groups from online harm, such as children, minorities, and individuals facing discrimination. This involves implementing targeted measures, such as age verification mechanisms, content filtering tools, and proactive measures to combat hate speech and harassment.

- Balancing Freedom of Expression with User Safety: Striking a balance between freedom of expression and user safety is a delicate task. Platforms need to adopt a nuanced approach that respects diverse viewpoints while ensuring a safe online environment. This requires clear and transparent policies, robust appeals mechanisms, and continuous dialogue with stakeholders.
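Demoting content, as opposed to removing it, can be sketched as a ranking adjustment: items disputed by independent fact-checkers stay available but receive a reduced score, so they surface less often. In the Python sketch below, the `Post` fields and the `DEMOTION_FACTOR` value are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical multiplier: disputed items keep 30% of their ranking score.
DEMOTION_FACTOR = 0.3


@dataclass
class Post:
    post_id: str
    relevance: float              # score assigned by the ranking model
    disputed_by_fact_check: bool  # True if independent fact-checkers rated it false


def effective_score(post: Post) -> float:
    """Apply the demotion multiplier to disputed posts; others are unchanged."""
    if post.disputed_by_fact_check:
        return post.relevance * DEMOTION_FACTOR
    return post.relevance


def rank_feed(posts: list[Post]) -> list[str]:
    """Return post IDs ordered by effective score, highest first.

    Disputed posts are demoted, not removed, so they still appear in the feed.
    """
    return [p.post_id for p in sorted(posts, key=effective_score, reverse=True)]
```

Because nothing is deleted, this approach leaves the content accessible while limiting its reach, which is one way of respecting freedom of expression while still addressing misleading material.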

Navigating the Evolving Digital Landscape

The digital landscape is constantly evolving, with new technologies and platforms emerging. This evolution necessitates continuous adaptation of content moderation practices:

- Emerging Technologies and Content Moderation: The rise of technologies such as artificial intelligence (AI), virtual reality (VR), and augmented reality (AR) presents new challenges and opportunities for content moderation. Platforms need to develop strategies for moderating content generated or shared in these emerging environments.

- Cross-Border Cooperation: Content moderation often transcends national borders, requiring collaboration between platforms and regulators across different jurisdictions. International cooperation is essential for tackling global challenges related to online safety and content regulation.

- User Education and Awareness: Empowering users with knowledge and critical thinking skills is crucial for navigating the complexities of the online world. Platforms can play a role in promoting digital literacy and critical media consumption habits.