Europe’s data privacy approach, particularly the General Data Protection Regulation (GDPR), has significant implications for the development and use of powerful AI models like GPT and DALL-E. These models, capable of generating text and images, rely heavily on vast datasets for training, raising questions about data collection, storage, and usage in compliance with GDPR’s strict requirements.

Imagine a world where AI models can create realistic images of individuals, or generate text that mirrors a person’s writing style. While this opens doors for innovation, it also raises concerns about potential misuse and privacy violations. GDPR aims to address these concerns by establishing a framework for data protection, ensuring individuals have control over their personal information.

GDPR and its Impact on AI

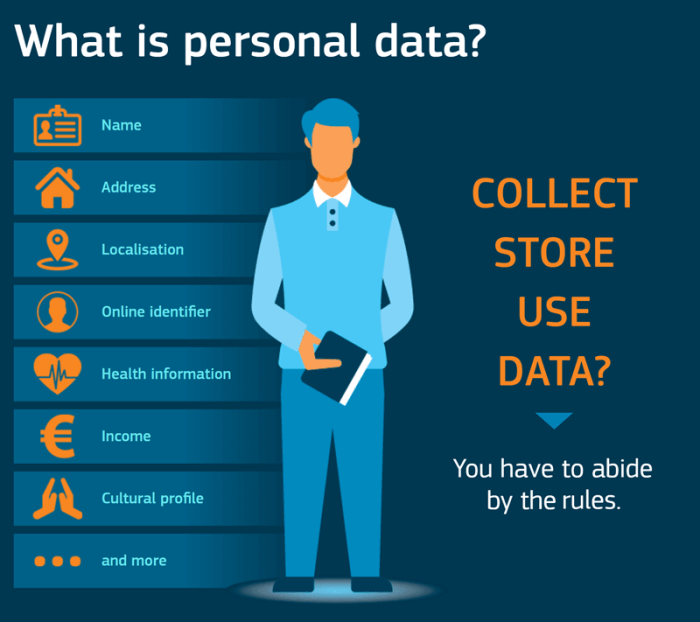

The General Data Protection Regulation (GDPR), which the European Union adopted in 2016 and which has applied since 2018, has significantly impacted the development and deployment of AI models like GPT and DALL-E. It aims to protect the personal data of individuals within the EU, placing strict rules on how companies collect, process, and store such data.

Data Collection and Processing

GDPR mandates that personal data be collected lawfully, fairly, and transparently. This means that users must be informed about how their data is being collected, processed, and used. AI models like GPT and DALL-E often rely on vast datasets, which may include personal information. These datasets must be collected in compliance with GDPR.

Data Storage and Security

GDPR requires companies to ensure the security of personal data and protect it from unauthorized access, processing, or disclosure. This necessitates robust security measures, such as encryption and access control, to safeguard sensitive information. Organizations that hold large training datasets for AI models must apply these security standards to that data.

Data Usage and Purpose Limitation

GDPR restricts the use of personal data to specific, legitimate purposes. AI models, especially those trained on large datasets, must ensure that their data usage aligns with the stated purposes and avoids any misuse or unauthorized processing.

Data Subject Rights

Individuals have specific rights under GDPR, including the right to access, rectify, and erase their personal data. This means that users can request information about their data, correct inaccuracies, and request the deletion of their data under certain circumstances. AI models and their developers must comply with these data subject rights.
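To make these rights concrete, here is a minimal Python sketch of how a service might expose access, rectification, and erasure over the records it holds. `PersonalDataStore` and its method names are hypothetical and invented for illustration. The sketch covers only stored records, not whatever a model may already have learned from them during training.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalDataStore:
    """Hypothetical in-memory store keyed by data-subject ID."""
    records: dict[str, dict[str, str]] = field(default_factory=dict)

    def access(self, subject_id: str) -> dict[str, str]:
        # Right of access: return a copy of everything held about the subject.
        return dict(self.records.get(subject_id, {}))

    def rectify(self, subject_id: str, field_name: str, value: str) -> None:
        # Right to rectification: correct a single inaccurate field.
        if subject_id not in self.records:
            raise KeyError(f"no data held for {subject_id}")
        self.records[subject_id][field_name] = value

    def erase(self, subject_id: str) -> bool:
        # Right to erasure: delete the record and report whether anything was removed.
        return self.records.pop(subject_id, None) is not None


store = PersonalDataStore({"u1": {"email": "old@example.com"}})
store.rectify("u1", "email", "new@example.com")
print(store.access("u1"))
print(store.erase("u1"))
```

A real system would also need to authenticate the requester and propagate deletions to backups and downstream copies, which this sketch leaves out.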

Examples of GDPR Impact on GPT and DALL-E

* Training Data: If a dataset used to train a GPT model contains personal information, the model’s developers must ensure compliance with GDPR. This could involve anonymizing the data, obtaining consent from data subjects, or limiting the use of sensitive information.

Output Generation

GPT and DALL-E models may generate outputs that contain personal information, such as names, addresses, or images of individuals. GDPR regulations require that such outputs are handled responsibly and avoid any breaches of privacy.
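One way to handle generated text responsibly is to run it through a post-processing filter before it reaches the user. The Python sketch below is illustrative only: `redact_output` and both regular expressions are hypothetical, deliberately simple, and would miss many kinds of personal data (the name "Jane" in the example passes through untouched).

```python
import re

# Deliberately simple, illustrative patterns; real PII detection needs far more
# than two regular expressions.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_output(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact_output("Contact Jane at jane.doe@example.com or +44 20 7946 0958."))
```

Pattern matching of this kind is a last line of defense at best. It complements, and does not replace, cleaning personal data out of the training set.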

Data Retention

GDPR sets limits on the retention of personal data. AI models that store personal data must adhere to these limitations and implement mechanisms for data deletion or anonymization after the specified retention period.
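A deletion mechanism of this kind can be as simple as a scheduled job that drops records older than the retention window. In the Python sketch below, the 365-day period, the `collected_at` field, and the `purge_expired` function are all assumptions made for illustration. The appropriate period depends on the purpose the data was collected for.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=365)  # assumed retention period, for illustration only


def purge_expired(records: list[dict], now: datetime) -> list[dict]:
    """Return only the records still inside the retention window."""
    cutoff = now - RETENTION
    return [r for r in records if r["collected_at"] >= cutoff]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "collected_at": datetime(2022, 1, 15, tzinfo=timezone.utc)},
    {"id": 2, "collected_at": datetime(2024, 3, 1, tzinfo=timezone.utc)},
]
print([r["id"] for r in purge_expired(records, now)])  # [2]
```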

Data Sharing

If AI models share personal data with third parties, they must comply with GDPR’s data transfer regulations, which include ensuring adequate safeguards and obtaining necessary consents.

“GDPR is a game-changer for AI development. It forces companies to be more transparent about their data practices and prioritize user privacy.”

Data Privacy Concerns with GPT and DALL-E

The rise of powerful AI models like GPT and DALL-E has brought immense potential for innovation, but it has also raised significant concerns regarding data privacy. These models, trained on massive datasets, can inadvertently absorb and reflect biases present in the data, leading to ethical dilemmas and potential misuse.

Potential Data Privacy Risks

The training data used to develop GPT and DALL-E can contain sensitive information about individuals, which may be unintentionally exposed during model usage. For instance, if a model is trained on text data containing personal details like names, addresses, or medical records, there is a risk that this information could be inadvertently generated in the model’s outputs.

- Exposure of Sensitive Information: The models could generate text or images that reveal personal information, potentially leading to privacy breaches. Imagine a GPT model trained on a dataset of medical records generating a story that includes details about a patient’s diagnosis or treatment, which could compromise their privacy.

- Generation of Biased Content: If the training data contains biases, the models may perpetuate these biases in their outputs. For example, a DALL-E model trained on a dataset of images depicting stereotypical gender roles could generate images that reinforce these stereotypes, potentially contributing to discrimination.

- Synthetic Data and Identity Theft: These models can generate realistic synthetic data, such as images of faces or voices, which could be used for malicious purposes like creating fake identities for identity theft or impersonation. The potential for creating highly realistic synthetic data raises serious concerns about the authenticity and integrity of information.

Ethical Considerations in Sensitive Contexts

The use of GPT and DALL-E in sensitive contexts, such as healthcare or finance, requires careful consideration of ethical implications. In these domains, data privacy is paramount, and the potential misuse of these models could have severe consequences.

- Healthcare: GPT models could be used to generate patient summaries or medical reports, but this raises concerns about the accuracy and privacy of the information generated. The models could potentially generate inaccurate or misleading information, leading to misdiagnosis or inappropriate treatment.

- Finance: DALL-E could be used to create realistic financial documents, such as invoices or bank statements, which could be used for fraudulent activities. The ability to generate convincing fake documents poses a significant risk to financial security.

Strategies for Compliance and Mitigation

Navigating the complex landscape of data privacy regulations, especially with the rise of powerful AI models like GPT and DALL-E, requires a proactive approach to compliance. Developers and organizations must prioritize data privacy and security by implementing robust strategies to ensure adherence to regulations like GDPR.

Data Anonymization and De-identification

Anonymizing and de-identifying data used to train AI models is crucial to mitigate privacy risks. This process involves removing or transforming personally identifiable information (PII) in the data, making it difficult or, ideally, impossible to link the data back to individuals.

- Differential Privacy: This technique adds calibrated random noise to query results or to the training process, preserving the overall statistical properties while limiting what can be learned about any individual data point. For example, a reported count of users in an age group might be perturbed slightly so that adding or removing one person does not noticeably change the result.

- Data Aggregation: Combining data from multiple individuals into aggregated statistics can also help anonymize information. For instance, instead of storing individual purchase histories, a model could use aggregated purchase patterns across different user groups.

- Data Masking: Replacing sensitive information with random or generic values can also contribute to anonymization. For example, replacing a user’s name with a random identifier can prevent direct identification, although the data remains linkable to the person for as long as the mapping is kept.
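The three techniques above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production implementation: the function names, the `epsilon` privacy parameter, and the `secret_key` are all hypothetical. The noise step uses the standard Laplace mechanism for a counting query, and the masking step uses a keyed hash, which is pseudonymization and not full anonymization.

```python
import hashlib
import hmac
import random


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1)."""
    scale = 1.0 / epsilon
    # The difference of two independent Exp(1) draws follows Laplace(0, 1).
    noise = scale * (rng.expovariate(1.0) - rng.expovariate(1.0))
    return true_count + noise


def aggregate_by_group(purchases: list[tuple[str, float]]) -> dict[str, float]:
    """Sum purchase amounts per user group instead of keeping per-user rows."""
    totals: dict[str, float] = {}
    for group, amount in purchases:
        totals[group] = totals.get(group, 0.0) + amount
    return totals


def mask_identifier(name: str, secret_key: bytes) -> str:
    """Replace a name with a keyed hash (pseudonymization, not anonymization)."""
    digest = hmac.new(secret_key, name.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


rng = random.Random(0)  # fixed seed only to make the demo repeatable
print(noisy_count(1000, epsilon=0.5, rng=rng))
print(aggregate_by_group([("18-24", 20.0), ("25-34", 35.5), ("18-24", 5.0)]))
print(mask_identifier("Alice Example", b"hypothetical-key"))
```

A smaller `epsilon` means more noise and stronger privacy at the cost of accuracy. Choosing it is a policy decision as much as a technical one.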

Data Governance and Access Control

Establishing robust data governance and access control mechanisms is essential for managing sensitive data in AI development. This involves defining clear policies and procedures for data collection, storage, use, and sharing.

- Data Minimization: Collect only the necessary data for the intended purpose, limiting the amount of sensitive information collected. This principle helps reduce the potential for privacy breaches.

- Role-Based Access Control (RBAC): Implement RBAC to restrict access to sensitive data based on user roles and responsibilities. This ensures that only authorized personnel can access and process sensitive information.

- Data Retention Policies: Establish clear policies for data retention and deletion, ensuring that sensitive data is deleted once it is no longer needed. This helps minimize the risk of unauthorized access or data breaches.
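As a rough illustration of the first two controls, the Python sketch below pairs a deny-by-default role check with a helper that strips a record down to the fields a given purpose needs. The roles, action names, and the `PERMISSIONS` table are hypothetical examples and not a recommended policy.

```python
from enum import Enum


class Role(Enum):
    ANALYST = "analyst"
    DATA_ENGINEER = "data_engineer"
    DPO = "dpo"  # data protection officer


# Hypothetical policy: which roles may perform which actions on personal data.
PERMISSIONS: dict[str, set[Role]] = {
    "read_aggregates": {Role.ANALYST, Role.DATA_ENGINEER, Role.DPO},
    "read_raw_records": {Role.DATA_ENGINEER, Role.DPO},
    "delete_records": {Role.DPO},
}


def is_allowed(role: Role, action: str) -> bool:
    """Deny by default: unknown actions are not permitted for anyone."""
    return role in PERMISSIONS.get(action, set())


def minimize(record: dict, needed_fields: set[str]) -> dict:
    """Data minimization: keep only the fields the stated purpose requires."""
    return {k: v for k, v in record.items() if k in needed_fields}


print(is_allowed(Role.ANALYST, "read_raw_records"))
print(minimize({"name": "A", "age": 30, "email": "a@example.com"}, {"age"}))
```

Keeping the policy in one table makes it easy to audit who can touch raw personal data, which is the point of RBAC in this setting.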

The Future of AI and Data Privacy in Europe

The General Data Protection Regulation (GDPR) and other European data privacy regulations have already had a significant impact on the development and adoption of AI technologies. These regulations are likely to continue to shape the future of AI in Europe, influencing how companies develop, deploy, and use AI systems.

Impact of European Data Privacy Regulations on AI Development and Adoption

These regulations impose strict requirements on how companies collect, process, and store personal data, which can be challenging for AI systems that often rely on large datasets for training.

For example, the GDPR’s principle of purpose limitation requires companies to collect data for specific, explicit, and legitimate purposes. This principle can be difficult to comply with for AI systems that may be trained on datasets collected for a variety of purposes.

Moreover, the GDPR’s right to erasure, or “right to be forgotten,” allows individuals to request the deletion of their personal data. This can pose a challenge for AI systems that have already been trained on datasets containing this data. Companies may need to develop new techniques for data anonymization and pseudonymization to ensure compliance with the GDPR.

Despite these challenges, the GDPR can also drive innovation in AI. The regulation’s focus on transparency and accountability can encourage the development of AI systems that are more ethical and responsible. For example, companies may need to develop new methods for explaining the decisions made by AI systems, making them more transparent and understandable to users.

Role of Regulatory Bodies in Shaping the Ethical and Legal Landscape for AI

Regulatory bodies play a crucial role in shaping the ethical and legal landscape for AI. They are responsible for enforcing data privacy regulations, developing ethical guidelines for AI development and deployment, and promoting responsible innovation.

The European Union’s (EU) data protection authorities (DPAs) are responsible for enforcing the GDPR. DPAs are actively involved in shaping the legal landscape for AI by providing guidance on how to comply with the GDPR in the context of AI. They also conduct investigations into potential breaches of the GDPR and issue fines to companies that violate the law.

In addition to the GDPR, the EU is also developing other regulations that will specifically address AI. The proposed AI Act, for example, aims to establish a comprehensive regulatory framework for AI, addressing risks associated with high-risk AI systems. This legislation could have a significant impact on the future of AI in Europe, setting standards for ethical and responsible AI development and deployment.

Comparison of AI Regulation in Europe with Other Regions

Europe is considered a leader in data privacy regulation, with the GDPR setting a high bar for data protection. However, other regions are also developing their own AI regulations.

| Region | Key Regulations | Focus |
|---|---|---|
| Europe | GDPR, AI Act (proposed) | Data privacy, ethical AI |
| United States | No comprehensive federal AI law | Industry self-regulation, sector-specific laws |
| China | Cybersecurity Law, Personal Information Protection Law | Data security, national security |

As the table shows, there are significant differences in the approach to AI regulation across regions. Europe’s focus on data privacy and ethical AI is distinct from the United States’ approach of relying on industry self-regulation and sector-specific laws. China, on the other hand, prioritizes data security and national security.

While there are differences in the regulatory landscape, there is also potential for convergence. For example, the EU’s proposed AI Act could influence the development of AI regulations in other regions. As AI technologies continue to evolve, it is likely that more countries will develop their own AI regulations, potentially leading to a more globally harmonized approach to AI governance.