Prisoner’s Dilemma: AI Makes Humans More Cooperative. This intriguing idea challenges our understanding of human behavior and of what artificial intelligence can do. The Prisoner’s Dilemma, a classic game theory scenario, highlights the conflict between individual self-interest and the potential for mutual benefit.

This game demonstrates that even when cooperation would lead to a better outcome for everyone, individuals may choose to act in their own self-interest, leading to a less desirable outcome. But what happens when we introduce AI into the equation?

Could AI help us overcome this inherent tension and foster greater cooperation among humans?

This article delves into the fascinating world of AI and its impact on human cooperation, exploring how AI algorithms can be used to promote trust, facilitate communication, and ultimately, encourage mutually beneficial outcomes. We’ll analyze the strategies AI employs, comparing them to human decision-making in the Prisoner’s Dilemma.

We’ll also discuss the ethical considerations surrounding AI’s role in influencing human behavior and the potential for AI to manipulate or exploit humans in the name of cooperation. Join us as we navigate this complex and intriguing landscape, where the lines between human and machine blur, and the future of cooperation hangs in the balance.

The Prisoner’s Dilemma

The Prisoner’s Dilemma is a foundational concept in game theory that explores the tension between individual self-interest and the potential for collective benefit. This classic scenario helps us understand why cooperation can be difficult, even when it’s in everyone’s best interest.


The Classic Scenario

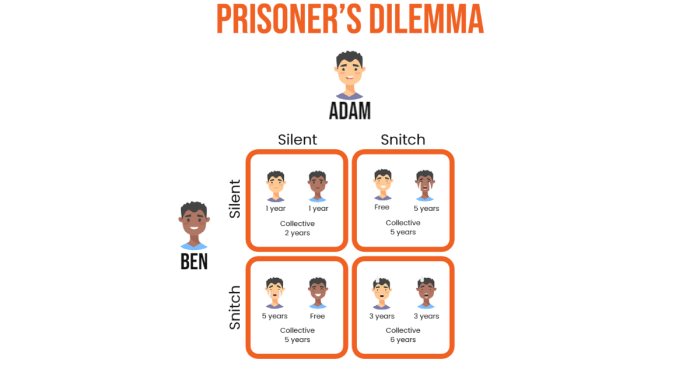

The Prisoner’s Dilemma involves two suspects, let’s call them Alice and Bob, who are arrested for a crime. They are separated and interrogated by the police. Each suspect has two options: to cooperate with their partner by remaining silent, or to defect by confessing and implicating the other.

The police offer a deal: if one suspect confesses and the other remains silent, the confessor goes free, and the silent suspect receives a heavy sentence. If both suspects confess, they both receive a moderate sentence. If both remain silent, they both receive a light sentence.

The dilemma arises because each suspect, reasoning only about their own interest, is tempted to confess, yet if both confess they end up worse off than if both had stayed silent.

Nash Equilibrium

The Nash Equilibrium is a concept in game theory where no player can improve their outcome by unilaterally changing their strategy, assuming the other players’ strategies remain the same. In the Prisoner’s Dilemma, the Nash Equilibrium is for both suspects to confess.

This is because, regardless of what the other suspect does, confessing always yields a better outcome for the individual. If the other suspect remains silent, confessing leads to freedom, while remaining silent leads to a light sentence. If the other suspect confesses, confessing leads to a moderate sentence, while remaining silent leads to a heavy sentence.
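To make this reasoning concrete, the short Python sketch below checks every outcome for a Nash equilibrium and confirms that confessing is a dominant strategy. The sentence lengths are assumptions chosen only for illustration (0 years for going free, 1 for a light sentence, 5 for a moderate one, 10 for a heavy one); any numbers with the same ordering give the same result.

```python
from itertools import product

# Assumed sentence lengths in years (lower is better), for illustration only.
FREE, LIGHT, MODERATE, HEAVY = 0, 1, 5, 10

# YEARS[(alice_move, bob_move)] = (alice_years, bob_years)
YEARS = {
    ("silent", "silent"): (LIGHT, LIGHT),
    ("silent", "confess"): (HEAVY, FREE),
    ("confess", "silent"): (FREE, HEAVY),
    ("confess", "confess"): (MODERATE, MODERATE),
}
MOVES = ("silent", "confess")


def is_nash(alice: str, bob: str) -> bool:
    """True if neither suspect can shorten their own sentence by switching alone."""
    a_years, b_years = YEARS[(alice, bob)]
    alice_can_improve = any(YEARS[(alt, bob)][0] < a_years for alt in MOVES)
    bob_can_improve = any(YEARS[(alice, alt)][1] < b_years for alt in MOVES)
    return not (alice_can_improve or bob_can_improve)


def confess_dominates() -> bool:
    """True if confessing gives Alice fewer years whatever Bob does."""
    return all(YEARS[("confess", b)][0] < YEARS[("silent", b)][0] for b in MOVES)


equilibria = [pair for pair in product(MOVES, MOVES) if is_nash(*pair)]
print(equilibria)           # [('confess', 'confess')]
print(confess_dominates())  # True
```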

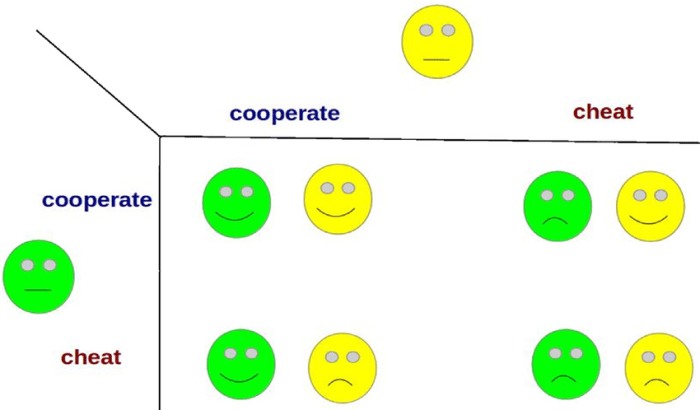

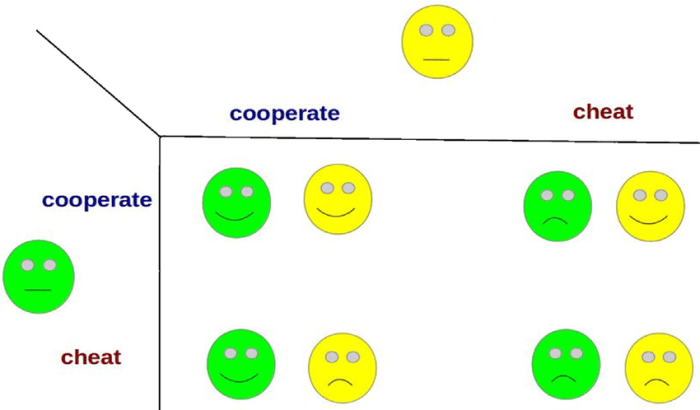

Outcomes of Cooperation and Defection

The Prisoner’s Dilemma highlights the difference between the outcomes of cooperation and defection. If both suspects cooperate by remaining silent, they each receive a light sentence, the best result for the pair as a whole. If both defect by confessing, they each receive a moderate sentence, which is worse for each of them than mutual cooperation.

This shows that individual self-interest can lead to a suboptimal outcome for everyone involved.
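A quick check makes the point precise. Using the same illustrative sentence lengths as above (assumed values of 0, 1, 5, and 10 years), the sketch below marks an outcome as Pareto-dominated when some other outcome leaves both suspects no worse off and at least one strictly better off. Mutual confession is the only outcome with that property: switching both suspects to silence shortens both sentences.

```python
# Assumed sentence lengths in years (lower is better), for illustration only.
FREE, LIGHT, MODERATE, HEAVY = 0, 1, 5, 10

# YEARS[(alice_move, bob_move)] = (alice_years, bob_years)
YEARS = {
    ("silent", "silent"): (LIGHT, LIGHT),
    ("silent", "confess"): (HEAVY, FREE),
    ("confess", "silent"): (FREE, HEAVY),
    ("confess", "confess"): (MODERATE, MODERATE),
}


def pareto_dominated(outcome: tuple) -> bool:
    """True if another outcome leaves both suspects no worse off and one strictly better off."""
    a, b = YEARS[outcome]
    return any(
        a2 <= a and b2 <= b and (a2 < a or b2 < b)
        for other, (a2, b2) in YEARS.items()
        if other != outcome
    )


for outcome, (a, b) in YEARS.items():
    print(outcome, "years:", (a, b), "Pareto-dominated:", pareto_dominated(outcome))
```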

AI’s Approach to the Prisoner’s Dilemma

The Prisoner’s Dilemma is a classic game theory scenario that explores the tension between cooperation and self-interest. AI algorithms have been applied to it to study how agents can learn to cooperate and achieve better outcomes, and AI researchers use the game as a testbed for developing and evaluating cooperative strategies.

By simulating multiple rounds of the game, they can observe how AI agents learn and adapt their behavior based on past interactions.

Tit-for-Tat and Other Cooperative Strategies

AI agents often employ strategies that aim to achieve cooperation in the Prisoner’s Dilemma. One such strategy is Tit-for-Tat, which involves mirroring the opponent’s previous move. If the opponent cooperates, the agent cooperates; if the opponent defects, the agent defects.
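A minimal Python sketch of Tit-for-Tat in an iterated game is shown below. It assumes the conventional point values used in Axelrod's tournaments (3 each for mutual cooperation, 1 each for mutual defection, 5 for defecting against a cooperator, 0 for being exploited), where higher is better. The helper names `play` and `always_defect` are illustrative, not part of any library.

```python
from typing import Callable, List, Tuple

C, D = "C", "D"  # cooperate, defect

# Conventional points per round (higher is better): T=5, R=3, P=1, S=0.
PAYOFF = {
    (C, C): (3, 3),
    (C, D): (0, 5),
    (D, C): (5, 0),
    (D, D): (1, 1),
}

# A strategy sees its own history and the opponent's history and returns a move.
Strategy = Callable[[List[str], List[str]], str]


def tit_for_tat(mine: List[str], theirs: List[str]) -> str:
    """Cooperate first, then copy the opponent's previous move."""
    return theirs[-1] if theirs else C


def always_defect(mine: List[str], theirs: List[str]) -> str:
    """Defect every round, regardless of history."""
    return D


def play(s1: Strategy, s2: Strategy, rounds: int) -> Tuple[int, int, List[str], List[str]]:
    """Play an iterated match and return both scores and both move histories."""
    h1: List[str] = []
    h2: List[str] = []
    score1 = score2 = 0
    for _ in range(rounds):
        m1, m2 = s1(h1, h2), s2(h2, h1)  # each player sees (own, opponent's) history
        p1, p2 = PAYOFF[(m1, m2)]
        score1 += p1
        score2 += p2
        h1.append(m1)
        h2.append(m2)
    return score1, score2, h1, h2


print(play(tit_for_tat, tit_for_tat, 5)[:2])    # (15, 15)
print(play(tit_for_tat, always_defect, 5)[:2])  # (4, 9)
```

Against itself, Tit-for-Tat cooperates in every round; against a constant defector it is exploited only in the first round and then defects in turn.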

This strategy has proven remarkably successful over repeated rounds of the game, because it rewards cooperation while punishing defection. Other approaches include:

- Randomized Strategies: These strategies involve randomly choosing between cooperation and defection with a certain probability. This can help agents avoid falling into predictable patterns that opponents might exploit.

- Learning Algorithms: AI agents can be trained with reinforcement learning algorithms to learn optimal strategies from the outcomes of past interactions. These algorithms let agents adapt their behavior over time, potentially discovering new cooperative strategies.

- Reputation-Based Strategies: In scenarios where agents interact repeatedly, they can develop reputations based on their past behavior. Agents might choose to cooperate with those who have a history of cooperation and defect against those who have a history of defection.
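The randomized and reputation-based ideas above can be sketched in a few lines of Python. The `ReputationBoard` class, the 0.5 cooperation threshold, and the rule that newcomers start with a clean record are all hypothetical choices made for illustration, not a standard API.

```python
import random
from typing import Dict, List

C, D = "C", "D"


def randomized(p_cooperate: float, rng: random.Random) -> str:
    """Cooperate with probability p_cooperate, otherwise defect."""
    return C if rng.random() < p_cooperate else D


class ReputationBoard:
    """Hypothetical shared record of each agent's past moves (illustration only)."""

    def __init__(self) -> None:
        self.history: Dict[str, List[str]] = {}

    def record(self, agent: str, move: str) -> None:
        self.history.setdefault(agent, []).append(move)

    def cooperation_rate(self, agent: str) -> float:
        moves = self.history.get(agent, [])
        # Assumption: agents with no record yet get the benefit of the doubt.
        return 1.0 if not moves else moves.count(C) / len(moves)


def reputation_based(board: ReputationBoard, partner: str, threshold: float = 0.5) -> str:
    """Cooperate only with partners whose recorded cooperation rate meets the threshold."""
    return C if board.cooperation_rate(partner) >= threshold else D


board = ReputationBoard()
for move in [C, C, D, C]:
    board.record("alice", move)
for move in [D, D, C, D]:
    board.record("bob", move)

print(reputation_based(board, "alice"))     # C (cooperation rate 0.75)
print(reputation_based(board, "bob"))       # D (cooperation rate 0.25)
print(reputation_based(board, "newcomer"))  # C (no record yet)

rng = random.Random(0)
moves = [randomized(0.7, rng) for _ in range(1000)]
print(moves.count(C) / len(moves))  # roughly 0.7
```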

Examples of Successful AI Cooperation in Prisoner’s Dilemma

Several experiments have shown computer agents achieving cooperation in Prisoner’s Dilemma scenarios.

One notable example is the “Iterated Prisoner’s Dilemma Tournament” organized by Robert Axelrod in 1980. The tournament involved various computer programs competing against each other in repeated rounds of the Prisoner’s Dilemma. The Tit-for-Tat strategy, submitted by Anatol Rapoport, emerged as the most successful strategy, demonstrating the power of simple, cooperative rules.
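Axelrod's format can be imitated with a tiny round-robin tournament. The field below is a hypothetical four-entrant one, not the programs actually submitted to his tournament. It is scored with the conventional payoffs (T=5, R=3, P=1, S=0) over an assumed 200 rounds per match, and each strategy also plays a copy of itself. The "grudger" cooperates until its opponent defects once, then defects forever.

```python
from typing import Callable, Dict, List

C, D = "C", "D"
# Conventional points per round (higher is better).
PAYOFF = {(C, C): (3, 3), (C, D): (0, 5), (D, C): (5, 0), (D, D): (1, 1)}

# In this sketch a strategy sees only the opponent's past moves.
Strategy = Callable[[List[str]], str]

STRATEGIES: Dict[str, Strategy] = {
    "tit_for_tat": lambda theirs: theirs[-1] if theirs else C,
    "always_cooperate": lambda theirs: C,
    "always_defect": lambda theirs: D,
    "grudger": lambda theirs: D if D in theirs else C,
}


def match(a: Strategy, b: Strategy, rounds: int) -> int:
    """Return a's total score against b over `rounds` rounds."""
    seen_by_a: List[str] = []  # b's past moves
    seen_by_b: List[str] = []  # a's past moves
    score = 0
    for _ in range(rounds):
        move_a, move_b = a(seen_by_a), b(seen_by_b)
        score += PAYOFF[(move_a, move_b)][0]
        seen_by_a.append(move_b)
        seen_by_b.append(move_a)
    return score


def round_robin(rounds: int = 200) -> Dict[str, int]:
    """Every strategy plays every strategy, itself included; scores are summed."""
    return {
        name: sum(match(strat, other, rounds) for other in STRATEGIES.values())
        for name, strat in STRATEGIES.items()
    }


for name, total in sorted(round_robin().items(), key=lambda kv: -kv[1]):
    print(f"{name:17} {total}")
```

In this small field, Tit-for-Tat ties with the grudger at 1999 points, always-cooperate scores 1800, and always-defect finishes last with 1608, even though always-defect never scores less than its opponent in any single match. Rankings like this depend heavily on which strategies are entered.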

Other examples include agents trained with reinforcement learning that have learned to cooperate in more complex scenarios with multiple agents and varying payoffs. Results like these suggest that AI could support cooperation in real-world settings such as multi-agent systems and economic negotiations.

Human Behavior in the Prisoner’s Dilemma

The Prisoner’s Dilemma is a fascinating game theory scenario that reveals the complexities of human decision-making, especially when it comes to cooperation. While AI strategies are often based on logic and optimization, human behavior is influenced by a wide range of factors, including emotions, social norms, and individual beliefs.

This section explores how humans navigate the Prisoner’s Dilemma and the factors that contribute to their choices.

Comparison of Human and AI Strategies

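Humans and AI agents approach the Prisoner’s Dilemma differently. An AI agent programmed purely to maximize its own payoff in a single round will defect, since defection is the dominant strategy; this reasoning assumes that the other player will also act in their own self-interest. Even cooperative AI strategies such as Tit-for-Tat cooperate only conditionally, as a calculated response to the other player’s moves.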

Conversely, human behavior is more nuanced and can be influenced by factors beyond pure self-interest. Humans are more likely to consider the potential consequences of their actions on the other player and the overall outcome of the game. This leads to a greater likelihood of cooperation, especially in repeated interactions.
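One standard way to see why repeated interactions matter is the textbook grim-trigger argument. Suppose a partner cooperates until the first defection and defects forever afterwards, and future rounds are discounted by a factor δ (0 ≤ δ < 1). Cooperating forever is then worth R/(1 − δ), while defecting now is worth T + δP/(1 − δ), so cooperation pays when δ ≥ (T − R)/(T − P). The sketch below checks this with the conventional payoffs T=5, R=3, P=1; the discount factor and the grim-trigger partner are modeling assumptions, not claims made elsewhere in this article.

```python
from fractions import Fraction

# Conventional payoffs (assumed): temptation, reward, punishment.
T, R, P = 5, 3, 1


def value_of_cooperating(delta: Fraction) -> Fraction:
    """Discounted value of earning R in every round forever."""
    return R / (1 - delta)


def value_of_defecting(delta: Fraction) -> Fraction:
    """T now, then P forever once a grim-trigger partner retaliates."""
    return T + delta * P / (1 - delta)


def cooperation_sustainable(delta: Fraction) -> bool:
    """True if cooperating forever is worth at least as much as defecting now."""
    return value_of_cooperating(delta) >= value_of_defecting(delta)


critical = Fraction(T - R, T - P)
print(critical)  # 1/2
for delta in (Fraction(1, 4), Fraction(1, 2), Fraction(9, 10)):
    print(delta, cooperation_sustainable(delta))
```

Exact fractions are used so that the boundary case δ = 1/2, where both values equal 6, compares without floating-point error.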

Factors Influencing Human Cooperation

- Trust: Trust plays a crucial role in human cooperation. If individuals believe that the other player will cooperate, they are more likely to do the same. This trust can be built through repeated interactions, shared experiences, and a sense of shared goals.

- Reputation: The reputation of the players involved can also influence their decision-making. If an individual has a reputation for being cooperative, others are more likely to trust them and reciprocate their cooperation. Conversely, a reputation for being selfish can lead to a lack of trust and a higher likelihood of defection.

- Social Norms: Social norms and expectations can also shape human behavior in the Prisoner’s Dilemma. In many cultures, cooperation is valued, and individuals are expected to act in a way that benefits the group. These norms can create pressure to cooperate, even when it might not be in an individual’s immediate self-interest.

Empathy and Altruism

Empathy and altruism can also play a role in human cooperation. When individuals feel empathy for the other player, they may be more likely to consider their well-being and choose to cooperate. This is particularly true in situations where individuals have a strong sense of fairness or a desire to see the other player succeed.

Altruism, the act of helping others without expecting anything in return, can also motivate individuals to cooperate, even if it means sacrificing their own gains.
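A common way to model empathy is to let each player care about the other's payoff with some weight α, so that a player's utility is their own points plus α times the partner's points. With the conventional payoffs (T=5, R=3, P=1, S=0), this simple model predicts that cooperation becomes the best reply to both of the partner's moves once α ≥ 2/3. The weight α and the linear form are modeling assumptions for illustration, not empirical findings.

```python
from fractions import Fraction

C, D = "C", "D"
# Conventional points per round (higher is better).
PAYOFF = {(C, C): (3, 3), (C, D): (0, 5), (D, C): (5, 0), (D, D): (1, 1)}


def utility(my_move: str, their_move: str, alpha: Fraction) -> Fraction:
    """Own points plus alpha times the partner's points (assumed linear model)."""
    mine, theirs = PAYOFF[(my_move, their_move)]
    return mine + alpha * theirs


def best_response(their_move: str, alpha: Fraction) -> str:
    """Best reply to the partner's move; ties are broken in favor of cooperation."""
    return C if utility(C, their_move, alpha) >= utility(D, their_move, alpha) else D


def cooperation_dominant(alpha: Fraction) -> bool:
    """True if cooperating is a best reply whatever the partner does."""
    return all(best_response(move, alpha) == C for move in (C, D))


for alpha in (Fraction(0), Fraction(1, 2), Fraction(2, 3), Fraction(1)):
    print(alpha, best_response(C, alpha), best_response(D, alpha), cooperation_dominant(alpha))
```

With α = 0 the player is purely self-interested and always defects; below 2/3, the temptation to defect against a cooperator (5 points versus 3 + 3α) still wins.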

The Potential for AI to Foster Human Cooperation

The Prisoner’s Dilemma, a classic game theory problem, highlights the challenges of cooperation even when it’s mutually beneficial. However, AI’s potential to facilitate communication and trust-building opens exciting possibilities for overcoming this challenge and promoting human cooperation.

AI’s Role in Facilitating Communication and Trust

AI could play a significant role in fostering cooperation by improving communication and trust between individuals and groups. AI-powered tools can analyze large amounts of data to identify patterns and predict outcomes, which may help people understand each other’s perspectives and motivations.

This enhanced understanding can lead to more constructive dialogue and less mistrust. For example, AI-powered chatbots could be used to facilitate negotiations and conflict resolution: by analyzing the conversation and picking up on the participants’ emotional cues, such a system might steer them toward a mutually beneficial agreement.

Additionally, AI can be used to create platforms for anonymous communication, where individuals can share their ideas and concerns without fear of reprisal, leading to greater transparency and trust.

Ethical Considerations in AI and Cooperation

The integration of AI into human society raises profound ethical questions, particularly when considering its potential to influence human behavior and foster cooperation. While AI can be a powerful tool for promoting collaboration, its use also presents risks that require careful consideration and mitigation.

The Potential for AI to Manipulate or Exploit Humans

The use of AI to influence human behavior can raise concerns about manipulation and exploitation. AI algorithms can be designed to exploit human biases and vulnerabilities, leading to decisions that are not in the best interests of individuals or society as a whole.

For instance, AI-powered social media platforms can personalize content to create echo chambers, reinforcing existing biases and hindering critical thinking. Additionally, AI-driven advertising can target individuals with highly personalized messages, potentially manipulating their choices and exploiting their vulnerabilities.

Recommendations for Ensuring that AI is Used Ethically to Promote Human Cooperation

To ensure that AI is used ethically to promote human cooperation, it is crucial to establish robust ethical frameworks and guidelines. These frameworks should address key considerations such as:

- Transparency and Explainability: AI systems should be transparent and explainable, allowing users to understand how decisions are made and to identify potential biases. This transparency is essential for building trust and ensuring accountability.

- Fairness and Non-discrimination: AI systems should be designed to be fair and non-discriminatory, avoiding biases that could perpetuate existing inequalities. This requires careful consideration of the data sets used to train AI models and ongoing monitoring of their performance.

- Privacy and Data Security: The use of AI raises significant concerns about privacy and data security. Robust measures should be implemented to protect sensitive data and prevent unauthorized access or misuse.

- Human Oversight and Control: AI systems should be designed with appropriate human oversight and control mechanisms to ensure that they are used responsibly and ethically. This includes establishing clear guidelines for human intervention in decision-making processes.

- Public Engagement and Dialogue: Open and inclusive public engagement is essential for developing and implementing ethical AI frameworks. This dialogue should involve diverse stakeholders, including experts, policymakers, and members of the public.