Microsoft’s AI chatbot has hallucinated election information, an incident that highlights the pitfalls of relying on artificial intelligence to disseminate factual information. The chatbot, designed to provide helpful and informative responses, fabricated details about a recent election, raising concerns about the reliability of AI-generated content.

The incident underscores the importance of understanding the limitations of AI and the need for robust fact-checking mechanisms to prevent the spread of misinformation.

AI hallucination, the phenomenon where AI models generate plausible-sounding but incorrect information, is a growing concern. These models, trained on massive datasets, can produce outputs that are factually inaccurate or misleading. The issue arises because language models generate text by predicting likely word sequences rather than by looking up verified facts, and because biases and errors in the training data carry over into their outputs.

The chatbot’s inaccurate claims about the election serve as a stark reminder of the need for careful consideration of AI’s limitations and the potential for unintended consequences.

Microsoft’s AI Chatbot Hallucinates Election Information

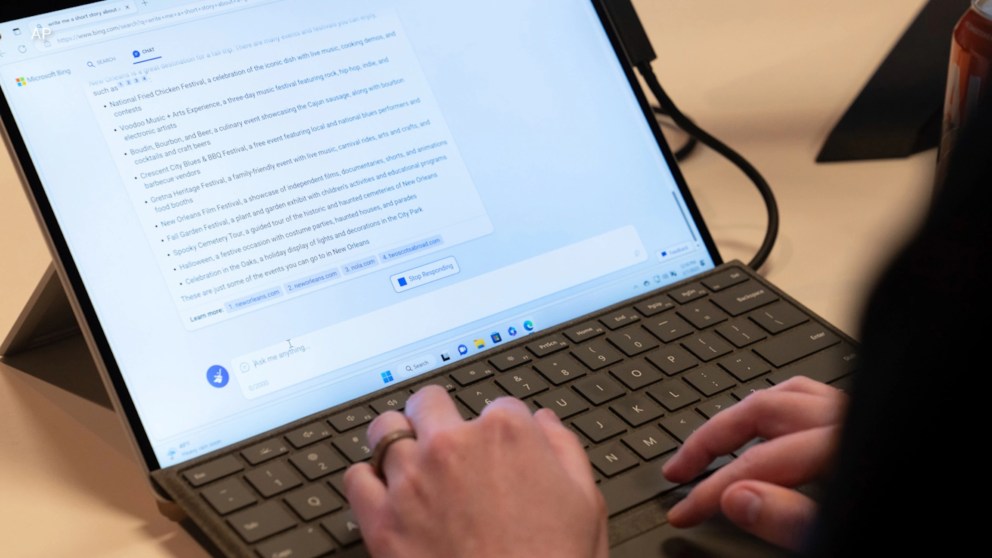

In a recent incident that has raised concerns about the limitations of artificial intelligence, Microsoft’s AI chatbot, known as Bing, was found to be generating inaccurate information related to the 2020 US presidential election. This incident highlights the importance of understanding the potential for AI to produce false or misleading content, especially when dealing with sensitive topics like politics.

Bing, powered by a large language model, is designed to provide users with comprehensive and informative responses to a wide range of queries.

The chatbot draws on vast amounts of data to generate text, translate languages, write different kinds of creative content, and answer users’ questions in an informative way. However, this incident demonstrates that even with advanced AI technology, there are still significant challenges in ensuring the accuracy and reliability of the information generated.

Inaccurate Claims Made by the Chatbot

The chatbot’s inaccurate claims regarding the 2020 election were particularly concerning. For instance, it stated that Joe Biden won the election by a “landslide,” a characterization the results do not support. Biden won the Electoral College 306 to 232 and the national popular vote by roughly 4.5 percentage points: a clear victory, but one decided by narrow margins in several battleground states.

- The chatbot claimed that Biden received more votes than any other presidential candidate in history. Unlike the other claims listed here, this one is accurate: Biden received more than 81 million votes in 2020, compared with about 69.5 million for Barack Obama in 2008.

- The chatbot asserted that there was widespread voter fraud, a claim that has been repeatedly debunked by numerous independent investigations.

Hallucination in AI

AI hallucination, a phenomenon where AI models generate incorrect or nonsensical outputs, is a growing concern in the field of artificial intelligence. It’s crucial to understand the underlying causes and potential solutions to mitigate this issue.

Causes of AI Hallucination

AI hallucination can occur due to several factors, including:

- Inadequate Training Data: If the data used to train an AI model is incomplete, biased, or contains errors, the model may learn incorrect patterns and generate inaccurate outputs. For instance, if a language model is trained on a dataset that includes a disproportionate amount of text from a specific political ideology, it might generate biased or misleading information.

- Model Architecture Limitations: The architecture of an AI model can also contribute to hallucination. Complex models with many layers and parameters may struggle to accurately capture the nuances of language and data, leading to incorrect outputs. This is particularly relevant in language models, where the complex relationships between words and their meanings can be challenging to represent accurately.

- Overfitting: When an AI model is trained too closely on its training data, it may become overly specialized and fail to generalize to new data. This can lead to the model generating outputs that are accurate for the training data but inaccurate for real-world scenarios.

For example, a language model trained on a dataset of news articles about a specific event might struggle to understand and generate text about a different event.
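
The gap between training accuracy and accuracy on new data can be illustrated with a toy example. The sketch below is not a language model: it is a deliberately simple classifier on synthetic data, and every name in it (`make_data`, `memorizer_predict`, `accuracy`) is hypothetical. It assumes labels that are noisy 20% of the time, so a model that memorizes its training set also memorizes the noise.

```python
import random


def make_data(n, rng):
    """Return n (x, label) pairs; label is 1 if x > 0.5, flipped 20% of the time."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < 0.2:  # label noise (an assumption of this sketch)
            label = 1 - label
        data.append((x, label))
    return data


def memorizer_predict(train, x):
    """Overfit model: copy the label of the nearest training point."""
    return min(train, key=lambda point: abs(point[0] - x))[1]


def accuracy(predict, data):
    """Fraction of examples for which predict(x) equals the label."""
    return sum(predict(x) == label for x, label in data) / len(data)


if __name__ == "__main__":
    rng = random.Random(0)
    train = make_data(200, rng)
    unseen = make_data(1000, rng)
    model = lambda x: memorizer_predict(train, x)
    print("accuracy on training data:", accuracy(model, train))
    print("accuracy on unseen data:  ", accuracy(model, unseen))
```

The memorizing model reproduces its training labels exactly, including the noisy ones, so its training accuracy looks perfect while its accuracy on unseen points is noticeably lower. That difference is the signature of overfitting described above.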

Addressing AI Hallucination

Hallucination poses a significant challenge to the reliability and trustworthiness of AI systems. As these systems become increasingly integrated into various aspects of our lives, it’s crucial to develop strategies to mitigate the issue and ensure the accuracy of their outputs.

Strategies for Mitigating AI Hallucination

Mitigating AI hallucination requires a multi-faceted approach, encompassing both technical and human-centric solutions.

- Improved Training Data: Providing AI models with high-quality, diverse, and factual training data is fundamental. This ensures the models learn accurate patterns and associations, reducing the likelihood of generating fabricated information.

- Reinforcement Learning from Human Feedback (RLHF): This technique involves training AI models to align with human preferences and values. By providing feedback on generated outputs, humans can guide the model to produce more accurate and truthful responses.

- Fine-tuning and Model Validation: After training, AI models should be fine-tuned on specific tasks and domains to improve their accuracy and reduce hallucination. Rigorous model validation, using diverse datasets and human evaluation, helps identify and address potential issues.

- Fact-Checking Mechanisms: Integrating fact-checking mechanisms into AI systems can help identify and correct inaccuracies. These mechanisms can cross-reference generated information with external knowledge bases, compare it with other sources, or utilize logic-based reasoning to assess its plausibility.
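
As a rough illustration of the knowledge-base check mentioned in the last item, the sketch below compares a structured claim against a small reference store. It is a minimal sketch under strong assumptions: the names `Claim`, `REFERENCE_FACTS`, and `check_claim` are hypothetical, the store is a hard-coded dictionary rather than a real knowledge base, and the hard step of extracting claims from free text is assumed to have happened already.

```python
from dataclasses import dataclass

# Hypothetical reference store. A real system would query a curated,
# regularly updated knowledge base instead of a hard-coded dict.
REFERENCE_FACTS = {
    ("2020 US presidential election", "electoral votes for Joe Biden"): "306",
    ("2020 US presidential election", "electoral votes for Donald Trump"): "232",
}


@dataclass(frozen=True)
class Claim:
    """A single factual claim, already extracted from the model's answer."""
    topic: str
    attribute: str
    value: str


def check_claim(claim, facts=REFERENCE_FACTS):
    """Return 'supported', 'contradicted', or 'unverified' for one claim."""
    key = (claim.topic, claim.attribute)
    if key not in facts:
        # No reference entry: the claim can be neither confirmed nor refuted.
        return "unverified"
    return "supported" if facts[key] == claim.value else "contradicted"
```

Keeping "unverified" separate from "contradicted" is a deliberate choice in this sketch: a missing reference entry says nothing about whether the claim is true, so it is better routed to human review than silently accepted or rejected.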

Importance of Fact-Checking and Verification

Fact-checking and verification play a crucial role in ensuring the reliability of AI-generated information. This involves:

- Cross-referencing Information: Comparing AI-generated information with multiple reputable sources helps verify its accuracy and identify potential discrepancies.

- Evaluating Source Credibility: Assessing the credibility of the sources used by the AI model is essential. This involves considering the source’s reputation, expertise, and potential biases.

- Logical Reasoning: Applying logical reasoning to assess the plausibility of the generated information can help identify inconsistencies or contradictions.
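
Cross-referencing against several sources can be sketched as a simple agreement check. The function below is hypothetical (`cross_reference` and its `min_sources` parameter are not taken from any real tool) and assumes each source has already been reduced to a single comparable value, with `None` meaning the source says nothing on the point.

```python
from collections import Counter


def cross_reference(claimed, source_values, min_sources=2):
    """Compare a claimed value with the values reported by independent sources.

    Returns 'supported', 'contradicted', 'disputed', or 'unverified'.
    """
    counts = Counter(value for value in source_values if value is not None)
    if not counts:
        return "unverified"
    ranked = counts.most_common()
    top_value, top_count = ranked[0]
    if top_count < min_sources:
        # Too few sources agree with each other to draw a conclusion.
        return "unverified"
    if len(ranked) > 1 and ranked[1][1] == top_count:
        # The sources are evenly split between different values.
        return "disputed"
    return "supported" if top_value == claimed else "contradicted"
```

This sketch treats every source as equally credible. Weighting sources by reputation and expertise, as the second item above suggests, would be a natural extension.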

Evaluating the Reliability of AI-Generated Information

Evaluating the reliability of AI-generated information requires a comprehensive approach that considers both the model’s capabilities and the context of the information.

- Model Performance Metrics: Evaluating the model’s performance on various benchmark datasets and tasks provides insights into its accuracy and potential for hallucination.

- Transparency and Explainability: Understanding how the AI model generates its outputs is crucial for assessing its reliability. Transparency in model architecture and decision-making processes allows for better evaluation.

- User Feedback and Reporting: Encouraging users to provide feedback on AI-generated information, including reporting inaccuracies, can help identify and address issues.
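
One way to turn the performance-metrics idea into numbers is to score a model’s answers against a labeled reference set. The sketch below is illustrative only: `normalize` and `score` are hypothetical names, and it assumes exact match after normalization is an acceptable test of correctness, which is a crude stand-in for the human or rubric-based grading a real evaluation would need.

```python
def normalize(text):
    """Lowercase the text and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def score(records):
    """Score (model_answer, reference_answer) pairs.

    model_answer is None when the model declined to answer. An answer that
    was given but does not match the reference counts as a hallucination.
    """
    total = len(records)
    if total == 0:
        raise ValueError("no records to score")
    abstained = sum(1 for answer, _ in records if answer is None)
    correct = sum(
        1
        for answer, reference in records
        if answer is not None and normalize(answer) == normalize(reference)
    )
    wrong = total - abstained - correct
    return {
        "accuracy": correct / total,
        "hallucination_rate": wrong / total,
        "abstention_rate": abstained / total,
    }
```

Reporting abstentions separately matters here: a model that declines to answer when unsure lowers its accuracy without raising its hallucination rate, and the two behaviors carry very different risks for a topic like elections.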

Future Considerations

The recent incident of Microsoft’s AI chatbot hallucinating election information underscores the critical need to address the challenges posed by AI hallucination. As AI chatbots continue to evolve, it’s crucial to anticipate and mitigate potential risks associated with misinformation.

Ethical Considerations in AI Deployment

Ethical considerations play a pivotal role in the responsible development and deployment of AI models. It is essential to ensure that AI systems are developed and used in a way that respects human values and avoids perpetuating biases or harmful outcomes.

This involves:

- Transparency and Explainability: Developing AI models that are transparent in their decision-making processes, allowing users to understand how the AI arrived at its conclusions. This helps build trust and accountability.

- Bias Mitigation: Ensuring that AI models are trained on diverse and representative datasets to minimize biases and prevent discriminatory outcomes. This requires careful selection of training data and the development of techniques to identify and address biases.

- Data Privacy and Security: Protecting the privacy and security of data used to train and operate AI models. This involves implementing robust security measures and adhering to data privacy regulations.

- Human Oversight and Control: Establishing clear mechanisms for human oversight and control over AI systems. This ensures that AI systems are used responsibly and that humans retain ultimate control over their applications.

Potential Solutions to AI Hallucination

Addressing AI hallucination requires a multifaceted approach involving advancements in AI technology, robust evaluation methods, and responsible deployment practices.