LLMs: Generative AI’s New Weapon in Information Warfare

It’s a chilling thought, isn’t it? The power of language, once a tool for communication and understanding, is now wielded as a weapon in the digital age. Large language models (LLMs), with their uncanny ability to generate human-like text, have opened a Pandora’s box of possibilities, both fascinating and frightening.

Imagine AI crafting convincing disinformation campaigns, manipulating public opinion with ease, and blurring the lines between truth and fiction. This is the reality we face as LLMs become increasingly sophisticated.

This blog delves into the dark side of LLMs, exploring their potential as weapons in information warfare. We’ll examine how these powerful tools can be used to spread misinformation, manipulate public opinion, and sow discord. We’ll also look at the ethical implications of weaponizing LLMs and explore countermeasures to combat this growing threat.

Introduction

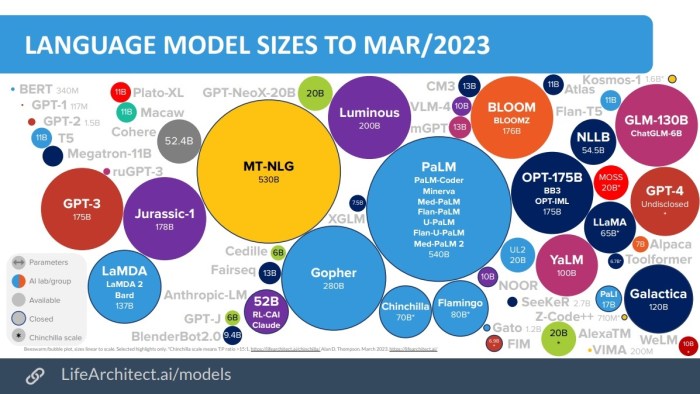

Large language models (LLMs) are a type of artificial intelligence (AI) that has advanced rapidly in recent years, demonstrating remarkable capabilities in natural language processing. These models, trained on vast amounts of text data, can generate human-like text, translate languages, write different kinds of creative content, and answer questions in an informative way. LLMs are the driving force behind many AI applications we use today, from chatbots to text summarizers.

Generative AI, a subset of AI, focuses on creating new content, such as text, images, audio, video, and code. It leverages algorithms to learn patterns from existing data and generate new data that shares similar characteristics. Generative AI has found widespread applications in various fields, including art generation, music composition, and software development.

Information warfare refers to the use of information as a weapon to achieve strategic goals. This concept has evolved over time, from traditional propaganda to modern cyberattacks and the manipulation of online narratives.

The history of information warfare dates back to ancient times, with examples such as the use of rumors and disinformation during wars. Today, information warfare is a complex and multifaceted phenomenon, encompassing a wide range of tactics and strategies.

The Capabilities of LLMs

LLMs are powerful tools with diverse capabilities, enabling them to perform various tasks related to language and information processing. These capabilities include:

- Text Generation: LLMs can generate human-like text, writing stories, articles, poems, and even code.

- Language Translation: LLMs can translate text between multiple languages with high accuracy.

- Text Summarization: LLMs can condense large amounts of text into concise summaries, highlighting key information.

- Question Answering: LLMs can answer your questions based on their training data, providing information and insights.

- Code Generation: LLMs can generate code in various programming languages, assisting developers in writing software.

The Applications of Generative AI

Generative AI has revolutionized many industries, offering innovative solutions to diverse challenges. Some notable applications include:

- Art and Design: Generative AI can create unique artwork, including paintings, sculptures, and music compositions.

- Content Creation: Generative AI can assist in writing articles, blog posts, and marketing copy, streamlining content creation processes.

- Drug Discovery: Generative AI can help design new drugs and therapies, accelerating the drug development process.

- Software Development: Generative AI can assist developers in writing code, automating tasks, and improving software quality.

- Education and Training: Generative AI can create personalized learning experiences, tailoring content to individual needs and learning styles.

LLMs as Weapons in Information Warfare

The potential for LLMs to be weaponized in information warfare is a serious concern. These powerful language models can be used to generate and spread misinformation, manipulate public opinion, and sow discord among populations.

The Potential for LLMs to Generate and Spread Misinformation

LLMs are capable of producing text that is often difficult to distinguish from human-written content. This makes them effective tools for creating and disseminating fake news, propaganda, and other forms of misinformation. For example, an LLM could be used to generate a convincing news article about a fictitious event, or to create social media posts that spread false information.

- Generating Fake News Articles: An LLM could be trained on a dataset of news articles and then used to generate new articles that are hard to tell apart from real news. These fake articles could be used to spread misinformation about a particular event or person.

For example, an LLM could be used to generate a fake news article about a politician’s corruption, which could then be shared on social media and other platforms.

- Creating Social Media Posts: LLMs can also be used to create social media posts that spread misinformation. These posts could be designed to look like they are coming from real people, and they could be used to spread rumors, conspiracy theories, and other forms of disinformation.

For example, an LLM could be used to create a fake social media profile that spreads false information about a particular product or service. This could damage the reputation of the product or service and harm the company that makes it.

- Manipulating Search Results: LLMs can also be used to manipulate search results. By creating large amounts of fake content that is relevant to a particular search term, LLMs could be used to push specific websites or articles to the top of search results.

This could be used to promote propaganda or to bury information that is unfavorable to a particular party or individual. For example, an LLM could be used to create a large number of fake websites that are relevant to a particular political candidate.

These websites could then be used to promote the candidate’s agenda or to spread negative information about their opponents.

How LLMs Can Be Used to Manipulate Public Opinion

LLMs can be used to manipulate public opinion by creating targeted propaganda that is tailored to specific audiences. This propaganda could be used to sway people’s opinions on a particular issue, to promote a specific candidate or political party, or to undermine trust in institutions.

- Targeted Propaganda: An LLM could be used to create a propaganda campaign that targets a specific demographic group with messages designed to appeal to that group’s interests and beliefs.

- Emotional Manipulation: LLMs can be used to manipulate people’s emotions. By understanding the emotional triggers of different audiences, LLMs can be used to create content that is designed to evoke specific emotions, such as anger, fear, or sadness. This can be used to influence people’s opinions and to encourage them to take specific actions.

For example, an LLM could be used to create a social media campaign that spreads fear and anxiety about a particular issue, which could then be used to promote a specific political agenda.

- Creating Fake Identities: LLMs can be used to create fake identities that can be used to spread propaganda or to manipulate public opinion. These fake identities could be used to create social media accounts, to write online reviews, or to participate in online forums.

This could be used to spread misinformation or to create the impression that there is widespread support for a particular idea or belief. For example, an LLM could be used to create a fake social media account that spreads false information about a particular company or product.

This could damage the reputation of the company or product and harm its sales.

The Ethical Implications of Weaponizing LLMs for Information Warfare

The weaponization of LLMs for information warfare raises serious ethical concerns. The use of LLMs to spread misinformation, manipulate public opinion, and sow discord could have a devastating impact on society.

- Erosion of Trust: The spread of misinformation by LLMs could erode trust in institutions and in the media. This could lead to a decline in civic engagement and a weakening of democratic processes. For example, if people lose trust in the media because they believe that it is spreading fake news, they may be less likely to participate in elections or to hold their elected officials accountable.

- Social Polarization: The use of LLMs to spread propaganda and to manipulate public opinion could lead to social polarization. This could create divisions within society and make it more difficult to address important social issues. For example, if LLMs are used to spread propaganda that promotes hatred and division, it could lead to increased violence and social unrest.

- Impact on Human Rights: The weaponization of LLMs could have a negative impact on human rights. For example, LLMs could be used to target individuals with hate speech, to spread disinformation that could lead to violence or persecution, or to create targeted propaganda designed to incite violence against a particular group of people.

This could lead to human rights abuses and could undermine the rule of law.

Examples of LLMs in Information Warfare

The use of LLMs in information warfare is a growing concern, as these powerful tools can be used to manipulate public opinion, spread misinformation, and sow discord. This section looks at areas where LLMs have been, or could be, employed for these purposes, and considers their effectiveness and societal impact.

LLMs in Political Campaigns

The 2016 US presidential election saw coordinated botnets generate and spread propaganda on social media platforms. These bots, often disguised as human users, amplified specific narratives, spread misinformation, and engaged in coordinated attacks against opposing candidates. That activity predates today’s LLMs, but it shows the kind of campaign that LLMs could make cheaper to run and harder to spot.

The exact role of automated text generation in political campaigns remains a subject of debate. What is clear is that LLMs are capable of generating convincing fake news articles, social media posts, and even comments.

LLMs in Social Media Manipulation

Beyond political campaigns, LLMs can be used to manipulate public opinion on a variety of issues. For example, they can create highly personalized messages tailored to individual users’ interests and beliefs, which makes those users more susceptible to propaganda.

This tactic can be used to promote specific products, influence public opinion on social issues, or even incite violence.

LLMs in Cyberwarfare

LLMs are also being used in cyberwarfare, particularly in the realm of phishing attacks and social engineering. By leveraging LLMs’ ability to generate human-like text, attackers can craft highly convincing emails, social media messages, and even scripts for phone calls, designed to trick victims into divulging sensitive information.

This tactic can be used to steal data, disrupt critical infrastructure, or even influence political outcomes.

Countermeasures and Mitigation Strategies

The rise of LLMs as weapons in information warfare necessitates the development of effective countermeasures and mitigation strategies. Recognizing and understanding LLM-generated content, combating misinformation, and building resilience against LLM-based attacks are crucial for navigating this evolving landscape.

Detecting and Identifying LLM-Generated Content

Identifying content generated by LLMs is essential for understanding its origins and potential biases. While LLMs are becoming increasingly sophisticated, several techniques can help detect their presence.

- Statistical Analysis: LLMs often exhibit distinct statistical patterns in their output. Analyzing the frequency of words, sentence structure, and other linguistic features can reveal potential LLM-generated content.

- Style and Tone: LLMs tend to produce content with a consistent style and tone, which can be different from human-generated content. Analyzing the writing style, vocabulary choices, and overall tone can help identify LLM-generated text.

- Watermarking: Embedding unique patterns or signatures within LLM-generated content can act as a watermark, allowing for easier identification. This technique is still under development but holds promise for future applications.
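To make the statistical analysis idea concrete, here is a minimal sketch that computes a handful of simple features (vocabulary diversity, sentence length, and how uniform the sentence lengths are). The function name `stylometric_features` and the choice of features are assumptions made for illustration. These numbers are signals to compare across samples, not a reliable detector on their own.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def stylometric_features(text: str) -> dict:
    """Compute a few simple statistical features of a text sample.

    Hypothetical helper for illustration; the feature set is an assumption.
    """
    # Split into rough sentences on ., ! or ? and drop empty pieces.
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    # Lowercased word tokens (letters and apostrophes only).
    words = re.findall(r"[a-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")

    sentence_lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    counts = Counter(words)
    return {
        "word_count": len(words),
        # Distinct words divided by total words: lower means more repetition.
        "type_token_ratio": len(counts) / len(words),
        "mean_sentence_length": mean(sentence_lengths),
        # Low spread means very uniform sentence lengths.
        "sentence_length_stdev": pstdev(sentence_lengths),
        # Share of the text taken up by the single most frequent word.
        "top_word_share": counts.most_common(1)[0][1] / len(words),
    }


sample = "The cat sat. The cat ran fast. Dogs bark!"
for name, value in stylometric_features(sample).items():
    print(f"{name}: {value}")
```

In practice, features like these would be compared against a baseline of text known to be human-written in the same genre, since short or formulaic human writing can look just as uniform.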

Combating Misinformation and Propaganda Disseminated by LLMs

LLMs can be used to generate and disseminate misinformation and propaganda on a massive scale. Countering these threats requires a multi-pronged approach.

- Fact-Checking and Verification: Robust fact-checking mechanisms are crucial for identifying and debunking false information. This involves cross-referencing information with reliable sources, verifying claims, and identifying potential biases.

- Media Literacy Education: Empowering individuals with media literacy skills is essential for discerning credible information from misinformation. This involves teaching critical thinking, source evaluation, and understanding the limitations of online information.

- Platform Responsibility: Social media and other online platforms have a responsibility to address the spread of misinformation. This includes implementing policies to remove false content, promoting fact-checking initiatives, and encouraging responsible user behavior.

Building Resilience Against Information Warfare Tactics Using LLMs

Resilience against LLM-based information warfare requires a proactive approach that combines technological solutions, human awareness, and strategic planning.

- Developing Counter-LLMs: Building counter-LLMs capable of identifying and neutralizing malicious LLM-generated content is a promising strategy. These counter-LLMs can be trained on datasets of known misinformation and propaganda to detect similar patterns in future content.

- Promoting Critical Thinking and Skepticism: Encouraging individuals to critically evaluate information and maintain a healthy level of skepticism is crucial for combating misinformation. This involves questioning sources, identifying biases, and seeking diverse perspectives.

- Strengthening Information Security: Protecting sensitive information and systems from LLM-based attacks is vital. This includes implementing strong cybersecurity measures, regularly updating software, and educating users about potential threats.
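The idea of training a detector on known misinformation can be sketched at toy scale. The example below is not an LLM: it swaps in a much simpler technique, a multinomial naive Bayes classifier with add-one smoothing, to show the train-then-flag workflow. The class name, the `suspect` and `benign` labels, and the four training sentences are all made up for illustration. A real system would need a large, carefully labeled dataset and human review of anything it flags.

```python
import math
import re
from collections import Counter


class NaiveBayesTextClassifier:
    """Multinomial naive Bayes over word counts, with add-one smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of word frequencies
        self.doc_counts = Counter()  # label -> number of training documents
        self.vocab = set()           # every word seen during training

    @staticmethod
    def tokenize(text):
        return re.findall(r"[a-z']+", text.lower())

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = self.tokenize(text)
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def log_scores(self, text):
        """Return the unnormalized log-probability of each label."""
        tokens = self.tokenize(text)
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for token in tokens:
                # Add-one smoothing keeps unseen words from zeroing the score.
                score += math.log((counts[token] + 1) / denom)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical toy training data, invented for this sketch.
TOY_TEXTS = [
    "shocking secret they do not want you to know share now",
    "miracle cure banned by doctors share before deleted",
    "city council approves budget for road repairs",
    "local library extends weekend opening hours",
]
TOY_LABELS = ["suspect", "suspect", "benign", "benign"]

classifier = NaiveBayesTextClassifier().fit(TOY_TEXTS, TOY_LABELS)
print(classifier.predict("share this shocking secret now"))
print(classifier.predict("council approves library budget"))
```

A word-count model like this only picks up surface vocabulary, so it is easy to evade. It is best read as a picture of the workflow, where labeled examples go in and a score comes out, rather than as a usable defense.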

Future Implications and Considerations

The rapid evolution of LLMs raises concerns about their potential misuse in information warfare. As these models become more sophisticated, they could pose a significant threat to global security and societal stability. Understanding the potential implications and developing appropriate safeguards is crucial to mitigating these risks.

The Increasing Sophistication of LLMs in Information Warfare

LLMs are continuously evolving, becoming more powerful and capable of generating increasingly convincing and persuasive content. This advancement presents a significant challenge to combating misinformation and propaganda. For example, future LLMs could be used to:

- Work alongside image and video generation models to create highly realistic deepfakes, hard to distinguish from genuine videos, to spread disinformation and damage reputations.

- Generate personalized propaganda tailored to individual users’ beliefs and vulnerabilities, making it more effective and difficult to detect.

- Automate the creation and dissemination of misinformation campaigns across multiple platforms, amplifying their reach and impact.

The Need for Regulations and Ethical Guidelines

The potential for LLMs to be misused in information warfare underscores the urgent need for regulations and ethical guidelines governing their development and deployment. These frameworks should:

- Establish clear accountability mechanisms for the use of LLMs in information warfare, ensuring that developers and users are held responsible for their actions.

- Promote transparency in the development and deployment of LLMs, allowing for greater scrutiny and accountability.

- Foster collaboration between governments, industry, and academia to develop robust countermeasures against the misuse of LLMs in information warfare.

The Long-Term Impact of LLMs on the Information Landscape and Societal Trust

The widespread adoption of LLMs could have profound and long-lasting implications for the information landscape and societal trust.

- The proliferation of misinformation generated by LLMs could erode public trust in traditional media and institutions, leading to a fragmented and polarized information environment.

- The increasing difficulty in distinguishing between genuine and AI-generated content could undermine the credibility of information and make it challenging to discern truth from falsehood.

- The potential for LLMs to be used for manipulation and propaganda could undermine democratic processes and erode public trust in democratic institutions.