Google DeepMind’s 2B-parameter GEMMA 2 model is the smallest member of the GEMMA 2 family of open-weight language models (it has about 2.6 billion parameters in total). It does not rely on sheer size. Instead, it is designed to deliver strong natural language processing performance in a model compact enough to run on laptops and other modest hardware. GEMMA 2 handles tasks like language understanding and text generation, and to a lesser degree translation. That range makes it a versatile building block for applications across many industries.

GEMMA 2’s performance comes from its architecture and its training process. The architecture refines the standard Transformer design, and the 2B model was trained on about 2 trillion tokens of text and code. That training lets it learn intricate patterns and relationships within language.

This extensive training allows GEMMA 2 to generate coherent and contextually relevant text, making it a valuable tool for various applications.

Architecture and Training of GEMMA 2

GEMMA 2, in its 2B-parameter version developed by Google DeepMind, is a compact but capable language model. Its architecture and training process are designed to let it generate human-like text, translate between languages, write different kinds of creative content, and answer questions informatively.

Architecture of GEMMA 2

The architecture of GEMMA 2 is based on the Transformer, the neural network architecture behind most modern language models. A Transformer relies on attention. For each token, the model scores how relevant every other token in the sequence is and then builds a weighted combination of their representations. This lets it capture long-range dependencies and relationships within text.
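The attention computation can be sketched in a few lines. The following is a minimal, single-head sketch in plain Python. It assumes the queries, keys, and values have already been computed; real models derive them with learned projection matrices and run many heads in parallel. The function name `causal_attention` and its `window` parameter are hypothetical. Setting `window` restricts each token to a fixed number of recent tokens, which is the idea behind the sliding-window (local) attention layers that Gemma 2 alternates with global attention layers.

```python
import math


def softmax(scores):
    """Numerically stable softmax; entries of -inf receive weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries, keys, values, window=None):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of equal-length vectors, one per token.
    window: if given, token i attends only to the last `window` positions
            (including itself), mimicking sliding-window local attention.
    """
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            is_future = j > i                                 # causal mask
            too_far = window is not None and i - j >= window  # local window mask
            if is_future or too_far:
                scores.append(float("-inf"))
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs


q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(causal_attention(q, k, v)[0])            # [1.0, 0.0]: token 0 sees only itself
print(causal_attention(q, k, v, window=1)[2])  # [0.5, 0.5]: window of 1 keeps only itself
```

Because masked positions get a score of negative infinity, they receive exactly zero weight after the softmax. The first token therefore copies its own value vector.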

- Decoder-Only Structure: Unlike the original Transformer, GEMMA 2 has no separate encoder. It is a decoder-only model that reads a sequence of tokens and predicts the next token. A causal mask ensures that each position can attend only to itself and earlier positions, so the model generates text one token at a time.

- Multi-Head Attention: Multi-head attention lets the model attend to different aspects of the input simultaneously, with each head learning its own notion of relevance. GEMMA 2 uses grouped-query attention, in which groups of query heads share the same key and value heads to reduce memory use. It also alternates layers of local sliding-window attention (a 4,096-token window) with layers of global attention over its full 8,192-token context.

- Positional Encoding: Attention on its own ignores word order, so position information must be added. GEMMA 2 uses rotary position embeddings (RoPE), which rotate query and key vectors by position-dependent angles. As a result, attention scores depend on the relative distance between tokens.

- Feedforward Neural Networks: After attention, each layer passes every token’s representation through a feedforward network that applies non-linear transformations, enabling the model to learn complex patterns in the data. GEMMA 2 uses a gated variant with GELU activations (GeGLU).
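Rotary position embeddings can be illustrated the same way. This sketch assumes the common base frequency of 10,000 and pairs adjacent vector dimensions. Many real implementations instead pair the first half of the dimensions with the second half, which is equivalent up to a reordering. Each pair of components is rotated by an angle proportional to the token’s position. The helper names `rope` and `dot` are hypothetical. The check at the end shows the key property: the score between a query and a key depends only on their relative distance, not on their absolute positions.

```python
import math


def rope(vec, position, base=10000.0):
    """Apply a rotary position embedding to one vector of even length.

    Each adjacent pair (vec[i], vec[i+1]) is rotated by the angle
    position * base ** (-i / d), so different pairs rotate at different frequencies.
    """
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even vector dimension")
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])  # 2-D rotation of the pair
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]
near = dot(rope(q, 5), rope(k, 2))     # query at position 5, key at position 2
far = dot(rope(q, 105), rope(k, 102))  # same 3-token gap, 100 positions later
print(math.isclose(near, far, abs_tol=1e-9))  # True: the score depends only on the gap
```

Because rotations preserve vector length, RoPE changes only the angle between queries and keys, never their magnitudes.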

Training Data for GEMMA 2

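Google describes GEMMA 2’s training data only at a high level. The 2B model was trained on roughly 2 trillion tokens, drawn mainly from English web documents, code, and mathematical text. The categories below are typical sources for pretraining corpora of this kind. Google has not published a detailed breakdown, so they are illustrative rather than a confirmed list: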

- Books: Long-form writing from various genres and time periods, which exposes a model to diverse writing styles and extended narrative structure.

- Articles: News articles, blog posts, and other online articles, which expose the model to events up to the data’s cutoff date, different perspectives, and varied writing formats.

- Code: Source code in many programming languages, which teaches the model the syntax and structure of code and supports tasks like code generation and analysis.

- Wikipedia: Encyclopedic articles that supply factual information on a broad range of topics.

- Other Online Sources: Social media posts, forums, and other web content, which expose the model to colloquial language, slang, and informal writing styles.

Training Process of GEMMA 2

Training a large language model like GEMMA 2 is a computationally intensive process that requires specialized hardware and software. The training process involves:

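- Data Preprocessing: The raw text is cleaned and filtered, for example to remove low-quality or unsafe content, and then tokenized into subword units. Gemma models use a SentencePiece tokenizer with a vocabulary of roughly 256,000 tokens. Token sequences are packed or padded to a fixed length so they can be processed in batches.

- Model Initialization: The model’s parameters start from random values before training begins.

- Gradient Descent Optimization: Training uses gradient-based optimizers, such as Adam or SGD, to adjust the model’s parameters iteratively. The core objective is next-token prediction. Through a cross-entropy loss, the model is penalized when it assigns low probability to the token that actually comes next in the training text. For the 2B model, Google reports using knowledge distillation. In this approach, the model learns to match the full next-token probability distribution of a larger teacher model rather than only the single observed token.

- Hyperparameter Tuning: Hyperparameters such as the learning rate, batch size, and number of training steps are tuned to optimize the model’s performance. Each full training run is expensive, so tuning is typically done through experiments, often on smaller models, that compare different values and select the combination that yields the best results.

- Regularization: Techniques such as dropout and L2 regularization (weight decay) are standard tools for preventing overfitting and improving a model’s ability to generalize.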
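The optimizer and regularization steps can be made concrete with a toy problem. This is a minimal sketch of the Adam update rule with an L2 penalty added to the loss. It fits a single weight `w` in the hypothetical model y ≈ w · x, using squared error instead of an LLM’s next-token cross-entropy loss. The function `adam_fit` and its default hyperparameters are illustrative assumptions, not GEMMA 2’s training settings. Note that this sketch adds the L2 term to the gradient. The AdamW variant widely used for large models instead applies weight decay directly to the weights.

```python
import math


def adam_fit(xs, ys, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, l2=0.0, steps=500):
    """Fit y ≈ w * x with Adam, minimizing mean squared error + l2 * w**2."""
    w = 0.0  # toy starting point (real networks start from random values)
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    n = len(xs)
    for t in range(1, steps + 1):
        # Gradient of the data loss plus the gradient of the L2 penalty.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n + 2 * l2 * w
        m = beta1 * m + (1 - beta1) * grad
        v = beta2 * v + (1 - beta2) * grad * grad
        m_hat = m / (1 - beta1 ** t)  # correct the bias from starting m at 0
        v_hat = v / (1 - beta2 ** t)  # correct the bias from starting v at 0
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]                 # generated by y = 3x
print(round(adam_fit(xs, ys), 3))          # 3.0
print(round(adam_fit(xs, ys, l2=1.0), 3))  # 2.647, i.e. 45/17: pulled toward zero
```

With `l2=1.0`, the penalty pulls the fitted weight from 3 toward zero. This is how L2 regularization discourages large parameter values.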

Computational Resources for GEMMA 2

Training a large language model like GEMMA 2 requires significant computational resources, including:

- High-Performance Computing (HPC): Specialized accelerators, such as GPUs or TPUs, are required to make training fast enough. Google reports training GEMMA 2 on its TPUs, using the JAX framework and ML Pathways.

- Large-Scale Storage: The vast amount of training data requires significant storage capacity.

- Distributed Training: The workload is far too large for a single chip, so training is spread across many accelerators and machines. In data parallelism, for example, each device processes a different slice of every batch. The devices then average their gradients so that all copies of the model stay in sync.
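Data-parallel training, one common form of distributed training, can be simulated in a single process. In this minimal sketch the names `grad_mse` and `data_parallel_grad` are hypothetical, and the model is a one-weight toy. Each shard’s gradient stands in for work done on a separate accelerator. The final average stands in for the all-reduce collective that real systems use to combine gradients across devices. With equal-sized shards, the averaged gradient equals the gradient over the whole batch, which is why every replica can apply the same update.

```python
def grad_mse(w, batch):
    """Gradient of mean squared error for the toy model y ≈ w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_grad(w, batch, num_workers):
    """Simulate data parallelism: shard the batch, compute local gradients, average them."""
    if len(batch) % num_workers:
        raise ValueError("this sketch assumes equal-sized shards")
    size = len(batch) // num_workers
    shards = [batch[i * size:(i + 1) * size] for i in range(num_workers)]
    # In a real system each shard's gradient is computed on its own accelerator.
    local_grads = [grad_mse(w, shard) for shard in shards]
    # Stand-in for the all-reduce step that averages gradients across devices.
    return sum(local_grads) / num_workers


batch = [(float(x), 3.0 * x + 0.5) for x in range(1, 9)]  # 8 toy examples
print(data_parallel_grad(1.0, batch, num_workers=4))
print(grad_mse(1.0, batch))  # same value, up to floating-point rounding
```

Large training runs typically combine this with other forms of parallelism, such as splitting the model’s own parameters across devices.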

GEMMA 2’s Capabilities and Performance

GEMMA 2, the 2B-parameter model from Google DeepMind, performs well across a range of natural language processing tasks. Google reports clear improvements in language understanding and text generation over its predecessor, the first-generation Gemma 2B model.

Language Understanding

GEMMA 2 can handle complex concepts and nuances within text, which shows up in tasks like question answering, sentiment analysis, and summarization. For instance, when asked a complex question about a scientific topic, it can draw on knowledge learned during training, or on reference passages supplied in the prompt, to give a concise and informative answer. Like all language models, it does not look up documents on its own and can produce plausible but incorrect answers.
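A practical way to answer questions from specific source material is to place that material directly in the prompt. The sketch below builds a single-turn prompt using the turn markers (`<start_of_turn>`, `<end_of_turn>`) that Gemma’s instruction-tuned models are trained on. The function name `build_qa_prompt` and the instruction wording are hypothetical. The exact template is an assumption here and should be confirmed against the official model card for the checkpoint you use.

```python
def build_qa_prompt(passage: str, question: str) -> str:
    """Build a single-turn prompt in Gemma's instruction-tuned turn format.

    The reference passage is placed before the question so the model can
    ground its answer in text it is given, rather than only in what it
    learned during training.
    """
    user_message = (
        "Answer the question using only the passage below.\n\n"
        f"Passage:\n{passage}\n\n"
        f"Question: {question}"
    )
    return (
        "<bos><start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"  # the model's reply is generated from here
    )


prompt = build_qa_prompt(
    "Water boils at 100 degrees Celsius at sea level.",
    "At what temperature does water boil at sea level?",
)
print(prompt)
```

If your tokenizer inserts the `<bos>` token automatically, remove it from the string to avoid a duplicate. With Hugging Face Transformers, `tokenizer.apply_chat_template` can build this format from a list of messages.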

Text Generation

GEMMA 2 produces coherent, grammatically correct text across many writing styles and domains. It can generate creative stories, write technical documentation, and compose poems. It also maintains context and consistency well, within the limits of its 8,192-token context window.

For example, when asked to write a short story, GEMMA 2 can weave together characters, plot points, and themes into a coherent narrative.
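Text generation of this kind is autoregressive. The model repeatedly predicts a distribution over the next token, appends a chosen token, and feeds the longer sequence back in. The sketch below shows greedy decoding, which always picks the most likely token. The bigram table `BIGRAMS` and the functions `toy_model` and `generate` are hypothetical stand-ins. A real model computes each distribution with its full Transformer over the entire context. Applications also often sample from the distribution, for example with a temperature setting, rather than always taking the top token, which gives more varied stories.

```python
def generate(next_token_probs, prompt, max_new_tokens, eos="<eos>"):
    """Greedy autoregressive decoding.

    next_token_probs maps the tokens so far to a dict {token: probability}.
    Each chosen token is appended and becomes context for the next step.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)  # greedy: take the most likely token
        tokens.append(best)
        if best == eos:
            break
    return tokens


# Hypothetical toy "model": a bigram table that looks only at the last token.
BIGRAMS = {
    "once": {"upon": 0.9, "more": 0.1},
    "upon": {"a": 1.0},
    "a": {"time": 0.7, "dragon": 0.3},
    "time": {"<eos>": 0.6, "there": 0.4},
}


def toy_model(tokens):
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})


print(generate(toy_model, ["once"], max_new_tokens=10))
# ['once', 'upon', 'a', 'time', '<eos>']
```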

Translation

GEMMA 2 can also translate text between languages. However, it was trained primarily on English data, so translation quality varies by language pair, especially for pairs with large grammatical and semantic differences. When translating a news article from English to Spanish, for example, it can generally convey the original meaning while maintaining the article’s structure and tone.

Performance Comparison

Google reported that at release, GEMMA 2’s 2B model outperformed other open models of comparable size on a range of standard benchmarks for language understanding and generation. Its performance comes less from size than from training choices. Google credits architectural refinements, such as interleaved local and global attention, and knowledge distillation from a larger teacher model. Results vary by task, so benchmarks that match a specific use case are the best guide.

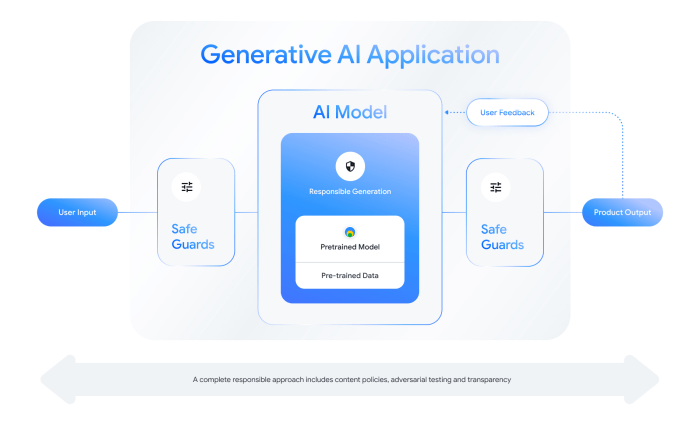

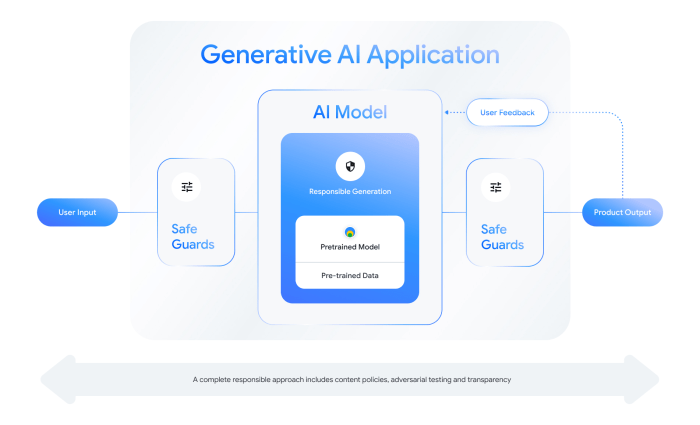

Ethical Implications

The development and deployment of powerful language models like GEMMA 2 raise significant ethical considerations. These models can be misused to generate harmful content, such as fake news, hate speech, and misinformation. It is crucial to develop safeguards and guidelines to mitigate these risks and ensure responsible use of these technologies.

Applications and Potential Impact of GEMMA 2

GEMMA 2’s compact size and openly available weights make it practical to deploy in many settings, from cloud services to local devices. Its natural language capabilities open doors to a wide range of applications, from personalized education to healthcare support tools.

Impact on Education

The potential impact of GEMMA 2 on education is significant. It can personalize learning experiences, making education more engaging and effective. Here are some key applications:

- Adaptive Learning Systems: GEMMA 2 can power adaptive learning platforms that tailor content and pace to individual student needs, providing personalized learning paths.

- Interactive Tutoring: GEMMA 2 can act as an intelligent tutor, giving real-time feedback and explanations, answering student questions, and guiding students through complex concepts.

- Language Learning: GEMMA 2 can support language learning with interactive exercises, written conversation practice, and personalized feedback on grammar and vocabulary.

Advancements in Healthcare

GEMMA 2’s ability to process and understand complex medical text could support a range of healthcare applications:

- Medical Diagnosis and Treatment: GEMMA 2 can analyze patient records, medical literature, and clinical trial reports to help clinicians with diagnosis and treatment planning.

- Drug Discovery: GEMMA 2 can help accelerate drug discovery research by summarizing large volumes of scientific literature and data and highlighting potential drug candidates for further study.

- Patient Education and Support: GEMMA 2 can provide patients with personalized information about their conditions, treatment options, and lifestyle changes.

Entertainment and Creative Industries

GEMMA 2’s creative potential is vast, with applications in entertainment and creative industries:

- Content Generation: GEMMA 2 can generate engaging written content, such as scripts, stories, poems, and song lyrics.

- Interactive Storytelling: GEMMA 2 can create interactive narratives that adapt to user choices, providing personalized and immersive experiences.

- Game Development: GEMMA 2 can generate dialogue, flesh out characters, and develop compelling storylines for video games.

Benefits and Challenges

The widespread adoption of GEMMA 2 presents both benefits and challenges:

Benefits

- Increased Efficiency and Productivity: GEMMA 2 can automate tasks, freeing up people for more complex and creative work.

- Enhanced Decision-Making: GEMMA 2 can analyze vast amounts of data to provide insights and support informed decision-making.

- Improved Accessibility: GEMMA 2 can make information and services more accessible to a wider range of people, including those with disabilities.

Challenges

- Bias and Fairness: LLMs like GEMMA 2 can reflect biases present in the data they are trained on, leading to unfair or discriminatory outcomes.

- Job Displacement: Automation driven by LLMs could lead to job displacement in certain sectors.

- Misinformation and Manipulation: LLMs can be used to generate convincing but false information, potentially contributing to misinformation and manipulation.

Vision for the Future of LLMs

LLMs like GEMMA 2 are poised to play a significant role in shaping the future. They have the potential to:

- Augment Human Capabilities: LLMs can work alongside humans, enhancing their abilities and enabling them to achieve more.

- Drive Innovation: LLMs can accelerate innovation by providing insights, generating new ideas, and automating complex tasks.

- Create a More Equitable and Sustainable World: LLMs could help address global challenges such as climate change, poverty, and inequality.