Generative AI Threatens Democracy: Midjourney, DALL-E 2, and Stable Diffusion

These powerful tools, capable of turning short text prompts into realistic images, have ushered in a new era of digital creation. While they offer incredible potential for artistic expression and innovation, they also raise serious concerns about the future of democracy.

The ability to generate convincing deepfakes and manipulate information presents a chilling prospect for democratic societies. Imagine a world where fabricated evidence can sway elections, or where AI-generated propaganda spreads unchecked, eroding trust in institutions and fueling societal divisions. These scenarios are no longer purely hypothetical; they are increasingly realistic possibilities, and we must confront them head-on.

The Rise of Generative AI

Generative AI, a branch of artificial intelligence focused on creating new content, has grown explosively in recent years and is reshaping a wide range of industries. This growth is largely attributed to advancements in deep learning techniques, particularly in areas like natural language processing and computer vision.

These advancements have paved the way for the development of powerful generative AI models such as Midjourney, DALL-E 2, and Stable Diffusion, each boasting unique capabilities and impacting diverse industries.

Key Advancements in Generative AI

The development of these advanced generative AI models is a testament to the significant progress made in deep learning and computer vision. A key factor contributing to this progress is the availability of massive datasets for training these models.

These datasets, often containing millions or even billions of images paired with text descriptions, provide the information the models need to learn complex patterns and generate realistic outputs. Another crucial advancement is the development of novel deep learning architectures like Generative Adversarial Networks (GANs) and Diffusion Models.

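GANs consist of two neural networks, a generator and a discriminator, that compete against each other: the generator tries to produce outputs the discriminator cannot tell apart from real data, and both improve as training proceeds. Diffusion Models, on the other hand, work by gradually adding noise to training images and learning to reverse that process, which lets them generate high-quality images starting from random noise. DALL-E 2 and Stable Diffusion are both built on diffusion models.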
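To make the diffusion idea more concrete, here is a minimal sketch of the forward (noising) half of the process, assuming the DDPM formulation with a linear noise schedule. The step count and schedule values (1,000 steps, beta rising from 1e-4 to 0.02) are illustrative assumptions, not the settings used by Midjourney, DALL-E 2, or Stable Diffusion, and the function names are hypothetical. The learned reverse process, in which a neural network is trained to predict the added noise so it can be removed step by step, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, spaced linearly (illustrative DDPM-style values)."""
    if num_steps < 2:
        return [beta_start] * num_steps
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s): the share of signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]  # eps ~ N(0, I)
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    # A denoising network would be trained to recover eps from (x_t, t).
    return x_t, eps


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
image = [0.9, -0.3, 0.5, 0.1]  # toy four-pixel "image", values scaled to [-1, 1]
for t in (0, 250, 999):
    x_t, _ = add_noise(image, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 5), [round(v, 3) for v in x_t])
```

Because the noisy sample at any step can be computed directly from the clean image, training can pick random steps cheaply. By the final step, alpha_bar has fallen below 0.001, so the sample is almost pure Gaussian noise; that is why a trained model can begin generation from random noise and denoise its way to an image.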

Impact on Art, Design, and Media

The emergence of generative AI models like Midjourney, DALL-E 2, and Stable Diffusion has had a profound impact on various creative industries. In the art world, these models have democratized art creation, enabling individuals with limited artistic skills to create unique and visually appealing artwork.

These models are also transforming the design industry by streamlining the design process and enabling rapid prototyping. Designers can use these tools to generate multiple variations of designs quickly, allowing them to explore different concepts and iterate faster.


In the media industry, generative AI is being used to create realistic visual content. Image models like these, along with newer video-generation models, enable filmmakers and game developers to build more engaging and immersive experiences.

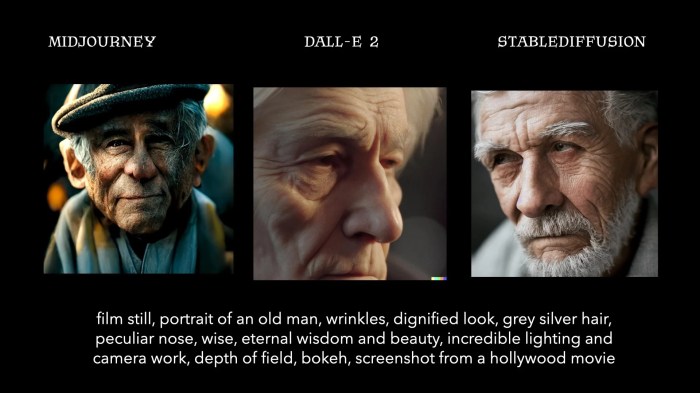

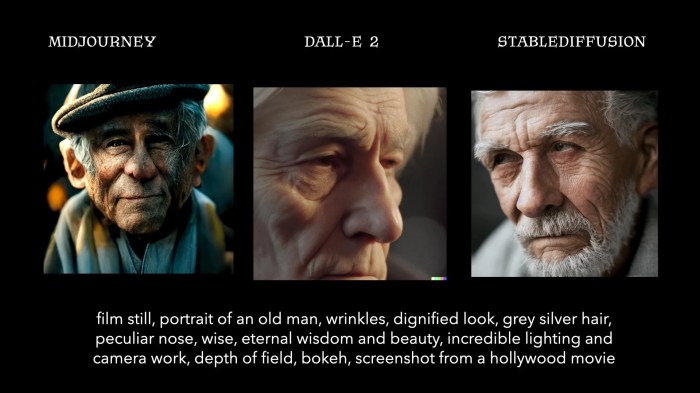

Comparison of Midjourney, DALL-E 2, and Stable Diffusion

- Midjourney is known for its distinctive artistic style. It excels at surreal and abstract artwork, often producing visually striking and imaginative results from text prompts.

- DALL-E 2, developed by OpenAI, is renowned for its photorealistic image generation. It can follow complex and detailed text prompts, and its output can be difficult to distinguish from real photographs.

- Stable Diffusion is an open-source model known for its flexibility and versatility. Its capabilities include image generation, inpainting, and image-to-image translation.

Generative AI and Democracy

The rapid advancement of generative AI, with its ability to create realistic and convincing content, has raised serious concerns about its potential impact on democratic processes. While the technology offers numerous benefits, its misuse could undermine the very foundations of a healthy democracy.

Potential Threats to Democratic Processes

The potential for generative AI to disrupt democratic processes is a significant concern. Here are some key threats:

- Deepfakes and AI-Generated Propaganda: Generative AI can be used to create highly realistic deepfakes, manipulated videos that appear to show individuals saying or doing things they never did. These deepfakes can be used to spread misinformation, damage reputations, and sow discord. For instance, manipulated videos of political figures circulated online around the 2020 US presidential election, raising concerns about the potential of such media to influence voters.

- Manipulation of Public Opinion: Generative AI can be used to create large volumes of fake news articles, social media posts, and other content designed to manipulate public opinion. This can be done by creating accounts that appear to be genuine users and then using them to spread false information or propaganda.

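For example, during the 2016 US presidential election, Russian operatives used networks of fake social media accounts, including automated bots, to spread disinformation in an effort to influence the election. Those campaigns predated today's generative tools, which could make similar operations cheaper and larger in scale.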

- Undermining Trust in Institutions: The widespread availability of generative AI tools makes it easier for individuals and groups to create fabricated content and spread misinformation. This can erode trust in institutions and make it more difficult for people to distinguish between truth and falsehood.
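Campaigns that push large volumes of similar posts through many accounts often leave a statistical trace: near-identical wording across supposedly independent users. The sketch below is a deliberately simple illustration, not a production bot detector. It splits each post into word 3-gram "shingles," compares posts from different accounts using Jaccard similarity, and flags pairs above a threshold. The function names, the shingle size, the 0.5 threshold, and the example posts are all invented for illustration, and paraphrasing by a language model can defeat this kind of surface matching.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Set of lowercase word k-grams ("shingles") in a post."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """|A & B| / |A | B|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def flag_coordinated_pairs(posts, threshold=0.5, k=3):
    """Return (account_a, account_b, similarity) for near-duplicate posts
    published by different accounts. `posts` is a list of (account, text)."""
    sigs = [(account, shingles(text, k)) for account, text in posts]
    flagged = []
    for (acct_a, sig_a), (acct_b, sig_b) in combinations(sigs, 2):
        if acct_a == acct_b:
            continue  # the same account repeating itself is not coordination
        sim = jaccard(sig_a, sig_b)
        if sim >= threshold:
            flagged.append((acct_a, acct_b, round(sim, 2)))
    return flagged


# Invented example posts.
posts = [
    ("@acct1", "Polling stations in District 9 are closed tomorrow, vote online instead"),
    ("@acct2", "Polling stations in District 9 are closed tomorrow, so vote online instead!"),
    ("@acct3", "Reminder: polls open at 7am, bring your ID"),
]
print(flag_coordinated_pairs(posts))  # [('@acct1', '@acct2', 0.58)]
```

Comparing every pair of posts grows quadratically with volume; at platform scale, techniques such as MinHash with locality-sensitive hashing are typically used to find candidate pairs first, and text similarity is combined with other signals such as posting times and account age.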

Examples of Deepfakes and AI-Generated Propaganda

The use of deepfakes and AI-generated propaganda to manipulate public opinion and undermine trust in institutions is a growing concern. Here are some examples:

- The “Obama Deepfake”: In 2018, BuzzFeed and filmmaker Jordan Peele released a deepfake video in which former US President Barack Obama appeared to make offensive remarks, with Peele supplying the voice. Although it was produced as a public service announcement warning about the technology, the video went viral and raised concerns about how easily deepfakes could be used for malicious purposes.

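- Disinformation in the 2019 Hong Kong Protests: During the 2019 Hong Kong protests, coordinated disinformation campaigns sought to discredit protesters and sow discord among them. Reported tactics included fake news articles, inauthentic social media posts, and manipulated media purporting to show protesters making false statements. In August 2019, Twitter and Facebook removed networks of accounts they linked to the Chinese government.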

- AI-Powered Disinformation Campaigns: In 2020, a research study found evidence of AI-powered disinformation campaigns targeting the US presidential election. These campaigns involved the use of bots and other automated tools to spread misinformation and influence public opinion.

Ethical Implications of Generative AI in Creating Fabricated Content

The use of generative AI to create realistic but fabricated content raises significant ethical concerns. Here are some key considerations:

- The Spread of Misinformation: Generative AI can be used to create and spread misinformation at an unprecedented scale. This can have a profound impact on public discourse and decision-making.

- The Erosion of Trust: The widespread availability of generative AI tools makes it more difficult for people to distinguish between truth and falsehood. This can erode trust in institutions and lead to a decline in civic engagement.

- The Manipulation of Elections: Generative AI can be used to create deepfakes and other forms of fabricated content that could influence the outcome of elections. This raises concerns about the integrity of democratic processes.

Mitigating Risks and Fostering Responsible Use

The rapid advancement of Generative AI, while promising immense benefits, also necessitates a proactive approach to mitigate potential risks and ensure its responsible use. A comprehensive framework for responsible development and deployment is crucial to safeguard democracy and prevent unintended consequences.

Strategies for Mitigating Risks

Mitigating the risks associated with Generative AI requires a multi-pronged approach that addresses both technological and societal concerns. Key strategies include:

- Developing Robust Safety Mechanisms: Integrating safety mechanisms into AI systems is paramount. This includes techniques like adversarial training, data poisoning detection, and model interpretability to identify and address potential biases, vulnerabilities, and malicious uses.

- Promoting Transparency and Explainability: Ensuring transparency in AI algorithms and decision-making processes is crucial for building trust and accountability. This involves making model parameters and training data accessible, along with providing clear explanations for AI-generated outputs.

- Combating Deepfakes and Misinformation: The potential for Generative AI to create realistic deepfakes poses a significant threat to democratic processes. Developing robust detection and verification tools, coupled with public education campaigns, is vital to counter the spread of misinformation.

- Addressing Bias and Fairness: Generative AI systems are susceptible to inheriting biases from their training data. Efforts to address bias include using diverse datasets, developing fairness metrics, and implementing bias mitigation techniques during model development.
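Detection classifiers are one half of the verification toolkit mentioned above; the other is provenance, where a publisher attaches a tamper-evident record to media when it is created. Real provenance standards such as C2PA use public-key certificates and embedded manifests. The sketch below is a simplified stand-in that uses only Python's standard-library `hmac` and `hashlib` with a shared secret key, so it demonstrates the core idea (any change to the media or to its claims breaks verification) rather than any standard's actual format. The key, manifest fields, and function names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical publisher key. A real system would use public-key signatures
# so that anyone can verify a manifest without holding the secret.
SIGNING_KEY = b"example-newsroom-key"


def make_manifest(media_bytes, creator, ai_generated):
    """Bind a SHA-256 digest of the media to basic claims, then MAC the whole record."""
    claims = {
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
        "creator": creator,
        "ai_generated": ai_generated,
    }
    payload = json.dumps(claims, sort_keys=True).encode()
    claims["mac"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return claims


def verify(media_bytes, manifest):
    """True only if the claims are untampered AND still describe these exact bytes."""
    claims = {k: v for k, v in manifest.items() if k != "mac"}
    payload = json.dumps(claims, sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, manifest.get("mac", "")):
        return False  # manifest was edited or forged
    return claims["sha256"] == hashlib.sha256(media_bytes).hexdigest()


photo = b"placeholder image bytes"
manifest = make_manifest(photo, creator="Example Newsroom", ai_generated=False)
print(verify(photo, manifest))              # True
print(verify(photo + b"edited", manifest))  # False: the media changed
forged = dict(manifest, ai_generated=True)
print(verify(photo, forged))                # False: a claim changed
```

Provenance cannot prove that unlabeled content is fake; it only lets authentic content prove where it came from, which is why it complements rather than replaces detection and public education.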

Framework for Responsible AI Development and Deployment

A comprehensive framework for responsible AI development and deployment should prioritize transparency, accountability, and ethical considerations. Key elements include:

- Ethical Guidelines and Principles: Establishing clear ethical guidelines and principles for AI development and use is crucial. These guidelines should address issues like fairness, privacy, accountability, and transparency, ensuring that AI aligns with societal values and promotes human well-being.

- Auditing and Oversight Mechanisms: Regular auditing and oversight of AI systems are essential to identify and mitigate potential risks. This includes evaluating model performance, detecting bias, and ensuring compliance with ethical guidelines.

- Data Governance and Privacy Protection: Robust data governance frameworks are essential to protect user privacy and prevent misuse of personal information. This includes establishing clear data collection, storage, and usage policies, along with mechanisms for data anonymization and encryption.

- Public Engagement and Education: Public education and engagement are vital for fostering understanding and trust in AI. This includes providing accessible information about AI technology, its potential benefits and risks, and promoting responsible use.
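As one concrete example of the bias detection an audit might include, the sketch below runs a demographic parity check: does a system produce a given outcome (for instance, approving a political ad or surfacing a post) at similar rates across groups? It computes per-group positive rates, the largest gap between them, and the ratio of the lowest to the highest rate. The record format, group labels, and sample data are hypothetical; the 0.8 cutoff borrows the "four-fifths rule" from US employment-selection guidance purely as an illustrative threshold. Demographic parity is only one of several fairness metrics, and different metrics can conflict.

```python
from collections import defaultdict


def positive_rates(records):
    """Share of positive outcomes per group. `records` yields (group, outcome: bool)."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {g: positives[g] / totals[g] for g in totals}


def parity_report(records, min_ratio=0.8):
    """Demographic-parity summary: largest rate gap, lowest/highest ratio, pass/fail flag."""
    rates = positive_rates(records)
    lo, hi = min(rates.values()), max(rates.values())
    ratio = lo / hi if hi > 0 else 1.0  # no positives anywhere counts as parity
    return {
        "rates": rates,
        "max_difference": hi - lo,
        "min_max_ratio": ratio,
        "passes": ratio >= min_ratio,
    }


# Hypothetical audit sample: (group, was the output approved?)
sample = [("A", True)] * 45 + [("A", False)] * 55 + [("B", True)] * 30 + [("B", False)] * 70
report = parity_report(sample)
print(report["rates"])                    # {'A': 0.45, 'B': 0.3}
print(round(report["min_max_ratio"], 3))  # 0.667
print(report["passes"])                   # False
```

A failing check like this one is a signal for human investigation, not proof of discrimination on its own; the gap may reflect differences in the underlying inputs that an auditor needs to examine.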

Role of Government Regulation, Industry Self-Regulation, and Public Education

Addressing the challenges posed by Generative AI requires a collaborative effort involving government, industry, and the public.

- Government Regulation: Governments play a crucial role in establishing regulations that promote responsible AI development and use. This includes developing clear legal frameworks for data privacy, algorithmic transparency, and liability for AI-related harms. For instance, the European Union’s General Data Protection Regulation (GDPR) sets standards for data protection and privacy, which can serve as a model for other regions.

- Industry Self-Regulation: Industry self-regulation can complement government regulations by promoting ethical standards and best practices within the AI community. Industry bodies can develop and enforce codes of conduct, establish certification standards, and promote transparency and accountability within their respective sectors.

- Public Education: Public education is essential for fostering critical thinking about AI and promoting responsible use. This includes raising awareness about the potential benefits and risks of AI, empowering individuals to make informed decisions, and encouraging public dialogue about the ethical and societal implications of AI.

The Future of Generative AI and Democracy

The rapid advancement of generative AI presents both exciting opportunities and significant challenges for democratic societies. As these technologies become more powerful and pervasive, it is crucial to consider their potential long-term impact on our institutions and the very fabric of our shared public life.

This section delves into the future of generative AI and its implications for democracy, exploring the potential risks and opportunities while emphasizing the need for responsible development and use.

The Long-Term Impact of Generative AI on Democracy

The potential long-term impact of generative AI on democracy is a complex and multifaceted issue. It involves understanding how these technologies might reshape political discourse, influence public opinion, and potentially undermine democratic processes. Here are some key areas of concern and opportunity:

- Deepfakes and Misinformation: Generative AI can create hyperrealistic synthetic media, such as videos and audio recordings, that can be used to spread misinformation and manipulate public opinion. Deepfakes, for instance, can be used to fabricate evidence or create false narratives that undermine trust in institutions and individuals.

This raises concerns about the erosion of truth and the potential for political manipulation.

- Algorithmic Bias and Discrimination: Generative AI models are trained on vast datasets, which may contain biases and reflect existing societal inequalities. If these biases are not addressed, generative AI could perpetuate and amplify existing forms of discrimination in areas like employment, education, and even political representation.

This raises concerns about the potential for algorithmic bias to exacerbate social divisions and undermine democratic principles of equality and fairness.

- Erosion of Trust and Polarization: The proliferation of AI-generated content, especially when amplified by personalized news feeds and social media recommendation algorithms, can contribute to filter bubbles and echo chambers. This can lead to the erosion of trust in traditional institutions and a deepening of political polarization.

Individuals may become increasingly isolated in their own information silos, making it harder to engage in meaningful dialogue and find common ground.

- Automation of Political Processes: Generative AI could automate tasks like campaign management, speechwriting, and even policy development. While this might seem efficient, it also raises concerns about the potential for algorithmic decision-making to replace human judgment and oversight. This could lead to a decrease in transparency and accountability, as well as a loss of public participation in political processes.

- New Forms of Political Engagement: Generative AI can also empower citizens and create new avenues for political participation. For example, it can be used to create interactive simulations that allow people to explore different policy options or to develop citizen-led initiatives that address local concerns.

This has the potential to foster greater civic engagement and a more informed and active citizenry.

Prioritizing Ethical Considerations and Societal Well-being

To mitigate the risks and harness the opportunities of generative AI, it is crucial to prioritize ethical considerations and societal well-being in the development and deployment of these technologies. This requires a multi-pronged approach that involves:

- Transparency and Accountability: Ensuring transparency in the development and deployment of generative AI models is essential for building trust and holding developers accountable. This involves providing clear information about the data used to train models, the algorithms employed, and the potential biases or limitations.

It also requires establishing mechanisms for auditing and oversight to ensure that AI systems are operating as intended and are not being used for harmful purposes.

- Regulation and Governance: Effective regulation and governance frameworks are essential to ensure that generative AI is used responsibly and ethically. This involves establishing clear guidelines for the development and deployment of these technologies, addressing issues like data privacy, algorithmic bias, and the potential for misuse.

It also requires ongoing dialogue and collaboration between policymakers, technologists, and civil society to develop appropriate regulations that balance innovation with ethical considerations.

- Education and Awareness: Raising public awareness about the capabilities and limitations of generative AI is crucial for fostering informed decision-making and critical thinking. This involves educating citizens about the potential risks and benefits of these technologies, as well as equipping them with the skills and knowledge to discern fact from fiction and to navigate the complex information landscape.

It also requires promoting media literacy and critical thinking skills to help people identify and evaluate AI-generated content.
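The transparency item above calls for clear information about training data, algorithms, and known limitations. One common way to package that information is a "model card" published alongside a model. The sketch below shows a minimal version as a Python dataclass; the field names and the completeness check are assumptions chosen for illustration, not a standard schema, and the example values are invented.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    """Minimal, hypothetical disclosure record for a generative model."""
    model_name: str
    developer: str
    architecture: str
    training_data: str
    intended_uses: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)
    known_biases: list = field(default_factory=list)
    output_labeling: str = ""  # how generated media is marked (watermark, metadata, etc.)

    def missing_fields(self):
        """Names of fields left empty, so an auditor can see what was not disclosed."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def to_text(self):
        """Render the card as plain text for publication."""
        lines = []
        for f in fields(self):
            value = getattr(self, f.name)
            if isinstance(value, list):
                value = "; ".join(value)
            lines.append(f"{f.name.replace('_', ' ').title()}: {value or '(not disclosed)'}")
        return "\n".join(lines)


card = ModelCard(
    model_name="example-image-model",
    developer="Example Lab",
    architecture="latent diffusion (text-to-image)",
    training_data="licensed stock photos and public-domain images",
    known_limitations=["can render legible but false signage and documents"],
)
print(card.missing_fields())  # ['intended_uses', 'known_biases', 'output_labeling']
print(card.to_text())
```

Surfacing the undisclosed fields explicitly, rather than silently omitting them, is the design choice that makes such a record useful for oversight: a blank "Known Biases" entry is itself information a regulator or journalist can ask about.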