Deepfake face swap attacks are on the rise, posing a growing threat to individuals, institutions, and society as a whole. These attacks, which use artificial intelligence to seamlessly swap faces in videos and images, are becoming increasingly sophisticated and accessible, blurring the line between reality and fabrication.

The potential for misuse is alarming, ranging from financial scams and political manipulation to the spread of misinformation and the erosion of trust in digital content.

The rise of deepfakes can be attributed to advancements in machine learning algorithms and the availability of powerful computing resources. This technology has become readily available, empowering individuals with malicious intent to create highly convincing deepfakes with minimal technical expertise.

The motivations behind these attacks are diverse, ranging from financial gain and political agendas to personal vendettas and the pursuit of notoriety.

The Rise of Deepfake Face Swap Attacks

The world of technology is constantly evolving, and with each new advancement comes new challenges. One such challenge is the rise of deepfake technology, which has the potential to be both beneficial and harmful. Deepfakes are synthetic media, often videos or images, that are created using artificial intelligence (AI) to manipulate or generate realistic-looking content.

While deepfakes have legitimate applications in entertainment and education, they are increasingly used for malicious purposes, particularly in the form of face swap attacks. These attacks use deepfake technology to swap one person's face onto another's body, creating a convincing illusion that the individual in the video or image is someone they are not.

This can have serious consequences, ranging from personal embarrassment and reputational damage to financial fraud and political manipulation.

Technical Advancements Fueling Deepfake Face Swap Attacks

The accessibility and sophistication of deepfake technology have significantly increased in recent years, making it easier for individuals with limited technical expertise to create convincing deepfakes. Several factors have contributed to this trend:

- Open-source deepfake software: The availability of open-source software tools like DeepFaceLab has made it easier for individuals to learn and experiment with deepfake technology. This accessibility has lowered the barrier to entry, allowing more people to create and manipulate media.

- Advancements in AI algorithms: The development of more powerful and sophisticated AI algorithms has significantly improved the realism of deepfakes. These algorithms can now generate more realistic facial expressions, movements, and lighting conditions, making it increasingly difficult to distinguish deepfakes from real footage.

- Increased computing power: The availability of affordable and powerful computing resources, such as GPUs and cloud computing services, has enabled individuals to train deepfake models more efficiently. This has made it possible to create high-quality deepfakes with less time and effort.

Motivations Behind Deepfake Face Swap Attacks

The motivations behind deepfake face swap attacks can vary widely, ranging from personal vendettas to financial gain and political manipulation. Some common motives include:

- Personal revenge: Deepfakes can be used to spread false information about individuals, damaging their reputation and causing emotional distress. This can be motivated by personal grudges or a desire to seek revenge for perceived wrongs.

- Financial gain: Deepfakes can be used to create fraudulent videos or images, such as fake testimonials or endorsements, which can be used to deceive individuals and organizations into making financial decisions. This can lead to significant financial losses for victims.

- Political manipulation: Deepfakes can be used to create fake videos or images that portray politicians or public figures in a negative light, potentially swaying public opinion and influencing elections. This can undermine trust in democratic institutions and lead to social unrest.

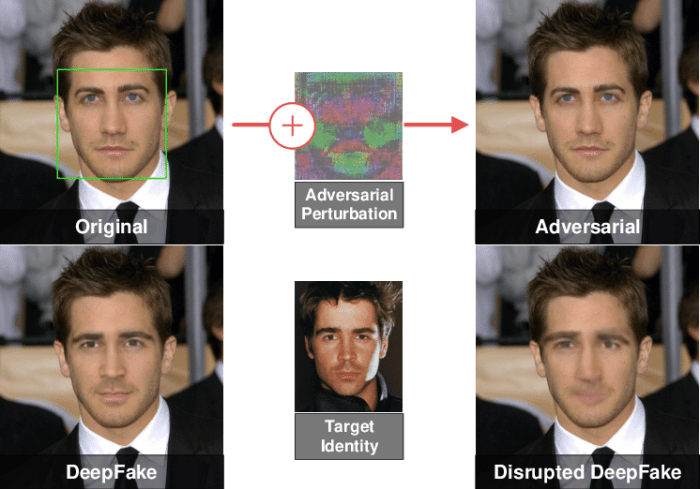

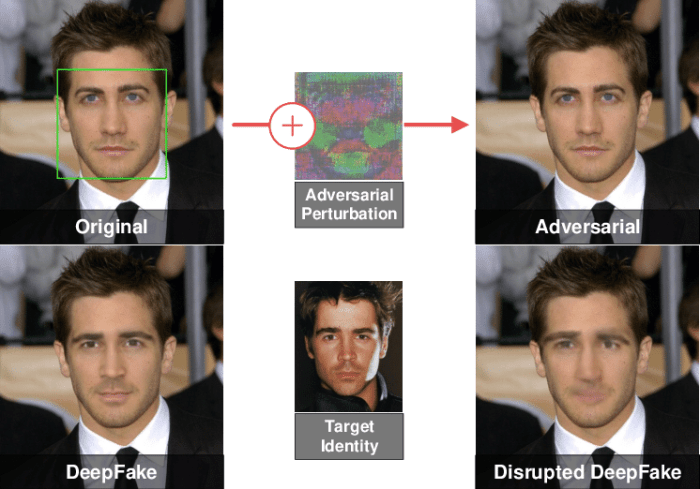

How Deepfake Face Swap Attacks Work

Deepfake face swap attacks are a growing concern, with the potential to cause significant harm. Understanding how these attacks work is crucial for developing effective countermeasures. This section delves into the process of creating deepfakes and examines the ethical implications of this technology.

Deepfake face swap attacks involve using artificial intelligence (AI) to manipulate videos and images, replacing one person’s face with another. This process is often achieved through deep learning algorithms, which are trained on massive datasets of images and videos.

Deepfake Algorithms and Their Capabilities

Deepfake algorithms utilize various techniques, each with its own strengths and limitations. Some common approaches include:

- Autoencoders: These algorithms learn to compress an image into a compact representation and reconstruct it, enabling them to generate realistic faces by combining features from different sources.

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that compete with each other. The generator creates fake images, while the discriminator tries to identify them. This adversarial process leads to increasingly realistic deepfakes.

- Convolutional Neural Networks (CNNs): CNNs are designed for image processing. They can be trained to recognize patterns in images, and in deepfake systems they typically serve as the building blocks of the networks that detect, encode, and generate faces.
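
The adversarial setup described in the GAN item above is usually summarized by the minimax objective from the original GAN formulation. The symbols are not from this article and are introduced here only for illustration: G is the generator, D the discriminator, p_data the distribution of real images, and p_z the noise distribution the generator samples from. The second line is a simplified description of the shared-encoder autoencoder design used by many open-source face swap tools, assuming a single encoder Enc trained on both identities and a decoder Dec_B trained only on person B.

```latex
\min_{G}\,\max_{D}\; V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]

\hat{x}_{A \to B} = \mathrm{Dec}_{B}\big(\mathrm{Enc}(x_{A})\big)
```

Read loosely, the discriminator is trained to raise V by scoring real images high and generated ones low, while the generator is trained to lower it by producing images the discriminator accepts. In the second line, a frame of person A is encoded and then decoded with person B's decoder, which yields B's face with A's pose and expression.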

The capabilities of these algorithms are constantly evolving, with newer models becoming increasingly sophisticated and capable of producing more convincing deepfakes. This advancement raises concerns about the potential for misuse and the difficulty in detecting these attacks.

Ethical Considerations

The development and widespread use of deepfake technology have raised significant ethical concerns. Some of the key issues include:

- Misinformation and Propaganda: Deepfakes can be used to spread false information, manipulate public opinion, and undermine trust in institutions.

- Reputation Damage: Deepfakes can be used to create damaging content that tarnishes someone’s reputation or portrays them in a negative light.

- Privacy Violations: Deepfake technology can be used to create realistic videos of individuals without their consent, violating their privacy and potentially leading to emotional distress.

- Legal Implications: The legal implications of deepfakes are still being debated, with questions surrounding liability, copyright infringement, and the potential for malicious use.

As deepfake technology continues to advance, it is crucial to address these ethical considerations and develop safeguards to prevent its misuse.

The Impact of Deepfake Face Swap Attacks

The rise of deepfake face swap attacks presents a significant threat to our digital world, impacting individual privacy, security, and social order. Deepfakes have the potential to manipulate and distort reality, making it increasingly difficult to discern truth from falsehood.

Privacy and Security Implications

Deepfake face swap attacks can severely compromise individual privacy and security. By manipulating visual data, attackers can create convincing deepfakes that can be used for malicious purposes, such as:

- Identity Theft: Deepfakes can be used to impersonate individuals, enabling attackers to access sensitive information or financial accounts.

- Reputation Damage: Deepfakes can be used to create fabricated videos or images that portray individuals in a negative light, damaging their reputation and causing emotional distress.

- Blackmail and Extortion: Attackers can use deepfakes to create compromising content and threaten individuals with its release unless they comply with demands.

- Surveillance and Spying: Deepfakes can be used to impersonate a trusted person, for example on a video call or at a face-based identity check, allowing attackers to gather information about a target or gain access to private spaces.

Misinformation and Social Disruption

Deepfake face swap attacks have the potential to spread misinformation and disrupt social order. By manipulating visual data, attackers can create fabricated content that can be used to:

- Manipulate Public Opinion: Deepfakes can be used to create false narratives that can sway public opinion on political, social, or economic issues.

- Undermine Trust in Institutions: Deepfakes can be used to create fabricated videos of politicians or other public figures making false statements, eroding public trust in institutions.

- Incite Violence and Hate: Deepfakes can be used to create fabricated videos that portray individuals or groups in a negative light, inciting violence and hate.

- Interfere with Elections: Deepfakes can be used to create fabricated videos of candidates making false statements, potentially influencing the outcome of elections.

Real-World Examples of Deepfake Face Swap Attacks

Deepfake face swap attacks have already been used in real-world scenarios, demonstrating their potential for harm.

- The Case of the Fake Barack Obama Video: In 2018, a video circulated online that appeared to show former President Barack Obama making offensive remarks. The video was a deepfake, produced by BuzzFeed with the filmmaker Jordan Peele as a public service announcement, and it highlighted the potential for deepfakes to be used to spread misinformation and sow discord.

- The Case of the Fake CEO Voice: In 2019, criminals reportedly used AI-generated audio imitating a chief executive's voice to persuade the head of a UK-based energy firm to authorize a fraudulent transfer of about €220,000. This incident demonstrated the potential for deepfakes to be used for financial fraud.

- The Case of the Fake Celebrity Pornography: In recent years, there have been numerous cases of deepfakes being used to create fake pornography featuring celebrities without their consent. This practice has raised concerns about the exploitation of individuals and the potential for deepfakes to be used for revenge porn.

Defending Against Deepfake Face Swap Attacks

Deepfake face swap attacks are a growing threat, and it is crucial to understand the methods used to detect and prevent them. This section will explore current detection and prevention methods, examine emerging technologies, and highlight the importance of education and awareness in mitigating the risks of deepfake attacks.

Current Methods for Detecting and Preventing Deepfake Face Swap Attacks

Several methods are currently employed to detect and prevent deepfake face swap attacks. These methods are often used in combination to achieve greater accuracy and effectiveness.

- Deepfake Detection Algorithms: These algorithms use machine learning to analyze various visual cues, such as inconsistencies in facial expressions, subtle artifacts, and inconsistencies in lighting and shadows, to identify deepfakes. Examples include:

  - Face Morphing Detection: Detects inconsistencies in the facial geometry and texture, such as unnatural warping or blurring around the edges of the swapped face.

  - Blink Detection: Analyzes the frequency and duration of blinks, which are often unnatural in deepfakes due to the difficulty of replicating them accurately.

- Media Forensics Techniques: These techniques analyze the metadata and underlying structure of media files to identify signs of manipulation or tampering. This includes:

  - Pixel-Level Analysis: Examines the distribution of pixel values and patterns to detect inconsistencies or artifacts introduced by deepfake software.

  - Compression Artifacts: Identifies patterns in the compressed data that can indicate manipulation or tampering.

- Biometric Verification: This involves using biometric data, such as facial recognition or voice analysis, to authenticate the identity of the person in the video or image. While not foolproof, it can help to identify deepfakes by comparing the biometric data to known records.
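
To make the blink detection idea above concrete, here is a minimal Python sketch based on the eye aspect ratio (EAR), a widely used landmark-based measure of how open an eye is. It assumes that six (x, y) eye landmarks per frame are already available from some face landmark detector, which is not shown. The function names, the 0.2 threshold, and the two-frame minimum are illustrative assumptions, not values taken from any particular detection product.

```python
import math


def eye_aspect_ratio(eye):
    """Return the eye aspect ratio for six (x, y) landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid. The ratio drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    horizontal = math.dist(p1, p4)
    if horizontal == 0:
        raise ValueError("eye corners coincide; landmarks are degenerate")
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least min_frames consecutive frames below threshold.

    threshold and min_frames are assumed, illustrative values.
    """
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, threshold=0.2, min_frames=2):
    """Convert a per-frame EAR series into a blink rate."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_series, threshold, min_frames) / minutes
```

A detector built on this would compare the measured blink rate and blink durations against what is plausible for a real person. On its own this is a weak signal, since newer deepfake models can reproduce blinking, so it is best treated as one feature among several.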

Emerging Technologies and Strategies for Combating Deepfakes

Researchers and developers are constantly working on new technologies and strategies to combat deepfakes. These advancements aim to enhance detection capabilities, develop more robust authentication mechanisms, and create new methods for preventing the creation of deepfakes in the first place.

- Generative Adversarial Networks (GANs): GANs are a type of machine learning model that can be used to create synthetic data, including deepfakes. However, researchers are exploring the use of GANs to generate “anti-deepfakes” that can be used to train deepfake detection models. These anti-deepfakes are designed to mimic the characteristics of deepfakes, allowing detection models to learn to identify them more effectively.

- Blockchain Technology: Blockchain can be used to create a tamper-evident record of media files, making it more difficult to manipulate or alter them without detection. By tracking the history of a media file through the blockchain, it is possible to verify its authenticity and identify any unauthorized modifications.

- Watermarking Techniques: Watermarking involves embedding hidden information into media files, such as a unique identifier or timestamp. This can help to authenticate the origin and integrity of the file and make it more difficult to create deepfakes without detection.
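
The record-keeping idea behind the blockchain item above can be illustrated with a toy hash-chained log in Python. This is a sketch under stated assumptions, not a real blockchain: there is no network, consensus, or signing, and the `ProvenanceLog` class and its method names are hypothetical. It only shows why chaining hashes makes later edits to the record detectable.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


class ProvenanceLog:
    """Append-only log of media digests in which each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(body: dict) -> str:
        # Serialize with sorted keys so the same body always hashes the same way.
        return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

    def register(self, media_bytes: bytes, label: str) -> dict:
        """Record the digest of a media file and link it to the previous entry."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {
            "label": label,
            "media_digest": sha256_hex(media_bytes),
            "prev_hash": prev_hash,
        }
        entry = dict(body, entry_hash=self._entry_hash(body))
        self.entries.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("label", "media_digest", "prev_hash")}
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["entry_hash"] != self._entry_hash(body):
                return False
            prev_hash = entry["entry_hash"]
        return True

    def is_registered(self, media_bytes: bytes) -> bool:
        """Check whether this exact byte sequence was registered earlier."""
        digest = sha256_hex(media_bytes)
        return any(e["media_digest"] == digest for e in self.entries)


log = ProvenanceLog()
log.register(b"original video bytes", "press-briefing.mp4")
print(log.is_registered(b"original video bytes"))  # True
print(log.is_registered(b"tampered video bytes"))  # False
print(log.chain_is_intact())                       # True
```

Because a cryptographic hash changes when any byte changes, a benign re-encode of the same video would also fail the check. A practical system would therefore need a policy for legitimate edits, or would pair the log with watermarking as described in the last item.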

The Role of Education and Awareness in Mitigating Deepfake Attacks

Education and awareness play a crucial role in mitigating the risks of deepfake attacks. By understanding the technology behind deepfakes, individuals can become more discerning consumers of online content and less susceptible to manipulation.

- Critical Thinking and Media Literacy: Encouraging critical thinking skills and media literacy is essential. Individuals should be able to question the authenticity of online content, consider the source, and look for signs of manipulation or tampering.

- Public Awareness Campaigns: Public awareness campaigns can educate the public about the dangers of deepfakes and provide guidance on how to identify them. This can help to reduce the spread of misinformation and prevent the misuse of deepfake technology.

- Ethical Considerations: Open discussions about the ethical implications of deepfake technology are necessary. This includes exploring the potential for misuse, the need for responsible development and deployment, and the importance of protecting individuals from harm.

The Future of Deepfake Face Swap Attacks

The rapid advancement of deepfake technology, particularly in face swap attacks, raises significant concerns about its potential future impact on society. As deepfakes become more sophisticated and accessible, it is crucial to understand the evolving landscape of this technology and its implications.

Future Trends in Deepfake Technology

The future of deepfake technology is likely to witness further advancements in its sophistication and accessibility. These advancements will likely lead to more realistic and convincing deepfakes, making it even more challenging to distinguish between genuine and fabricated content.

- Improved Deepfake Algorithms: Ongoing research and development in artificial intelligence (AI) and machine learning (ML) will likely lead to more sophisticated deepfake algorithms capable of generating even more realistic and believable face swaps. This could involve advancements in techniques like Generative Adversarial Networks (GANs) and deep neural networks, resulting in deepfakes that are harder to detect.

- Increased Accessibility: Deepfake tools and software are becoming increasingly accessible, with user-friendly interfaces and readily available online resources. This could enable individuals with limited technical expertise to create and distribute deepfakes, potentially exacerbating the spread of misinformation and malicious content.

- Real-Time Deepfakes: The development of real-time deepfake capabilities could pose significant challenges for security and privacy. Imagine scenarios where individuals could be impersonated in real-time video conferences or live broadcasts, raising concerns about identity theft, fraud, and social manipulation.

Efforts to Regulate Deepfake Technology

The potential for deepfakes to cause harm has led to growing calls for regulation and governance of this technology. Governments and organizations are actively exploring ways to mitigate the risks associated with deepfakes.

- Legislation and Policy: Several jurisdictions, including the United States and the European Union, are considering legislation to address the misuse of deepfakes. These regulations may focus on requiring disclosure of deepfakes, imposing penalties for malicious use, and promoting ethical development and use of deepfake technology.

- Industry Initiatives: Tech companies and industry organizations are collaborating to develop ethical guidelines and best practices for the creation and use of deepfakes. These initiatives aim to foster responsible development and mitigate potential harms associated with this technology.

- Public Awareness and Education: Raising public awareness about deepfakes and their potential impact is crucial. Educating individuals about how to identify and critically evaluate deepfake content can help mitigate the spread of misinformation and manipulation.

Impact of Deepfakes on Industries and Sectors

The implications of deepfake technology extend beyond individual privacy and security. Deepfakes have the potential to disrupt various industries and sectors, requiring adaptation and mitigation strategies.

- Politics and Elections: Deepfakes could be used to manipulate public opinion, spread disinformation, and undermine democratic processes. The potential for deepfakes to create fabricated videos of politicians making false statements or engaging in inappropriate behavior raises serious concerns about election integrity and political discourse.

- Media and Journalism: Deepfakes pose challenges for media organizations in verifying the authenticity of content and ensuring the credibility of their reporting. The spread of deepfakes could erode public trust in traditional media outlets and contribute to the spread of misinformation.

- Finance and Security: Deepfakes could be used to perpetrate financial fraud, impersonate individuals for unauthorized transactions, or compromise security systems. The potential for deepfakes to bypass authentication systems and deceive security personnel raises serious concerns about financial security and identity theft.