AI Models for Low-Resource Languages: Multilingual LLMs

Multilingual large language models (LLMs) are changing the way we approach language processing. Developing AI models for languages with limited data resources has long been a hurdle in the field. With the rise of multilingual LLMs, we’re seeing a shift toward embracing and supporting language diversity.

These models could help close this gap and bring AI capabilities to languages that have so far been underserved.

Imagine a world where AI can understand and interact in languages that are spoken by millions of people but have limited digital resources. This is the vision behind multilingual LLMs. By training a single model on large amounts of text from many languages, we can build systems that are more inclusive and better able to handle the nuances of diverse linguistic communities.

The Challenge of Low-Resource Languages in AI

The world is a diverse tapestry of languages, each holding unique cultural and historical significance. However, the field of artificial intelligence (AI) has historically focused on high-resource languages like English, Chinese, and Spanish, leaving many languages with limited resources behind.


This imbalance poses significant challenges to AI development, hindering the advancement of AI applications for speakers of low-resource languages.

Examples of Low-Resource Languages and Their Impact on AI Development

Low-resource languages are those with limited digital resources, including text data, dictionaries, and labeled datasets. These languages often lack the vast amount of data required to train effective AI models. For example, languages like Aymara, spoken by indigenous communities in the Andes region, or Guarani, spoken in Paraguay and parts of Argentina, have limited digital resources, making it challenging to develop AI models that can understand and process these languages. This scarcity of resources significantly impacts AI development in several ways:

- Limited Availability of Pre-trained Models: Most pre-trained language models, which serve as the foundation for various AI applications, are trained on high-resource languages. This limits their ability to effectively handle low-resource languages.

- Challenges in Data Collection and Annotation: Gathering and annotating large datasets for low-resource languages is a laborious and costly process. The lack of trained linguists and resources further exacerbates the challenge.

- Limited Access to AI Applications: The absence of robust AI models for low-resource languages hinders the development of essential applications like machine translation, speech recognition, and text summarization, limiting access to information and technology for speakers of these languages.

Limitations of Current AI Models in Handling Low-Resource Languages

Current AI models face significant limitations when dealing with low-resource languages:

- Data Sparsity: AI models require vast amounts of data to learn effectively. The scarcity of data for low-resource languages makes it challenging to train accurate and reliable models.

- Language-Specific Features: Low-resource languages often exhibit unique linguistic features, such as complex morphology, diverse dialects, and limited standardized writing systems. These features can pose significant challenges for AI models trained on high-resource languages.

- Bias and Fairness: AI models trained on limited data can exhibit bias towards high-resource languages, leading to inaccurate and unfair results for speakers of low-resource languages.

Multilingual LLMs for Low-Resource Languages

Multilingual large language models (LLMs) are a significant advance in addressing the challenges posed by low-resource languages. Because a single model covers many languages, knowledge it learns from data-rich languages can support languages with little data. This helps lower linguistic barriers to communication and knowledge sharing across language communities.

Advantages of Multilingual LLMs

Multilingual LLMs possess several key advantages over their single-language counterparts. These advantages are particularly crucial in addressing the challenges of low-resource languages:

- Enhanced Transfer Learning: Multilingual LLMs carry knowledge learned from high-resource languages over to low-resource ones. This cross-lingual transfer can substantially improve performance on tasks such as machine translation, text summarization, and question answering for languages with limited data.

- Improved Generalization: By learning from a diverse range of languages, multilingual LLMs develop a broader representation of language structure and semantics. This helps them handle related dialects and languages with little training data, although languages entirely absent from pre-training generally remain difficult.

- Reduced Development Costs: Developing separate models for each language is expensive and time-consuming. A single multilingual model that supports many languages can lower costs and speed up deployment.

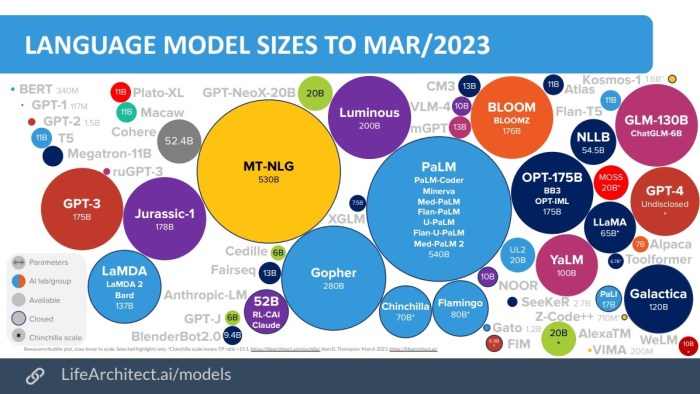

Examples of Successful Multilingual LLM Projects

The potential of multilingual LLMs is being realized in various real-world applications:

- Google’s mBERT: This multilingual BERT model was trained on Wikipedia text in 104 languages. It showed surprisingly strong zero-shot cross-lingual transfer on tasks such as natural language inference, named entity recognition, and part-of-speech tagging.

- Facebook AI’s XLM: This cross-lingual language model was released in checkpoints covering 15, 17, and 100 languages. It introduced a translation language modeling objective and improved results in cross-lingual text classification and machine translation.

- Facebook AI’s XLM-R (XLM-RoBERTa): This model was trained on filtered CommonCrawl data in 100 languages. It has performed well on cross-lingual text classification, named entity recognition, and question answering, with notable gains for lower-resource languages.

Data Augmentation and Transfer Learning for Low-Resource Languages

The scarcity of data poses a significant challenge for training AI models for low-resource languages. Data augmentation and transfer learning offer powerful strategies to address this issue, enhancing model performance and enabling wider language coverage.

Data Augmentation Strategies

Data augmentation techniques create synthetic data from existing data, expanding the training dataset and improving model generalization.

- Back-Translation: Monolingual text in the low-resource language is machine-translated into a high-resource language. The resulting synthetic sentence pairs are then added to the training data for translation models. A related round-trip variant translates a sentence into a high-resource language and back into the original language, producing paraphrases that increase the diversity of the training data.

- Word Embeddings: Pre-trained word embeddings for the target language, built with methods such as Word2Vec or GloVe, can be used to replace words with their nearest neighbors in embedding space. This generates new variants of existing sentences that often stay close in meaning and captures semantic relationships between words.

- Data Augmentation with Rule-Based Techniques: Applying linguistic rules, such as synonym replacement or morphological variations, can generate new sentences from existing ones. This approach is particularly effective for languages with regular morphology.
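A minimal Python sketch of three of these strategies follows. It is a toy built on stated assumptions. The hypothetical dictionaries `SW_TO_EN` and `EN_TO_SW` stand in for a real machine translation system, and `EMBEDDINGS` stands in for pre-trained word vectors. Every function name is illustrative. Word-by-word lookup ignores word order, so the synthetic English side is not fluent. The point is the data flow: real Swahili text paired with a synthetic translation, a round-trip paraphrase, a nearest-neighbor substitution, and a rule-based synonym swap.

```python
import math
import random

# Hypothetical toy lexicons standing in for real MT systems.
# Word-by-word lookup ignores word order; unknown words are kept as-is.
SW_TO_EN = {"habari": "news", "njema": "good", "nzuri": "good", "asubuhi": "morning"}
EN_TO_SW = {"news": "habari", "good": "nzuri", "morning": "asubuhi"}

# Hypothetical 2-D "embeddings"; real ones would be pre-trained vectors.
EMBEDDINGS = {
    "njema": [0.9, 0.1],
    "nzuri": [0.85, 0.2],
    "asubuhi": [0.1, 0.9],
}


def translate(sentence: str, lexicon: dict[str, str]) -> str:
    """Toy translator: map each word through the lexicon."""
    return " ".join(lexicon.get(word, word) for word in sentence.split())


def back_translate(monolingual_sw: list[str]) -> list[tuple[str, str]]:
    """Pair each real Swahili sentence with a synthetic English source."""
    return [(translate(s, SW_TO_EN), s) for s in monolingual_sw]


def round_trip(sentence: str) -> str:
    """Swahili -> English -> Swahili, which can yield a paraphrase."""
    return translate(translate(sentence, SW_TO_EN), EN_TO_SW)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def nearest_neighbor(word: str) -> str:
    """Return the other vocabulary word closest to `word` in embedding space."""
    others = [w for w in EMBEDDINGS if w != word]
    return max(others, key=lambda w: cosine(EMBEDDINGS[word], EMBEDDINGS[w]))


def synonym_replace(sentence: str, synonyms: dict[str, list[str]], rng: random.Random) -> str:
    """Rule-based augmentation: swap one word for a listed synonym, if any apply."""
    words = sentence.split()
    candidates = [i for i, w in enumerate(words) if w in synonyms]
    if not candidates:
        return sentence
    i = rng.choice(candidates)
    words[i] = rng.choice(synonyms[words[i]])
    return " ".join(words)


if __name__ == "__main__":
    print(back_translate(["habari njema"]))
    print(round_trip("habari njema"))   # habari nzuri
    print(nearest_neighbor("njema"))    # nzuri
    print(synonym_replace("habari njema", {"njema": ["nzuri"]}, random.Random(0)))
```

In a real pipeline, the synthetic pairs from `back_translate` would be mixed with genuine parallel data. Augmented sentences are worth checking with native speakers or filtering, because a substitution can change the meaning of a sentence.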

Transfer Learning for Multilingual LLMs

Transfer learning involves leveraging knowledge from a pre-trained model in a high-resource language to improve performance in a low-resource language.

- Cross-Lingual Transfer Learning: A model is first trained on a high-resource language and then fine-tuned on a low-resource language. The pre-trained weights provide a starting point that is adapted to the target language.

- Multilingual Language Models: These models are trained on multiple languages simultaneously, capturing cross-lingual relationships and facilitating knowledge transfer between languages. Examples include mBERT and XLM-R.

- Zero-Shot Cross-Lingual Transfer: A multilingual model is fine-tuned on labeled task data in one language (often English). It is then applied to the same task in other languages for which no labeled examples exist. This relies on the model’s ability to generalize from what it learned across languages during pre-training.
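The zero-shot idea can be illustrated with a toy Python sketch. It assumes that English and Swahili sentences have already been mapped into a shared embedding space, which is what a multilingual encoder such as mBERT or XLM-R aims to provide. The vectors below are invented for illustration. A simple nearest-centroid classifier stands in for a fine-tuned task head: it is fit on English labels only and then applied to Swahili inputs. It is not fine-tuning, only a way to show where the transfer comes from.

```python
# Hypothetical sentence embeddings in a shared multilingual space.
# In practice they would come from a multilingual encoder; here they are
# hand-made 2-D vectors.
ENGLISH_TRAIN: list[tuple[list[float], str]] = [
    ([0.9, 0.1], "positive"),   # e.g. "good news"
    ([0.8, 0.3], "positive"),
    ([0.1, 0.9], "negative"),   # e.g. "bad news"
    ([0.2, 0.7], "negative"),
]
SWAHILI_TEST: dict[str, list[float]] = {
    "habari njema": [0.7, 0.25],   # "good news"
    "habari mbaya": [0.3, 0.85],   # "bad news"
}


def centroids(examples: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    """Average the vectors of each label (a nearest-centroid classifier)."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for vec, label in examples:
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, x in enumerate(vec):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [x / counts[label] for x in acc] for label, acc in sums.items()}


def predict(vec: list[float], cents: dict[str, list[float]]) -> str:
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    return min(cents, key=lambda label: sum((a - b) ** 2 for a, b in zip(vec, cents[label])))


if __name__ == "__main__":
    model = centroids(ENGLISH_TRAIN)  # "trained" on English labels only
    for sentence, vec in SWAHILI_TEST.items():
        print(sentence, "->", predict(vec, model))
```

The classifier never sees a Swahili label, so any transfer comes entirely from the shared space. With real encoders the cross-lingual alignment is imperfect, which is one reason zero-shot accuracy typically trails fine-tuning on even a small amount of target-language data.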

Performance Improvements

Data augmentation and transfer learning can substantially improve the performance of AI models for low-resource languages.

- Improved Accuracy: By increasing the amount of training data and leveraging pre-trained models, these techniques lead to higher accuracy in tasks like machine translation, text classification, and speech recognition.

- Enhanced Generalization: The synthetic data generated through augmentation and the knowledge transferred from high-resource languages improve model generalization, enabling better performance on unseen data.

- Reduced Training Time: Transfer learning allows models to start from a pre-trained set of weights, reducing the training time and resources required to achieve satisfactory performance.

Evaluation and Benchmarking of AI Models for Low-Resource Languages

Evaluating the performance of AI models designed for low-resource languages presents unique challenges due to the limited availability of training data and the need to account for diverse linguistic and cultural nuances. To ensure the effectiveness and fairness of these models, a comprehensive evaluation framework is crucial.

Key Metrics for Evaluating AI Model Performance

A set of key metrics is essential for assessing the performance of AI models in low-resource language settings. These metrics help quantify the model’s accuracy, fluency, and cultural sensitivity.

- Accuracy: Measures how well the model correctly predicts the intended output, such as classifying text, translating languages, or generating text. Examples of accuracy metrics include precision, recall, F1-score, and BLEU score (for machine translation).

- Fluency: Evaluates the grammatical correctness and naturalness of the generated text. Metrics like perplexity and readability scores are commonly used to assess fluency.

- Cultural Sensitivity: Assesses the model’s ability to handle cultural nuances and avoid biases in its outputs. This is crucial for low-resource languages, where cultural context plays a significant role in communication.
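Two of the automatic metrics named above, F1-score and perplexity, are simple enough to compute directly. The following Python sketch uses illustrative function names of its own. It computes precision, recall, and F1 for one class treated as "positive". It computes perplexity as the exponential of the mean negative log-probability that a model assigned to each actual token. The per-token probabilities are assumed to come from whatever model is being evaluated. BLEU is omitted here.

```python
import math


def precision_recall_f1(gold: list[str], pred: list[str], positive: str) -> tuple[float, float, float]:
    """Precision, recall, and F1 for one class treated as 'positive'."""
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)
    fp = sum(1 for g, p in pairs if g != positive and p == positive)
    fn = sum(1 for g, p in pairs if g == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability given to each actual token."""
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    gold = ["pos", "pos", "neg", "neg"]
    pred = ["pos", "neg", "pos", "neg"]
    print(precision_recall_f1(gold, pred, positive="pos"))  # (0.5, 0.5, 0.5)
    print(perplexity([0.25, 0.25, 0.25, 0.25]))  # approximately 4.0
```

A model that assigns probability 0.25 to every token has perplexity 4, as if it were choosing uniformly among four options. Perplexity values are only comparable between models that share the same tokenization and evaluation text, because a different vocabulary changes what counts as a token. For BLEU, an established implementation such as sacreBLEU is generally preferable to hand-rolled code, since small differences in tokenization change the score.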

Comparison of AI Models and Their Performance

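This table compares three models on low-resource language tasks using the metrics above. The models are unnamed placeholders, so the figures illustrate how such a comparison is laid out rather than reporting results for specific systems.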

| Model | Language | Task | Accuracy (F1-score) | Fluency (Perplexity, lower is better) | Cultural Sensitivity |
|---|---|---|---|---|---|
| Model A | Amharic | Text Classification | 0.85 | 50 | Good |
| Model B | Swahili | Machine Translation (English to Swahili) | 0.78 | 65 | Moderate |
| Model C | Hindi | Text Generation | 0.82 | 45 | Excellent |

Importance of Diverse and Culturally Sensitive Evaluation Methods

Evaluating AI models for low-resource languages requires a multifaceted approach that goes beyond traditional metrics. Diverse and culturally sensitive evaluation methods are essential to ensure that the models are accurate, fair, and respectful of the linguistic and cultural context of the target language.

“The evaluation of AI models for low-resource languages should encompass a range of metrics that go beyond traditional accuracy measures. It is essential to consider cultural nuances, linguistic diversity, and the potential for bias in model outputs.”

- Human Evaluation: Involves having native speakers of the target language assess the model’s output for fluency, accuracy, and cultural appropriateness.

- Contextualized Evaluation: Takes into account the specific context of the task and the cultural background of the target language. For example, a translation model should be evaluated based on its ability to accurately convey cultural references and idioms.

- Bias Detection: Measures the model’s potential for bias, such as gender bias, racial bias, or cultural bias. This is particularly important for low-resource languages, where social and cultural factors can influence language use.
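Disaggregated evaluation is one simple and widely used form of bias detection. It computes the same metric separately for each subgroup, such as dialect, region, or speaker demographic, and then examines the gap between the best- and worst-served groups. The Python sketch below assumes that each test example already carries a group label. The group names, labels, and results are hypothetical, as are the function names.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, str, str]]) -> dict[str, float]:
    """records holds (group, gold_label, predicted_label) triples."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, gold, pred in records:
        total[group] += 1
        correct[group] += int(gold == pred)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(scores: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(scores.values()) - min(scores.values())


if __name__ == "__main__":
    # Hypothetical evaluation results tagged with invented dialect labels.
    results = [
        ("dialect_a", "pos", "pos"), ("dialect_a", "neg", "neg"),
        ("dialect_a", "pos", "pos"), ("dialect_a", "neg", "pos"),
        ("dialect_b", "pos", "neg"), ("dialect_b", "neg", "neg"),
        ("dialect_b", "pos", "pos"), ("dialect_b", "neg", "pos"),
    ]
    scores = accuracy_by_group(results)
    print(scores)               # {'dialect_a': 0.75, 'dialect_b': 0.5}
    print(largest_gap(scores))  # 0.25
```

A large gap does not explain its own cause. It does show where native-speaker review and additional data collection are most needed.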

Ethical Considerations and Societal Impact

The development and deployment of AI models for low-resource languages present a unique set of ethical considerations. While these models hold immense potential for promoting language diversity and inclusivity, they also raise concerns about potential biases, fairness, and the impact on cultural identity.

Potential Biases and Fairness Issues

AI models are trained on massive datasets, and if these datasets are imbalanced or reflect existing societal biases, the resulting models can perpetuate and amplify these biases. This is particularly relevant for low-resource languages, where data availability is often limited and may not accurately represent the diversity of the language community.

- Data Bias: Datasets used to train AI models for low-resource languages may be skewed towards certain regions, demographics, or dialects, leading to models that perform poorly for other sub-groups within the language community.

- Representation Bias: The lack of diverse representation in training data can result in models that misinterpret or misrepresent certain aspects of the language, potentially reinforcing stereotypes or cultural misunderstandings.

- Algorithmic Bias: Even with balanced data, the algorithms themselves can introduce bias, leading to unfair or discriminatory outcomes for certain users.
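A first check for data bias is to measure how a corpus is distributed across the groups it should cover and compare that distribution with a target mix, ideally one agreed on with the language community. The Python sketch below assumes that each document already carries a region or dialect tag. The tags, the target shares, the 0.5 threshold, and the function names are all hypothetical choices for illustration.

```python
from collections import Counter


def composition(labels: list[str]) -> dict[str, float]:
    """Share of the corpus belonging to each group label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(shares: dict[str, float], reference: dict[str, float],
                     min_ratio: float = 0.5) -> list[str]:
    """Groups whose corpus share is below min_ratio times their reference share.

    Groups missing from the corpus entirely count as having a share of 0.
    """
    return sorted(
        group for group, expected in reference.items()
        if shares.get(group, 0.0) < min_ratio * expected
    )


if __name__ == "__main__":
    # Hypothetical region tags for ten documents and a hypothetical target mix.
    corpus_regions = ["region_a"] * 7 + ["region_b"] * 2 + ["region_c"]
    target_mix = {"region_a": 0.4, "region_b": 0.3, "region_c": 0.3}
    shares = composition(corpus_regions)
    print(shares)
    print(underrepresented(shares, target_mix))  # ['region_c']
```

Iterating over the reference mix rather than the corpus means a group with no documents at all is still reported, which is often the most important case for low-resource dialects.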

Promoting Language Diversity and Cultural Understanding

Despite the challenges, AI models can be powerful tools for promoting language diversity and cultural understanding.

- Language Preservation: AI models can be used to document and preserve endangered languages, providing resources for language learners and revitalization efforts.

- Cross-Cultural Communication: AI-powered translation and communication tools can facilitate cross-cultural dialogue and understanding, breaking down language barriers and fostering connections between communities.

- Educational Resources: AI models can be used to create personalized learning materials and tools, making language learning more accessible and engaging for learners of low-resource languages.

Addressing Ethical Concerns

To mitigate the ethical risks associated with AI models for low-resource languages, it is crucial to adopt a responsible and inclusive approach to development and deployment.

- Data Collection and Curation: Prioritize the collection of diverse and representative data, working with language communities to ensure inclusivity and accuracy.

- Algorithmic Transparency: Develop transparent and explainable AI models that allow users to understand how decisions are made and identify potential biases.

- Community Engagement: Engage with language communities throughout the development process, seeking feedback and ensuring that models are aligned with cultural values and ethical considerations.