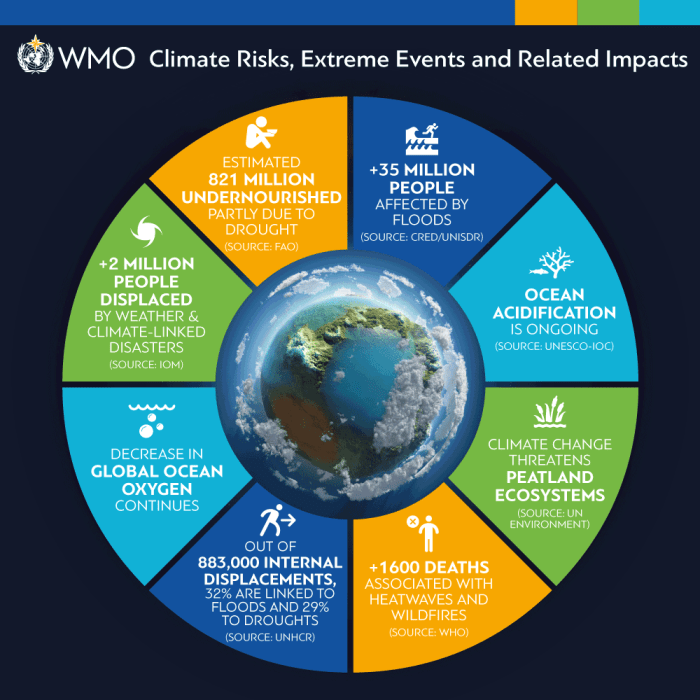

The "AI Falls Short: Climate Change Biased Datasets" study reveals a critical flaw in our approach to tackling climate change: the datasets used to train AI models are often biased, leading to inaccurate predictions and ineffective solutions. The study examines these biases and highlights how they can perpetuate existing inequalities and exacerbate environmental injustices.

Imagine a climate model trained on data primarily from developed countries, overlooking the unique challenges faced by developing nations. This skewed representation can lead to inaccurate predictions and ultimately hinder the development of effective climate action strategies. The study meticulously examines these biases, exploring their impact on climate change research and the development of AI-driven solutions.

The Problem of Biased Datasets in AI for Climate Change

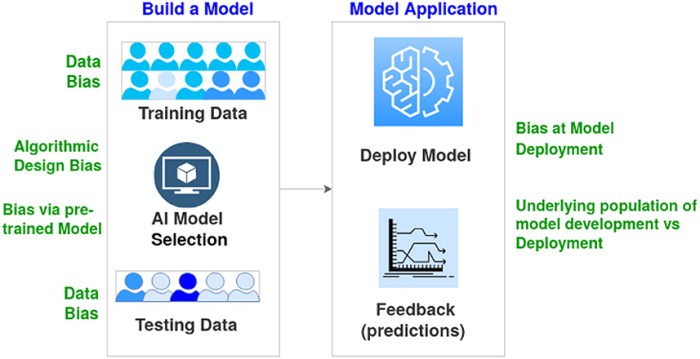

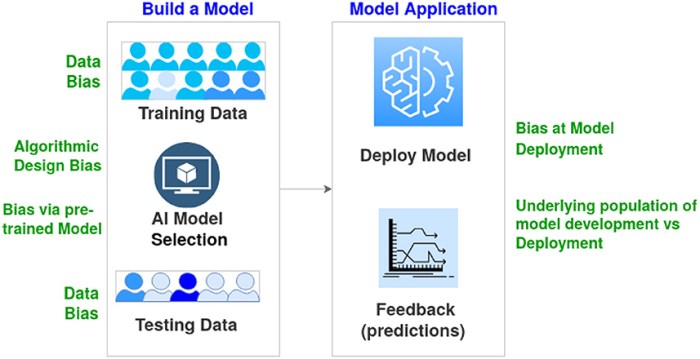

The increasing use of AI in climate change research and mitigation efforts raises concerns about the potential for biased datasets to influence the development and effectiveness of these models. AI models are only as good as the data they are trained on, and biases in datasets can lead to inaccurate predictions and ineffective solutions.

Geographical Representation

Geographical biases in datasets can result in underrepresentation of certain regions or climate zones. For example, datasets might contain more data from developed countries than developing countries, leading to models that are less accurate in predicting climate impacts in regions with less data.

This can lead to a disproportionate focus on mitigating climate change in developed countries, while neglecting the needs of developing countries that are often most vulnerable to climate change impacts.
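One way to make this kind of geographic skew visible is to compare each region's share of the dataset with a reference share, such as its share of population or land area. The sketch below is a minimal illustration, not a method taken from the study; the function name, the region labels, and the reference shares are all hypothetical.

```python
from collections import Counter


def representation_ratios(sample_regions, reference_shares):
    """Return (dataset share / reference share) for each reference region.

    A ratio below 1 suggests the region is underrepresented relative to
    the chosen reference; a ratio above 1 suggests overrepresentation.
    """
    counts = Counter(sample_regions)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample_regions must not be empty")
    ratios = {}
    for region, ref_share in reference_shares.items():
        dataset_share = counts.get(region, 0) / total
        ratios[region] = dataset_share / ref_share
    return ratios


# Hypothetical data: 10 records, 8 of them from developed countries.
records = ["developed"] * 8 + ["developing"] * 2
# Assumed reference: both groups should each make up half of the data.
reference = {"developed": 0.5, "developing": 0.5}

ratios = representation_ratios(records, reference)
for region, ratio in ratios.items():
    print(f"{region}: {ratio:.2f}")
```

In this invented example the developing group has a ratio well below 1, which flags it as underrepresented. The choice of reference share is itself a judgment call and should be stated alongside any result.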

Impacts of Biased Datasets on Climate Change Research

Biased datasets can significantly impact the development and application of AI models for climate change research. These biases can arise from various sources, including data collection methods, historical data limitations, and the inherent assumptions embedded in the data. The consequences of biased datasets can be far-reaching, affecting the accuracy, reliability, and ultimately, the effectiveness of AI-driven climate change solutions.

Influence on AI Model Development

Biased datasets can influence the development of AI models in several ways. For instance, if a dataset primarily represents data from developed countries with robust infrastructure and data collection practices, the resulting AI model might not accurately reflect the realities of developing countries with limited data availability or different climate patterns.

This can lead to inaccurate predictions and inadequate risk assessments, particularly for vulnerable communities.

- Limited Representation: Datasets often lack representation from diverse geographical locations, socioeconomic groups, and climate zones, leading to models that fail to generalize to real-world scenarios.

- Sampling Bias: Data collection methods can introduce bias by oversampling certain regions or underrepresenting specific populations, leading to skewed model outputs.

- Historical Data Limitations: Using historical data that does not capture recent climate change trends or extreme events can result in models that are not robust to future climate scenarios.

Consequences for Climate Change Solutions

The presence of biases in datasets can have significant consequences for the accuracy and reliability of AI-driven climate change solutions.

- Inaccurate Forecasts: Models trained on biased datasets may produce inaccurate forecasts, leading to inadequate preparation for extreme weather events or misallocation of resources.

- Misleading Risk Assessments: Biased datasets can result in inaccurate risk assessments, potentially underestimating or overestimating the vulnerability of certain communities to climate change impacts.

- Ineffective Policy Recommendations: AI models trained on biased data may generate policy recommendations that are not tailored to the specific needs and contexts of different regions or populations, leading to ineffective climate change mitigation and adaptation strategies.

Perpetuating Inequalities and Environmental Injustices

Biased datasets can exacerbate existing inequalities and environmental injustices by perpetuating discriminatory practices.

- Unequal Access to Resources: AI models trained on biased data may reinforce existing inequalities by allocating resources based on historical patterns that do not account for the changing needs of marginalized communities.

- Disproportionate Impacts: Biased models can deepen the harm to vulnerable populations, such as those living in areas with limited access to clean water, food, or healthcare, who are already disproportionately affected by climate change.

- Exclusion and Marginalization: Biased datasets can exclude marginalized communities from decision-making processes, perpetuating their vulnerability to climate change impacts.

Addressing Bias in AI for Climate Change

The use of AI in climate change research holds immense potential for accelerating our understanding of the climate crisis and developing effective solutions. However, the effectiveness of these AI models hinges on the quality and unbiased nature of the datasets they are trained on.

This section delves into strategies for mitigating bias in datasets used for climate change research, highlighting the crucial role of ethical considerations in AI development.

Improving Data Collection Methods

Data collection methods significantly impact the quality and representativeness of datasets. To minimize bias, we need to adopt strategies that ensure data is collected systematically and comprehensively.

- Standardization of data collection protocols: Implementing standardized protocols across different research groups ensures data consistency and comparability, reducing potential biases introduced by variations in data collection methods.

- Increased spatial and temporal resolution: Gathering data at finer spatial and temporal scales captures local variations and events that coarser resolutions might miss. This is particularly crucial for climate change research, as local variations in climate and environmental conditions can be significant.

- Incorporation of diverse data sources: Combining data from multiple sources, including satellite imagery, ground-based measurements, and citizen science initiatives, can provide a more comprehensive picture of climate change impacts. This approach helps reduce reliance on single data sources that might be prone to specific biases.

Ensuring Diverse Representation

Data diversity is crucial for building robust and representative AI models. Ensuring diverse representation in datasets means incorporating data from different regions, time periods, and socioeconomic backgrounds. This helps account for the diverse impacts of climate change and avoid over-reliance on data from specific regions or demographics.

- Geographic representation: Data should encompass a wide range of geographic locations, including both developed and developing countries, to capture the full spectrum of climate change impacts and responses.

- Temporal representation: Including data from different time periods helps capture long-term trends and assess the evolution of climate change impacts over time. This is essential for understanding historical trends and predicting future climate scenarios.

- Socioeconomic representation: Datasets should incorporate data from diverse socioeconomic backgrounds to understand how climate change affects different populations and communities. This includes data on income levels, access to resources, and vulnerability to climate-related hazards.

Addressing Historical Biases

Historical biases in data can perpetuate inequalities and distort AI models’ outputs. Recognizing and addressing these biases is crucial for ensuring equitable and reliable climate change research.

- Data cleaning and correction: Identifying and correcting historical biases in data requires careful analysis and potentially the use of data imputation techniques. Imputation replaces missing or inaccurate values with estimates based on available information.

- Developing bias mitigation algorithms: AI researchers are developing algorithms specifically designed to mitigate biases in datasets. These algorithms can identify and correct for historical biases, leading to more equitable and representative AI models.

- Collaboration with marginalized communities: Engaging with marginalized communities that have been historically underrepresented in data collection can help identify and address historical biases. This collaborative approach ensures that data reflects the lived experiences of diverse communities and leads to more inclusive AI models.
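As a concrete illustration of what a simple bias mitigation step can look like, the sketch below reweights samples so that each group contributes the same total weight during training, regardless of how many records it has. This is one common technique (inverse-frequency weighting), chosen here as an example; it is not presented as the approach used in the study, and the group labels are invented.

```python
from collections import Counter


def inverse_frequency_weights(groups):
    """Return one weight per sample so every group has equal total weight.

    Each sample in group g gets n_samples / (n_groups * count(g)), so
    samples from small groups get larger weights and the weights still
    sum to the number of samples.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    n_samples = len(groups)
    return [n_samples / (n_groups * counts[g]) for g in groups]


# Hypothetical dataset: 8 records from one region, 2 from another.
groups = ["north"] * 8 + ["south"] * 2
weights = inverse_frequency_weights(groups)

north_total = sum(w for g, w in zip(groups, weights) if g == "north")
south_total = sum(w for g, w in zip(groups, weights) if g == "south")
print(north_total, south_total)  # both groups now carry equal total weight
```

Weights like these can typically be passed to a training routine that accepts per-sample weights. Reweighting does not add information about the underrepresented group; it only stops the larger group from dominating the loss, so it complements rather than replaces better data collection.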

Ethical Considerations in AI Development for Climate Change

Ethical considerations are paramount in the development and deployment of AI for climate change. Data privacy, transparency, and accountability are key aspects of responsible AI development.

“AI systems should be designed and developed in a way that respects human rights, promotes social good, and avoids perpetuating existing inequalities.”

- Data privacy: Protecting the privacy of individuals whose data is used for AI development is essential. This involves implementing strong data security measures and obtaining informed consent from individuals before using their data.

- Transparency: AI models should be transparent in their workings, allowing for understanding of how they reach their conclusions. This includes documenting the data sources used, the algorithms employed, and the potential biases that might influence model outputs.

- Accountability: Establishing clear lines of accountability for AI systems is crucial. This means identifying who is responsible for the development, deployment, and potential consequences of AI models, ensuring that appropriate safeguards are in place to mitigate potential harms.

Case Studies of Biased Datasets in Climate Change Research

The impact of biased datasets on AI-driven climate change research is a growing concern. It’s crucial to understand how these biases manifest in real-world scenarios and the consequences they have on scientific findings. Examining specific case studies allows us to identify the types of biases present, their impact on results, and learn valuable lessons for future research.

Case Study: Predicting Forest Fire Risk

This case study explores a project aimed at predicting forest fire risk using AI models. The dataset used for training these models was heavily biased towards data from specific geographic regions with a history of frequent forest fires. This led to the AI model being more accurate in predicting fires in those specific regions, while underestimating the risk in areas with less historical data.

- Bias: Geographic bias, favoring data from regions with frequent fires.

- Impact: The model accurately predicted fire risk in regions with historical data but underestimated the risk in regions with less data.

- Lesson Learned: Collect data from diverse geographic locations to avoid regional bias.
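A pattern like this only shows up when model error is reported per region rather than as a single overall score. The sketch below computes the mean signed error and mean absolute error for each region; a clearly negative mean error indicates systematic underestimation. The function name, region labels, and risk values are hypothetical and are not taken from the case study.

```python
from collections import defaultdict


def error_by_region(regions, y_true, y_pred):
    """Return mean signed error, mean absolute error and count per region."""
    # For each region keep [sum of errors, sum of absolute errors, count].
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for region, truth, pred in zip(regions, y_true, y_pred):
        err = pred - truth
        acc = sums[region]
        acc[0] += err
        acc[1] += abs(err)
        acc[2] += 1
    return {
        region: {
            "mean_error": acc[0] / acc[2],
            "mae": acc[1] / acc[2],
            "n": acc[2],
        }
        for region, acc in sums.items()
    }


# Invented fire-risk scores: region_a is well covered, region_b is not.
regions = ["region_a", "region_a", "region_b", "region_b"]
observed = [4.0, 6.0, 5.0, 7.0]
predicted = [4.5, 5.5, 3.0, 4.0]

stats = error_by_region(regions, observed, predicted)
for region, s in stats.items():
    print(region, s)
```

In this made-up example the errors for `region_a` cancel out, while `region_b` has a negative mean error, which is the signature of the underestimation described above. An aggregate metric over all four records would hide that difference.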

Case Study: Estimating Crop Yields

Another case study involves an AI project aiming to estimate crop yields using satellite imagery. The dataset used for training the model primarily comprised data from industrialized agricultural regions with high levels of mechanization and resource availability. This led to the model overestimating crop yields in less developed regions with lower levels of agricultural infrastructure.

- Bias: Resource availability bias, favoring data from regions with high resource availability.

- Impact: The model overestimated crop yields in less developed regions with lower resource availability.

- Lesson Learned: Ensure the dataset includes data from diverse agricultural contexts, reflecting varying levels of resource availability.

The Future of AI and Climate Change

The potential of AI to address climate change is immense. AI can analyze vast amounts of data to identify patterns, predict climate impacts, and optimize resource allocation. However, this potential is threatened by the pervasive issue of bias in AI datasets.

Addressing this bias is crucial to ensure that AI solutions are equitable, effective, and truly serve the purpose of mitigating climate change.

Ethical Considerations and Responsible AI Development

Ethical considerations and responsible AI development are paramount for ensuring that AI solutions for climate change are equitable and beneficial. The development and deployment of AI for climate change mitigation should prioritize the following:

- Transparency and Explainability: AI models should be transparent and explainable, allowing users to understand how decisions are made and identify potential biases. This transparency fosters trust and accountability, crucial for responsible AI development.

- Fairness and Inclusivity: AI systems should be designed to be fair and inclusive, ensuring that all communities benefit from their implementation. This requires addressing biases in data and algorithms, and considering the diverse needs and perspectives of different communities.

- Privacy and Data Security: The use of AI for climate change should respect privacy and data security. Data collection and usage must be ethical and compliant with relevant regulations, ensuring the protection of individuals’ information.

- Collaboration and Engagement: Effective AI solutions for climate change require collaboration and engagement with diverse stakeholders, including researchers, policymakers, and communities. This ensures that AI development is aligned with real-world needs and priorities.

Roadmap for Unbiased and Equitable AI Solutions

A roadmap for creating unbiased and equitable AI solutions for climate change research and action involves a multi-faceted approach that addresses data, algorithms, and societal context:

- Data Collection and Curation: The first step is to address bias in data collection. This involves identifying and mitigating biases in data sources, ensuring diverse and representative datasets, and developing robust data quality control measures. For example, incorporating data from underrepresented regions and communities is crucial to ensure that AI models accurately reflect the diverse impacts of climate change.

- Algorithm Development and Evaluation: Algorithm development should prioritize fairness and equity. This includes developing techniques for bias detection and mitigation, employing fairness metrics to evaluate model performance, and using explainable AI techniques to understand the reasoning behind model predictions. For instance, counterfactual analysis can show how changes in the input data would alter model predictions, revealing potential biases.

- Community Engagement and Validation: Engaging with communities affected by climate change is essential to ensure that AI solutions are aligned with their needs and priorities. This includes involving communities in the design, development, and evaluation of AI systems, ensuring that their voices are heard and their perspectives are reflected in the solutions.

- Policy and Regulation: Policy and regulatory frameworks need to be developed to guide the responsible development and deployment of AI for climate change. These frameworks should address ethical considerations, data privacy, and accountability, ensuring that AI solutions are used for good and do not exacerbate existing inequalities.
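The counterfactual analysis mentioned in the roadmap can be sketched in a few lines: change one attribute of a record, hold everything else fixed, and see how far the prediction moves. The example below is only a toy illustration under stated assumptions. The `toy_model` function, its deliberately built-in regional penalty, and the feature names are all hypothetical and stand in for whatever real model is being audited.

```python
def counterfactual_shift(predict, record, feature, new_value):
    """Return the change in prediction when one feature is altered.

    The original record is left untouched; only a copy is modified.
    """
    altered = dict(record)
    altered[feature] = new_value
    return predict(altered) - predict(record)


def toy_model(record):
    """Hypothetical yield model with a deliberate regional penalty."""
    base = 2.0 * record["rainfall_anomaly"]
    penalty = 1.0 if record["region"] == "developing" else 0.0
    return base - penalty


# Invented record; only the region label is changed in the counterfactual.
record = {"rainfall_anomaly": 1.5, "region": "developed"}
shift = counterfactual_shift(toy_model, record, "region", "developing")
print(shift)
```

A nonzero shift means the prediction depends on the region label even when the physical inputs are identical. Whether that dependence is a legitimate signal or an artifact of biased training data is a question for domain experts and affected communities, not something the number alone can settle.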