This article looks at how Unitary AI can be applied to social media content moderation. Imagine a world where social media platforms are free from harmful content, where hateful speech and misinformation are detected and removed before they can spread, and where user safety is paramount.

This is the promise of unitary AI, a cutting-edge technology that leverages the power of artificial intelligence to revolutionize content moderation.

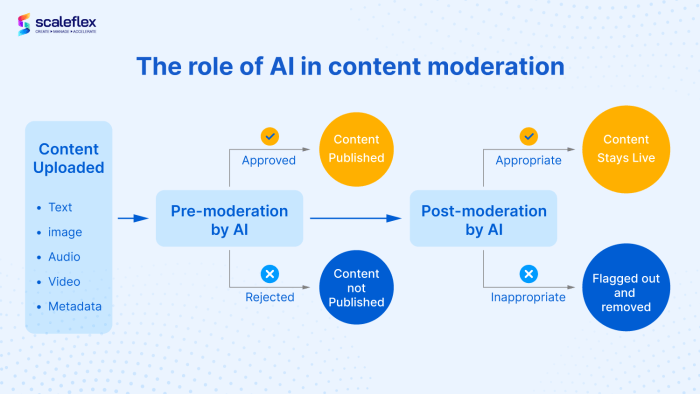

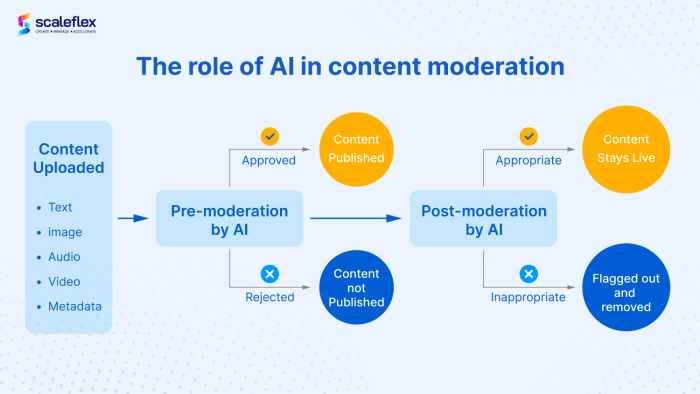

Unitary AI, unlike traditional content moderation methods that rely heavily on human review, utilizes sophisticated algorithms trained on vast datasets to identify and flag potentially harmful content. This intelligent approach offers a more efficient and accurate way to safeguard online communities, allowing human moderators to focus on more complex and nuanced cases.

Introduction to Unitary AI in Social Media Content Moderation

Unitary AI is a revolutionary approach to content moderation that aims to enhance the effectiveness and efficiency of online platforms in identifying and removing harmful content. This technology leverages the power of artificial intelligence to analyze vast amounts of data and learn complex patterns, enabling it to make more informed and nuanced decisions about content moderation.

The Evolution of AI-Powered Content Moderation

Content moderation has undergone a significant transformation with the advent of AI, which has developed in roughly three stages:


- Rule-based systems: Early AI-powered content moderation systems used rule-based approaches, relying on predefined rules to identify and flag potentially harmful content. These systems often struggled to adapt to the evolving nature of online content.

- Machine learning: The introduction of machine learning algorithms enabled AI systems to learn from data and improve their accuracy over time. These systems could analyze vast amounts of data to identify patterns and classify content more effectively.

- Deep learning: More recently, deep learning algorithms have emerged as a powerful tool for content moderation. These algorithms can analyze complex patterns in data and make more nuanced decisions about content moderation.
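The brittleness of rule-based systems is easy to see in a minimal keyword filter. The sketch below is illustrative only: `BLOCKED_TERMS` and `rule_based_flag` are hypothetical names, and a real blocklist would be far larger and tied to specific platform policies.

```python
import re

# Hypothetical blocklist for illustration only.
BLOCKED_TERMS = ["idiot", "scam"]

# One case-insensitive pattern; \b word boundaries stop "scam" from matching inside "scampi".
BLOCK_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, BLOCKED_TERMS)) + r")\b", re.IGNORECASE
)


def rule_based_flag(text: str) -> bool:
    """Return True if the text contains a blocked term, spelled exactly as listed."""
    return BLOCK_PATTERN.search(text) is not None


print(rule_based_flag("You absolute IDIOT"))  # True
print(rule_based_flag("You absolute 1d10t"))  # False: simple character swaps evade the rule
print(rule_based_flag("Try the scampi"))      # False
```

Every new spelling or evasion has to be added to the list by hand, which is the maintenance burden that pushed moderation toward learned models.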

Challenges of Traditional Content Moderation Methods

Traditional content moderation methods often face several challenges, including:

- Scale: The sheer volume of content generated online makes it difficult for human moderators to keep up with the task of identifying and removing harmful content.

- Bias: Human moderators can be susceptible to bias, which can lead to inconsistent and unfair content moderation decisions.

- Evolving Content: The constant evolution of online content, including new forms of harmful content, makes it difficult for traditional methods to keep up.

How Unitary AI Addresses Content Moderation Challenges

Unitary AI addresses these challenges by leveraging the power of AI to:

- Automate Content Moderation: Unitary AI can automate the process of identifying and removing harmful content, freeing up human moderators to focus on more complex tasks.

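- Reduce Individual Bias: Unitary AI applies the same learned criteria to every piece of content, reducing the influence of any single moderator's bias. It does not remove bias altogether, however; biases in the training data can still carry over into its decisions.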

- Adapt to Evolving Content: Unitary AI systems can learn from new data and adapt to the ever-changing landscape of online content.
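Automating moderation while keeping humans on the hard cases is commonly done by routing each item on a model's confidence score. The sketch below assumes a model that outputs a harm probability between 0 and 1; the threshold values, the `Decision` type, and the `triage` function are hypothetical and would in practice be tuned per policy.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values are tuned per policy and per platform.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Decision:
    action: str  # "remove", "human_review", or "allow"
    score: float


def triage(harm_score: float) -> Decision:
    """Route content based on a model's estimated probability that it is harmful."""
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be a probability in [0, 1]")
    if harm_score >= REMOVE_THRESHOLD:
        return Decision("remove", harm_score)        # high confidence: act automatically
    if harm_score >= REVIEW_THRESHOLD:
        return Decision("human_review", harm_score)  # uncertain: escalate to a person
    return Decision("allow", harm_score)             # low risk: leave the content up


for score in (0.99, 0.7, 0.1):
    print(score, triage(score).action)
# 0.99 remove
# 0.7 human_review
# 0.1 allow
```

Widening the band between the two thresholds sends more items to people and fewer decisions to the model; narrowing it does the opposite.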

Key Features of Unitary AI for Content Moderation

Unitary AI is a powerful tool for content moderation, leveraging advanced technologies like natural language processing (NLP), machine learning (ML), and deep learning (DL) to identify and remove harmful content. These features allow unitary AI to analyze content with greater accuracy and speed than traditional methods, ultimately leading to a safer online environment.

Natural Language Processing (NLP)

NLP is a critical component of unitary AI, enabling it to understand the meaning and context of text. NLP algorithms analyze the structure, syntax, and semantics of text, allowing the AI to recognize patterns and nuances that humans might miss.

This ability is essential for detecting subtle forms of hate speech, harassment, and other harmful content.
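Before any model sees the text, NLP pipelines typically normalize it so that trivial obfuscations do not hide its meaning. The following is a minimal sketch of normalization and tokenization; the substitution table `LEET_MAP` and the helper names are assumptions, and production systems generally rely on learned subword tokenizers rather than a hand-written regular expression.

```python
import re
import unicodedata

# Hypothetical table of character swaps often used to dodge filters.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    # Split accented letters into base letter + combining mark, then drop the marks.
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    # Lowercase and undo common character substitutions.
    text = text.lower().translate(LEET_MAP)
    # Collapse runs of three or more identical characters ("soooo" -> "so").
    return re.sub(r"(.)\1{2,}", r"\1", text)


def tokenize(text: str) -> list[str]:
    """Split normalized text into word tokens."""
    return re.findall(r"[a-z']+", normalize(text))


print(normalize("You 1d10t!!!"))     # you idiot!
print(tokenize("Sooooo STUPIDDD"))   # ['so', 'stupid']
```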

Machine Learning (ML)

ML algorithms play a crucial role in training unitary AI models to identify harmful content. These algorithms learn from vast datasets of labeled content, identifying patterns and features associated with different types of harmful content. As the AI encounters new content, it applies these learned patterns to determine whether it is harmful or not.
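The supervised learning loop described above, learning word patterns from labeled examples and applying them to new content, can be sketched with a multinomial naive Bayes classifier, one of the simplest ML text classifiers. This is not Unitary AI's model; the class, the toy training sentences, and the "harmful"/"ok" labels are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.classes = sorted(set(labels))
        # Log prior: how common each label is in the training data.
        self.log_priors = {c: math.log(labels.count(c) / len(labels)) for c in self.classes}
        # Per-label word counts learned from the labeled examples.
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def _log_likelihood(self, word: str, label: str) -> float:
        # Add-one smoothing keeps an unseen word from ruling out a label entirely.
        count = self.word_counts[label][word]
        return math.log((count + 1) / (self.totals[label] + len(self.vocab)))

    def predict(self, text: str) -> str:
        # Pick the label with the highest log prior + summed log likelihoods.
        scores = {
            c: self.log_priors[c] + sum(self._log_likelihood(w, c) for w in tokenize(text))
            for c in self.classes
        }
        return max(scores, key=scores.get)


# Hypothetical toy training data; real systems learn from very large labeled corpora.
texts = [
    "you are a worthless idiot",
    "get lost you idiot",
    "nobody wants you here loser",
    "great photo thanks for sharing",
    "congrats on the new job",
    "thanks this was really helpful",
]
labels = ["harmful", "harmful", "harmful", "ok", "ok", "ok"]

model = NaiveBayes().fit(texts, labels)
print(model.predict("what an idiot"))         # harmful
print(model.predict("thanks for the photo"))  # ok
```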

Deep Learning (DL)

DL is a subset of ML that utilizes artificial neural networks with multiple layers to analyze complex data. In the context of content moderation, DL enables unitary AI to understand the nuances of human language and identify subtle forms of harmful content that traditional methods might miss.

DL algorithms can also learn from unstructured data, such as images and videos, making them highly effective for moderating multimedia content.
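When separate deep learning models score the text, image, and audio of a post, their outputs have to be combined into one decision. One simple option is late fusion, a weighted average of the per-modality scores. The sketch below assumes such per-modality harm probabilities already exist; the weights and the `late_fusion` function are hypothetical.

```python
# Hypothetical per-modality weights; real systems learn these or use a jointly trained model.
MODALITY_WEIGHTS = {"text": 0.5, "image": 0.3, "audio": 0.2}


def late_fusion(scores: dict[str, float]) -> float:
    """Weighted average of per-modality harm probabilities for the modalities present."""
    if not scores:
        raise ValueError("no modality scores supplied")
    unknown = set(scores) - set(MODALITY_WEIGHTS)
    if unknown:
        raise ValueError(f"no weight configured for: {sorted(unknown)}")
    # Renormalize over the modalities actually present (e.g. a post with no audio).
    total_weight = sum(MODALITY_WEIGHTS[m] for m in scores)
    return sum(MODALITY_WEIGHTS[m] * s for m, s in scores.items()) / total_weight


# A meme with a harmless-looking caption but a hateful image.
print(round(late_fusion({"text": 0.2, "image": 0.9}), 4))  # 0.4625
```

The example also shows the limitation of late fusion: the benign caption dilutes the hateful image, so the meme scores only about 0.46. Models that process modalities jointly are designed to capture meaning that only emerges from their combination.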

Comparison with Traditional Content Moderation Techniques

Unitary AI offers several advantages over traditional content moderation techniques, which often rely on manual review or rule-based systems.

- Speed and Efficiency: Unitary AI can analyze content much faster than human moderators, allowing for quicker identification and removal of harmful content. This is particularly important in the fast-paced environment of social media, where content is constantly being shared.

- Accuracy: Unitary AI models can be trained on vast datasets, allowing them to learn complex patterns and nuances that human moderators might miss. When the training data is representative, this can improve the identification of harmful content and reduce both false positives (benign content flagged) and false negatives (harmful content missed).

- Scalability: Unitary AI can handle large volumes of content, making it well suited to platforms with millions of users. Traditional methods often struggle to keep up with the sheer volume of content generated on social media.
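Claims about accuracy are usually made concrete with precision (how many flagged items were actually harmful), recall (how many harmful items were caught), and the false positive rate (how much benign content was wrongly flagged). A minimal sketch, assuming ground-truth labels from human review; the function names and sample labels are hypothetical.

```python
def confusion_counts(y_true: list[bool], y_pred: list[bool]) -> tuple[int, int, int, int]:
    """Count true positives, false positives, false negatives, and true negatives."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))
    tn = sum((not t) and (not p) for t, p in zip(y_true, y_pred))
    return tp, fp, fn, tn


def moderation_metrics(y_true: list[bool], y_pred: list[bool]) -> dict[str, float]:
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# True = harmful. Hypothetical reviewer labels vs. model flags for eight posts.
y_true = [True, True, True, False, False, False, False, False]
y_pred = [True, True, False, True, False, False, False, False]
print(moderation_metrics(y_true, y_pred))
# {'precision': 0.6666666666666666, 'recall': 0.6666666666666666, 'false_positive_rate': 0.2}
```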

“Unitary AI is a game-changer for content moderation, offering a more efficient and accurate way to identify and remove harmful content. By leveraging advanced technologies like NLP, ML, and DL, unitary AI empowers social media platforms to create a safer online environment for all users.”

Benefits of Unitary AI in Content Moderation

Unitary AI offers a significant advantage in social media content moderation by streamlining processes, enhancing accuracy, and scaling operations effectively. This technology empowers platforms to address the ever-increasing volume of user-generated content while maintaining a high standard of moderation.

Increased Efficiency

Unitary AI significantly boosts efficiency in content moderation by automating repetitive tasks and reducing the workload on human moderators. This allows human teams to focus on more complex issues that require nuanced judgment and human understanding. For example, unitary AI can automatically flag content that violates platform policies, such as hate speech or spam, freeing up human moderators to concentrate on addressing more complex cases involving satire, sarcasm, or cultural context.

Improved Accuracy

Unitary AI enhances the accuracy of content moderation by leveraging machine learning algorithms to identify patterns and nuances in content that may be missed by human moderators. This technology can analyze vast amounts of data and learn from past moderation decisions, continuously improving its ability to identify and flag inappropriate content.

For example, unitary AI can be trained to recognize subtle forms of hate speech, such as coded language or slurs framed as jokes, which reviewers working through large queues might overlook.

Scalability

Unitary AI provides a scalable solution for content moderation, allowing platforms to handle the increasing volume of user-generated content without compromising the quality of moderation. As platforms grow, unitary AI can adapt to the increased workload, ensuring that content is moderated consistently and effectively.

For example, large platforms such as Facebook and YouTube rely heavily on automated, AI-based moderation to handle the enormous volume of posts and videos uploaded daily, helping to keep their platforms safe for users.

Reduced Workload for Human Moderators

Unitary AI reduces the workload for human moderators by pre-screening content and flagging only potentially problematic items for review. Because clear-cut violations, such as obvious spam, can be handled automatically, fewer items reach the human queue, and moderators can spend their time on cases that genuinely require judgment.

Real-World Applications

AI-based content moderation of this kind is already used in production, where it helps improve efficiency, accuracy, and scalability. For example, Facebook uses AI systems to identify and remove harmful content, such as hate speech and misinformation, from its platform.

Similarly, YouTube uses machine learning to flag inappropriate videos and comments for removal or human review. These examples highlight the real-world impact of AI-driven moderation in shaping a safer and more responsible online environment.

Ethical Considerations of Unitary AI in Content Moderation

The rise of unitary AI in content moderation presents a compelling opportunity to enhance efficiency and accuracy, but it also raises crucial ethical concerns that need careful consideration. As AI systems increasingly influence decision-making in this domain, it is essential to address potential biases, privacy implications, and transparency issues.

Potential for Bias in Unitary AI Content Moderation

AI algorithms are trained on vast datasets, and if these datasets contain biases, the AI systems can inherit and even amplify those biases. This can lead to discriminatory content moderation practices, unfairly targeting certain groups or individuals. For example, an AI trained on a dataset that disproportionately reflects certain demographics might misinterpret content from other groups, leading to unfair content removal or suppression.

Privacy Implications of Unitary AI in Content Moderation

Unitary AI systems often require access to large amounts of data, including user content and metadata. This raises concerns about privacy, as the collection and analysis of this data could potentially be used to identify and track individuals without their consent.

For instance, AI systems might be able to infer sensitive information about users based on their online activity, such as their political views, religious beliefs, or health conditions.

Transparency and Explainability in Unitary AI Content Moderation

The decision-making processes of AI systems can be complex and opaque, making it difficult to understand why a particular piece of content was flagged or removed. This lack of transparency can lead to mistrust and undermine user confidence in content moderation systems.

It is crucial to develop mechanisms that provide clear explanations for AI-driven moderation decisions, allowing users to understand the reasoning behind the actions taken.
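For linear models, such an explanation can be exact: the score is a sum of per-token weights, so listing the largest contributions shows why a post was flagged. The sketch below assumes a hypothetical trained linear classifier, represented by `TOKEN_WEIGHTS` and `BIAS`; deep models need approximate attribution methods, such as SHAP or integrated gradients, to produce comparable explanations.

```python
import re

# Hypothetical weights from a trained linear classifier (positive = pushes toward "harmful").
TOKEN_WEIGHTS = {"idiot": 2.1, "worthless": 1.8, "loser": 1.5, "thanks": -1.2, "great": -0.9}
BIAS = -1.0


def explain(text: str, top_k: int = 3) -> tuple[float, list[tuple[str, float]]]:
    """Return the raw score and the tokens that contributed most to it."""
    tokens = re.findall(r"[a-z']+", text.lower())
    contributions = [(t, TOKEN_WEIGHTS[t]) for t in tokens if t in TOKEN_WEIGHTS]
    # For a linear model the score is exactly the bias plus these contributions.
    score = BIAS + sum(w for _, w in contributions)
    ranked = sorted(contributions, key=lambda tw: abs(tw[1]), reverse=True)
    return score, ranked[:top_k]


score, reasons = explain("Thanks, you worthless idiot")
print(round(score, 2))  # 1.7
print(reasons)          # [('idiot', 2.1), ('worthless', 1.8), ('thanks', -1.2)]
```

A moderation notice could then cite the top-ranked tokens as the reason for the decision.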

Recommendations for Mitigating Ethical Risks

- Use diverse and representative datasets: Training AI systems on diverse and representative datasets is crucial to minimize bias. This involves ensuring that the data used for training reflects the diversity of the user population and includes content from various backgrounds and perspectives.

- Implement robust auditing and monitoring: Regularly auditing and monitoring AI systems for bias is essential. This involves analyzing the performance of the AI system across different demographics and identifying any potential biases or discriminatory outcomes.

- Ensure transparency and explainability: Developers should strive to create AI systems that are transparent and explainable. This involves providing clear and concise explanations for AI-driven decisions, allowing users to understand the reasoning behind the actions taken.

- Promote human oversight and accountability: Human oversight and accountability are crucial in AI-driven content moderation. This involves ensuring that human moderators have the final say in controversial cases and are responsible for addressing any errors or biases in the AI system.
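An audit of this kind can start with a simple comparison: the false positive rate for each group, that is, how often benign content from that group is wrongly flagged. The sketch assumes each moderated item carries a group label, a ground-truth harm label, and the model's decision; the group names, records, and function names are hypothetical, and what disparity counts as acceptable is a policy decision the code does not make.

```python
from collections import defaultdict
from collections.abc import Iterable


def false_positive_rates(records: Iterable[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, is_harmful, was_flagged) tuples. Returns FPR per group."""
    false_positives: dict[str, int] = defaultdict(int)
    benign: dict[str, int] = defaultdict(int)
    for group, is_harmful, was_flagged in records:
        if not is_harmful:  # only benign items can be false positives
            benign[group] += 1
            if was_flagged:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in benign.items()}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Highest group FPR divided by the lowest (1.0 means parity)."""
    lowest, highest = min(rates.values()), max(rates.values())
    if highest == 0:
        return 1.0
    return highest / lowest if lowest > 0 else float("inf")


# Hypothetical audit sample for two groups, "A" and "B".
records = [
    ("A", False, False), ("A", False, False), ("A", False, True), ("A", False, False),
    ("B", False, True), ("B", False, True), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]
rates = false_positive_rates(records)
print(rates)                   # {'A': 0.25, 'B': 0.5}
print(disparity_ratio(rates))  # 2.0
```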

Future Directions of Unitary AI in Social Media Content Moderation

Unitary AI, with its potential to harmonize diverse content moderation approaches, is poised to shape the future of online platforms. As technology evolves, so too will the capabilities of unitary AI, leading to more nuanced and effective content moderation practices.

Emerging Technologies and Approaches

The advancement of unitary AI in content moderation is closely intertwined with emerging technologies and approaches. These innovations promise to enhance the capabilities of unitary AI and address the evolving challenges of online content.

- Explainable AI (XAI): XAI aims to make AI decision-making transparent and understandable. By providing insights into the reasoning behind unitary AI's content moderation decisions, platforms can improve trust and accountability. For example, XAI could help explain why a specific post was flagged as harmful, providing users with a clear understanding of the system's logic.

- Federated Learning: This approach enables multiple platforms to collaborate on training unitary AI models without sharing their sensitive data. This decentralized learning paradigm allows for more robust and diverse models, better equipped to handle the complexities of global content moderation. For instance, platforms could collaborate to train a unitary AI model that identifies hate speech across different languages and cultural contexts.

- Multimodal Content Understanding: Unitary AI can be enhanced to analyze and understand multimodal content, such as text, images, videos, and audio. This capability is crucial for effectively moderating content that goes beyond text-based communication, such as hate speech expressed through memes or videos.

For example, unitary AI could identify hate speech in videos by analyzing both the visual and audio components.
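The aggregation step of federated learning can be sketched with Federated Averaging (FedAvg): each platform trains locally and shares only its model weights, which a coordinator averages in proportion to how much data each platform used. This is a simplified sketch under stated assumptions: models are flat lists of floats, and `federated_average` and the example numbers are hypothetical.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation over (weights, num_examples) pairs from each client.

    Only model weights are shared; each client's raw training data stays local.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    dim = len(client_updates[0][0])
    if any(len(w) != dim for w, _ in client_updates):
        raise ValueError("all clients must share the same model shape")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("clients must report a positive number of examples")
    # Each coordinate is a weighted mean, so clients with more data count for more.
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]


# Hypothetical round: platform A trained on 100 examples, platform B on 300.
print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))  # [2.5, 3.5]
```

In a full system the averaged weights are sent back to every platform for the next round of local training.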

Hypothetical Scenario: Addressing Future Challenges

Imagine a future where unitary AI plays a pivotal role in combating misinformation and harmful content related to climate change. A global network of platforms, using federated learning, could train a unitary AI model to detect and flag false or misleading information about climate science.

This model could be equipped with multimodal content understanding capabilities to analyze images, videos, and text, identifying misleading claims, manipulated data, and conspiracy theories. The unitary AI system could then provide users with accurate information from credible sources, helping to counter the spread of misinformation and promote a more informed public discourse on climate change.