The principle that EU rules must ensure AI protects human rights has taken center stage as the world grapples with the potential risks and benefits of artificial intelligence. The European Union's response is groundbreaking legislation that seeks to ensure that AI development and deployment are guided by ethical principles, with the protection of fundamental rights at their core.

The EU AI Act aims to establish a robust regulatory framework that balances innovation with the protection of fundamental freedoms, paving the way for a future where AI empowers humanity without compromising our values.

At the heart of the EU AI Act lies a comprehensive approach to risk categorization. The Act distinguishes between levels of risk associated with AI systems, ranging from minimal to unacceptable. High-risk AI systems, those deemed to pose significant risks to health, safety, or fundamental rights, are subject to stringent requirements, including rigorous risk assessments, mitigation measures, and ongoing monitoring.

This tiered approach is intended to make regulatory obligations proportionate to the potential for harm, so that the strictest rules apply where human safety and well-being are most at stake.

The Need for AI Regulation

The rapid advancement of artificial intelligence (AI) has brought immense potential for progress in various fields, but it also presents significant risks if not properly regulated. Unchecked AI development could lead to unforeseen consequences that could harm individuals and society as a whole.

Potential Risks of Unregulated AI

The potential risks of unregulated AI are multifaceted and can have far-reaching consequences. Without proper safeguards, AI could be used to:

- Amplify existing biases: AI systems trained on biased data can perpetuate and even amplify existing societal prejudices, leading to discrimination in areas like hiring, lending, and criminal justice.

- Undermine privacy: AI-powered surveillance systems can collect and analyze vast amounts of personal data, raising concerns about privacy violations and the potential for misuse.

- Threaten job security: As AI systems become more sophisticated, they could automate tasks currently performed by humans, potentially leading to widespread job displacement.

- Enable autonomous weapons systems: The development of AI-powered autonomous weapons systems raises ethical and legal concerns about the potential for unintended consequences and the loss of human control over warfare.

Examples of AI Applications Posing Threats to Human Rights

Several real-world examples illustrate how AI applications, if not carefully developed and deployed, can pose threats to human rights.

- Facial recognition systems: These systems have been used for surveillance purposes, leading to concerns about privacy violations and the potential for misuse by authoritarian regimes.

- Predictive policing algorithms: These algorithms have been criticized for perpetuating racial biases in law enforcement, leading to unfair targeting of minority communities.

- Social media algorithms: These algorithms can contribute to the spread of misinformation and hate speech, potentially fueling social unrest and polarization.

Importance of Ethical Guidelines for AI Development and Deployment

Establishing clear ethical guidelines for AI development and deployment is crucial to mitigating the risks and ensuring that AI benefits humanity. These guidelines should address:

- Transparency and explainability: AI systems should be transparent and explainable, allowing users to understand how they work and make informed decisions.

- Fairness and non-discrimination: AI systems should be designed and trained to avoid bias and discrimination, ensuring equal treatment for all individuals.

- Privacy and data protection: AI systems should respect individual privacy and data protection rights, minimizing the collection and use of personal information.

- Accountability and oversight: Mechanisms for accountability and oversight should be established to ensure that AI systems are used responsibly and ethically.

Key Provisions of the EU AI Act

The EU AI Act, currently under negotiation, aims to establish a comprehensive regulatory framework for AI systems within the European Union. This framework prioritizes human rights protection and fosters responsible AI development and deployment. The Act classifies AI systems into different risk categories, imposing specific requirements based on their potential impact on individuals and society.

Risk Categories for AI Systems

The EU AI Act categorizes AI systems based on their potential risk levels, with each category attracting different regulatory obligations. The Act aims to strike a balance between promoting innovation and ensuring ethical and safe use of AI.

- Unacceptable Risk AI Systems: These systems are prohibited because they pose an unacceptable risk to human safety and fundamental rights. Examples include AI systems used for social scoring, real-time remote biometric identification (such as facial recognition) in publicly accessible spaces for law enforcement purposes, subject to narrow exceptions, and manipulative AI techniques designed to exploit vulnerable individuals.

- High-Risk AI Systems: These systems are subject to stringent requirements because of their potential for significant harm. The Act identifies them in two ways: AI systems that are safety components of products already covered by EU product-safety legislation, such as medical devices, and AI systems used in listed areas where they could affect people's health, safety, fundamental rights, or access to essential services. Examples include AI systems used in critical infrastructure, healthcare, education, employment, and law enforcement.

- Limited Risk AI Systems: These systems pose a lower risk to individuals and society and are subject mainly to transparency obligations. For example, people must be told when they are interacting with a chatbot, and AI-generated deepfakes must be disclosed as such.

- Minimal Risk AI Systems: These systems are considered to pose minimal risk and are not subject to specific regulatory requirements. Examples include AI-enabled video games and spam filters.

Requirements for High-Risk AI Systems

The EU AI Act imposes specific requirements on high-risk AI systems to ensure their safety, transparency, and accountability. These requirements aim to mitigate potential risks and protect individuals’ rights.

- Risk Assessment: Developers of high-risk AI systems must conduct thorough risk assessments, identifying potential risks and evaluating their likelihood and severity. This assessment should consider factors like data quality, algorithmic bias, and potential impact on individuals and society.

- Risk Mitigation Measures: Developers must implement appropriate risk mitigation measures to minimize the likelihood and impact of identified risks. These measures can include data anonymization, bias detection and mitigation techniques, and robust testing and validation procedures.

- Transparency and Explainability: High-risk AI systems should be designed and operated in a transparent and explainable manner. This means users should be informed about the system’s functioning and be able to understand the rationale behind its decisions.

- Human Oversight: Developers must ensure appropriate human oversight over the operation of high-risk AI systems. This can involve human review of decisions made by the system, the ability to intervene in case of errors or biases, and the establishment of clear lines of responsibility.

- Data Governance: Developers must train and operate high-risk AI systems on data that is relevant, sufficiently representative, and, as far as possible, free of errors and complete, and they must examine that data for possible biases. The Act emphasizes the importance of data governance and responsible data handling practices.

- Conformity Assessment: High-risk AI systems must undergo conformity assessment to ensure they comply with the requirements of the EU AI Act. This assessment can be conducted by independent third-party bodies or by the developer itself, depending on the specific system and its risks.

- Market Surveillance: The EU AI Act establishes a system of market surveillance to monitor the compliance of AI systems with the Act’s requirements. National authorities will be responsible for enforcing the Act and ensuring that AI systems meet the necessary standards.
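In practice, the human-oversight requirement is often implemented as a review queue: the system applies a decision automatically only when the outcome is favorable and the model is confident, and routes everything else to a person who can confirm or override it. The sketch below is illustrative only. It assumes a hypothetical model that returns a score between 0 and 1, and the names `Decision`, `route`, and `review_queue`, the 0.5 boundary, and the 0.15 margin are all assumptions rather than anything prescribed by the Act.

```python
from dataclasses import dataclass

# Hypothetical decision boundary: a case is approved when score >= THRESHOLD.
THRESHOLD = 0.5


@dataclass
class Decision:
    case_id: str
    score: float  # hypothetical model output: estimated probability of a favorable outcome


def route(decision: Decision, margin: float = 0.15) -> str:
    """Return "auto_approve" or "human_review" for one model decision."""
    if not 0.0 <= decision.score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if decision.score < THRESHOLD:
        # Adverse outcomes are never applied automatically; a person decides.
        return "human_review"
    if decision.score - THRESHOLD < margin:
        # Approvals close to the boundary are uncertain, so a person confirms them.
        return "human_review"
    return "auto_approve"


def review_queue(decisions: list[Decision], margin: float = 0.15) -> list[str]:
    """Collect the ids of cases that a human reviewer must handle."""
    return [d.case_id for d in decisions if route(d, margin) == "human_review"]


if __name__ == "__main__":
    batch = [Decision("a", 0.92), Decision("b", 0.58), Decision("c", 0.31)]
    print(review_queue(batch))  # ['b', 'c']
```

Sending every adverse outcome to a person is a deliberately conservative choice in this sketch; a real deployment would set its routing rules from its own risk assessment.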

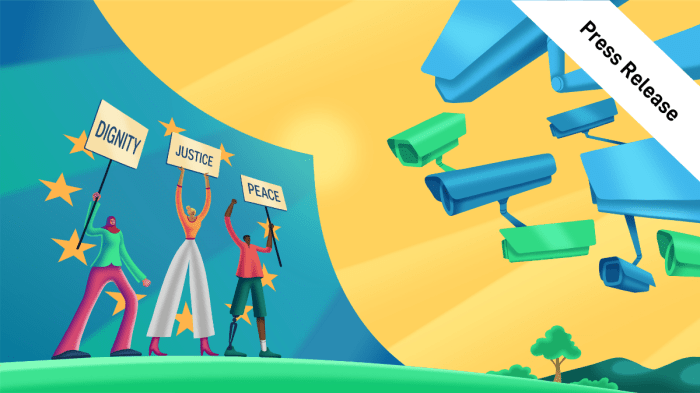

Protecting Fundamental Rights

The EU AI Act recognizes that artificial intelligence, while offering immense potential, can also pose significant risks to fundamental rights. The Act aims to ensure that AI development and deployment are aligned with European values and principles, safeguarding individual freedoms and promoting a fair and inclusive society.

Privacy and Data Protection

The EU AI Act acknowledges the inherent connection between AI and data processing, emphasizing the need for robust data protection measures. It operates alongside the General Data Protection Regulation (GDPR), so AI systems that process personal data must still be designed and operated in a way that minimizes the collection, use, and storage of that data.

This includes GDPR principles such as data minimization, purpose limitation, and accountability, as well as the rights of access, rectification, and erasure that give individuals control over their information. Examples of AI applications that could infringe on privacy include:

- Facial recognition systems used for mass surveillance without proper legal justification or oversight.

- AI-powered marketing tools that track and analyze user behavior across multiple platforms without explicit consent.

- Health apps that collect sensitive health data without transparency or clear consent mechanisms.

Non-discrimination and Fairness

The EU AI Act emphasizes the importance of ensuring that AI systems do not perpetuate or exacerbate existing societal biases. For high-risk systems, it requires risk assessments that identify and mitigate potential discrimination. This includes evaluating the training data used to develop the AI, as well as the potential impact of the system on different groups of people.
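One common starting point for evaluating impact on different groups is to compare selection rates, meaning the share of each group that receives the favorable outcome. The sketch below computes per-group rates and the ratio of the lowest rate to the highest. The 0.8 flagging cut-off is borrowed from the US "four-fifths" rule of thumb used in employment contexts; it is an illustrative assumption here, not a threshold set by the EU AI Act, and the group labels and counts are hypothetical. A single metric like this can prompt a closer look but cannot by itself establish or rule out discrimination.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group, from (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest group selection rate."""
    if not rates:
        raise ValueError("need at least one group")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so selection rates do not differ
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical screening results: (group label, passed to interview?)
    data = [("A", True)] * 40 + [("A", False)] * 60 + [("B", True)] * 25 + [("B", False)] * 75
    rates = selection_rates(data)
    ratio = impact_ratio(rates)
    print(rates)            # {'A': 0.4, 'B': 0.25}
    print(round(ratio, 3))  # 0.625
    print("flag for review" if ratio < 0.8 else "no flag")
```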


Examples of AI applications that could lead to discrimination include:

- AI-powered recruitment tools that favor certain demographics over others based on biased data.

- Credit scoring algorithms that unfairly disadvantage individuals based on their socioeconomic background.

- AI-driven loan applications that discriminate against applicants based on their race, ethnicity, or gender.

Freedom of Expression and Information

The EU AI Act recognizes that AI can both support and threaten freedom of expression and access to information, since it can also be used to manipulate information or censor content. The Act aims to strike a balance between protecting freedom of expression and mitigating the risks of AI-powered disinformation and manipulation, for instance by requiring that deepfakes be disclosed as artificially generated or manipulated.

Examples of AI applications that could infringe on freedom of expression include:

- AI-powered censorship tools used to suppress dissenting voices or political opinions.

- Deepfake technology used to create and spread false or misleading content, undermining trust in information sources.

- AI-driven social media algorithms that promote echo chambers and limit exposure to diverse viewpoints.

Right to Work and Social Security

The EU AI Act recognizes the potential impact of AI on the labor market, acknowledging both the opportunities and challenges it presents. AI systems used in employment, for example to recruit candidates, make promotion decisions, or allocate tasks and monitor workers, are classified as high-risk. Broader measures, such as social safety nets and retraining programs for workers displaced by automation, are largely a matter for complementary EU and national policies rather than the Act itself.

Examples of AI applications that could impact the right to work include:

- Automated decision-making systems that replace human judgment in hiring and promotion processes.

- AI-powered robots that perform tasks traditionally done by human workers, potentially leading to job displacement.

- AI-driven algorithms that optimize work schedules and allocate tasks, potentially leading to increased workload and burnout for workers.

| AI Application | Potential Risks | Safeguards |
|---|---|---|
| Facial Recognition | Privacy violations, discrimination, mass surveillance | Data minimization, purpose limitation, transparency, oversight, independent audits |
| AI-powered Recruitment Tools | Bias and discrimination, unfair hiring practices | Bias detection and mitigation, transparency, human oversight, independent audits |
| Health Apps | Data breaches, privacy violations, misuse of sensitive health data | Data encryption, secure storage, consent mechanisms, transparency, independent audits |
| AI-driven Social Media Algorithms | Echo chambers, spread of misinformation, manipulation of public opinion | Content moderation policies, transparency, user control over algorithms, independent audits |
| Automated Decision-making Systems | Bias and discrimination, lack of transparency, limited human oversight | Transparency, explainability, human oversight, independent audits, right to challenge decisions |

Enforcement and Oversight

The EU AI Act establishes a robust framework for enforcing its provisions and ensuring that AI systems are developed and used responsibly. This involves a multi-layered approach with national authorities playing a central role in implementation and oversight.

National Authorities’ Role

National authorities are responsible for enforcing the EU AI Act within their respective jurisdictions. This includes:

- Overseeing conformity assessment: National authorities designate and supervise the conformity assessment bodies ("notified bodies") that check whether high-risk AI systems meet the requirements of the Act, such as risk management and data governance, before those systems are placed on the market.

- Monitoring and auditing AI systems: They will conduct periodic checks to verify compliance with the Act’s requirements and identify any potential risks. This can involve inspecting data sets, algorithms, and deployment processes.

- Investigating violations: National authorities have the power to investigate potential breaches of the Act and impose sanctions on non-compliant entities.

- Cooperating with other authorities: They will collaborate with other national authorities and the European Commission to ensure consistent enforcement across the EU.

Mechanisms for Monitoring and Auditing AI Systems

The EU AI Act emphasizes the importance of continuous monitoring and auditing of AI systems to ensure compliance and identify potential risks. This involves:

- Technical documentation: Developers of high-risk AI systems are required to provide comprehensive technical documentation, including details about the algorithms, data sets, and intended use cases. This information is crucial for monitoring and auditing purposes.

- Independent audits: National authorities may require independent audits of high-risk AI systems to assess their compliance with the Act’s requirements. These audits can be conducted by accredited bodies or experts.

- Post-market monitoring: Developers of high-risk AI systems must operate a post-market monitoring system, and those systems must automatically log events during operation, so that performance and impact can be tracked over time. This helps identify potential biases, errors, or unintended consequences.

- Transparency and explainability: The Act promotes transparency by requiring developers to provide clear and understandable information about how AI systems work and the decisions they make. This enhances accountability and enables effective monitoring.
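Monitoring a system over time often includes checking whether the data it sees in operation has drifted away from the data it was validated on. The sketch below uses the population stability index (PSI), a widely used drift statistic, computed over pre-binned counts of one input feature. The bins, the example counts, and the 0.2 alert threshold are hypothetical; 0.2 is a common industry rule of thumb, not a value taken from the Act.

```python
import math


def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population stability index between a reference and a current histogram.

    Both lists hold counts for the same bins. Empty-bin proportions are
    replaced by a small eps so the logarithm stays finite.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("histograms must have the same bins")
    exp_total = sum(expected_counts)
    act_total = sum(actual_counts)
    if exp_total == 0 or act_total == 0:
        raise ValueError("histograms must not be empty")
    total = 0.0
    for e_count, a_count in zip(expected_counts, actual_counts):
        e = max(e_count / exp_total, eps)  # reference proportion in this bin
        a = max(a_count / act_total, eps)  # current proportion in this bin
        total += (a - e) * math.log(a / e)
    return total


if __name__ == "__main__":
    reference = [100, 300, 400, 200]   # hypothetical validation-time histogram
    same_shape = [50, 150, 200, 100]   # current period, same distribution
    shifted = [300, 300, 250, 150]     # current period, skewed toward the first bin
    print(round(psi(reference, same_shape), 4))  # 0.0
    score = psi(reference, shifted)
    print("drift alert" if score > 0.2 else "stable")
```

An alert of this kind does not show that the system has become biased or inaccurate; it signals that the evidence gathered at validation time may no longer describe current use, which is a cue for human review.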

Challenges in Implementing and Enforcing the Act

While the EU AI Act represents a significant step towards responsible AI development and use, implementing and enforcing it presents certain challenges:

- Defining high-risk AI systems: Accurately identifying and categorizing high-risk AI systems can be complex, requiring ongoing evaluation and adaptation as AI technology evolves.

- Ensuring consistent enforcement: Harmonizing enforcement practices across different national authorities is essential to prevent inconsistencies and ensure a level playing field.

- Resource constraints: Implementing and enforcing the Act requires significant resources, including expertise in AI and data privacy. National authorities may face challenges in securing adequate funding and staffing.

- Technological advancements: Keeping pace with rapid advancements in AI technology can be challenging, requiring constant updates and revisions to the Act and its enforcement mechanisms.

Future Directions

The EU AI Act, as a groundbreaking piece of legislation, lays the foundation for a future where AI is developed and used responsibly, ensuring that human rights are protected. However, the rapidly evolving nature of AI technology demands a dynamic regulatory approach, one that can adapt to emerging challenges and opportunities.

Evolving Regulatory Landscape

The AI landscape is constantly changing, with new technologies and applications emerging regularly. This dynamic environment presents both challenges and opportunities for AI regulation.

- One challenge is keeping pace with the rapid advancements in AI. Regulations need to be flexible enough to accommodate new technologies and applications while maintaining their core principles of human rights protection.

- Another challenge is ensuring that regulations are not overly restrictive, hindering innovation and development of beneficial AI applications. Finding the right balance between promoting innovation and safeguarding human rights is crucial.

- Opportunities lie in using the EU AI Act as a framework for international cooperation on AI governance.

Adapting the EU AI Act

The EU AI Act, while comprehensive, can be further developed and adapted to address emerging challenges and ensure its effectiveness.

- One area for improvement is the development of clearer guidelines and criteria for classifying AI systems into risk categories. This would provide greater clarity and consistency in the application of regulatory requirements.

- The Act already empowers the European Commission to update the list of high-risk use cases and provides for periodic evaluation. Using these mechanisms regularly will be important to keep the Act aligned with technological advancements and evolving societal concerns, so that it remains relevant and effective.

- Further research and development of effective AI auditing and certification mechanisms is crucial. This would provide a robust framework for assessing the compliance of AI systems with the Act’s requirements and building public trust in AI technologies.

International Cooperation

International cooperation is essential for ensuring the responsible development and use of AI globally. The EU AI Act can serve as a foundation for building consensus and promoting international collaboration on AI regulation.

- The EU can work with other countries to develop common standards and best practices for AI governance, fostering a global ecosystem of responsible AI development and use.

- Sharing knowledge and expertise on AI regulation, particularly in areas like risk assessment, ethical considerations, and data governance, is crucial for ensuring consistent and effective AI regulation globally.

- International collaboration can also help address the challenges of cross-border data flows and ensure the effective enforcement of AI regulations across different jurisdictions.