A predictive policing project has shown that even EU lawmakers can become targets. This story isn’t just about crime prediction; it’s about the power of data, the potential for abuse, and the very real threat to our democratic institutions.

Imagine a world where algorithms flag who might commit a crime before any offense has occurred. This is the promise of predictive policing, a technology that’s increasingly being used around the world. But what happens when these algorithms are turned on those in power, like EU lawmakers?

This article delves into the world of predictive policing, exploring its historical development, the rationale behind its use, and the ethical concerns surrounding its implementation. We’ll examine a specific case where a predictive policing project targeted EU lawmakers, analyzing the data sources used and the potential vulnerabilities they present.

The implications of this project are far-reaching, impacting not only the security and privacy of EU officials but also the very foundations of our democracy.

The Rise of Predictive Policing

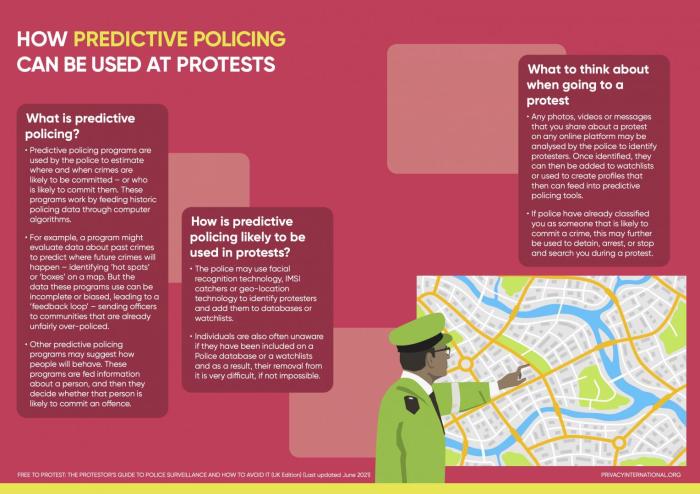

Predictive policing, a relatively recent development in law enforcement, utilizes data analysis and statistical modeling to anticipate future criminal activity. By analyzing historical crime data, social factors, and environmental conditions, predictive policing algorithms aim to identify areas and individuals at a higher risk of criminal involvement.


While its proponents argue that it can help allocate resources efficiently and prevent crime, the technology has also sparked ethical concerns and debates regarding its potential for bias and misuse.

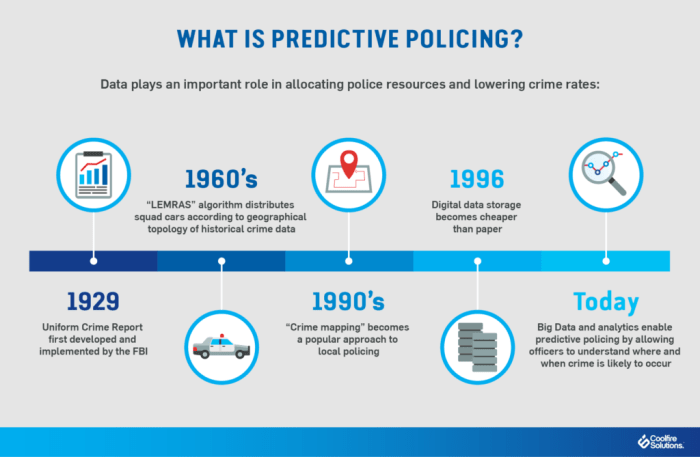

Historical Development of Predictive Policing Technologies

Predictive policing has evolved over several decades, with its roots in the development of crime mapping and statistical analysis techniques. Early forms of predictive policing relied on simple statistical models to identify crime hotspots and allocate police resources accordingly. However, advancements in computing power, data availability, and machine learning algorithms have enabled the development of more sophisticated predictive models.

- 1980s-1990s: The development of Geographic Information Systems (GIS) allowed law enforcement agencies to map crime incidents spatially, providing valuable insights into crime patterns and hotspots. This marked the beginning of spatial crime analysis and the use of data-driven approaches to allocate police resources.

- Early 2000s: The emergence of machine learning and data mining techniques fueled the development of more complex predictive models. Researchers began to explore the use of historical crime data, social indicators, and environmental factors to predict future crime events.

- 2010s-Present: Predictive policing technologies have gained significant traction, with numerous agencies around the world implementing these tools. These technologies leverage advanced algorithms, including machine learning, artificial intelligence, and deep learning, to analyze vast amounts of data and predict crime patterns.
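The simple hotspot models described above can be illustrated with a short sketch. This is not any agency’s actual method: it assumes incidents arrive as latitude/longitude pairs, and the function name `hotspot_cells`, the grid size, and the sample coordinates are all hypothetical. It bins incidents into square grid cells and returns the busiest ones.

```python
from collections import Counter


def hotspot_cells(incidents, cell_size=0.01, top_n=3):
    """Bin (lat, lon) points into square grid cells and return the busiest cells."""
    counts = Counter()
    for lat, lon in incidents:
        # Floor division assigns each point to the grid cell that contains it.
        cell = (int(lat // cell_size), int(lon // cell_size))
        counts[cell] += 1
    # Each entry is ((row, column), number_of_incidents), busiest first.
    return counts.most_common(top_n)


# Made-up coordinates, for illustration only.
sample = [(50.8467, 4.3525), (50.8469, 4.3528), (50.8461, 4.3521), (50.8402, 4.3710)]
print(hotspot_cells(sample, cell_size=0.01, top_n=2))
```

A count like this only reflects where incidents were recorded, which is one reason the more complex models that followed inherited the same data limitations.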

Rationale for Using Data Analysis to Predict Crime

The rationale behind predictive policing rests on the assumption that crime is not a random event but rather a pattern that can be analyzed and predicted. By identifying factors that contribute to crime, such as poverty, unemployment, and gang activity, law enforcement agencies can proactively deploy resources to areas at a higher risk of crime.

Proponents of predictive policing argue that it can:

- Improve resource allocation: By identifying high-risk areas, predictive policing can help police departments allocate resources more efficiently, focusing on areas where crime is most likely to occur.

- Reduce crime: By identifying individuals at risk of committing crimes, law enforcement can intervene early to prevent criminal activity and provide support services to address underlying issues.

- Enhance public safety: By predicting potential crime events, law enforcement can take proactive measures to prevent crime and ensure public safety.

Examples of Predictive Policing Projects Implemented Globally

Numerous predictive policing projects have been implemented worldwide, showcasing the diverse applications and impact of this technology. Here are a few examples:

- Chicago’s Strategic Decision Support System (SDS): Implemented in 2012, SDS uses historical crime data and social indicators to identify areas at risk of violent crime. The system provides officers with real-time crime predictions, allowing them to deploy resources strategically and proactively.

- PredPol: PredPol is a company that provides predictive policing software to law enforcement agencies. Its algorithms analyze historical crime data to predict crime patterns and identify high-risk areas. PredPol has been implemented in cities such as Los Angeles, San Francisco, and Seattle.

- The UK’s “Operation Data Horizon”: This project, launched in 2012, uses data analysis to identify individuals at risk of committing crime. The program has been criticized for its potential for bias and misuse, leading to calls for greater transparency and accountability.

The EU’s Stance on Predictive Policing

The European Union (EU) is grappling with the implications of predictive policing, a technology that uses data analysis to anticipate and prevent crime. This technology, while promising in its potential to enhance public safety, also raises significant concerns about data privacy, algorithmic bias, and the erosion of fundamental rights.

The EU’s stance on predictive policing is shaped by its commitment to upholding human rights and its comprehensive legal framework governing data protection and surveillance.

Data Privacy and Surveillance

The EU’s legal framework on data privacy and surveillance is a cornerstone of its approach to predictive policing. The General Data Protection Regulation (GDPR), which came into effect in 2018, sets stringent rules for the collection, processing, and storage of personal data.

The GDPR emphasizes the principles of lawfulness, fairness, and transparency, and it grants individuals significant rights over their data, including the right to access, rectification, erasure, and restriction of processing. Furthermore, the EU’s legal framework includes the Law Enforcement Directive (Directive (EU) 2016/680) on data protection in law enforcement, which aims to strike a balance between public security and individual privacy.

The directive sets requirements for how police and judicial authorities process personal data, which extend to predictive policing tools, and emphasizes the need for a legal basis, proportionality, and oversight.

Ethical Concerns Surrounding Predictive Policing

The ethical concerns surrounding predictive policing in the EU context stem from the potential for misuse and the impact on fundamental rights. One key concern is the potential for bias and discrimination in predictive policing algorithms. These algorithms are trained on historical data, which may reflect existing societal biases, leading to discriminatory outcomes against certain groups.

For instance, an algorithm trained on data showing a higher crime rate in certain neighborhoods could disproportionately target individuals residing in those areas, regardless of their actual criminal activity. Another ethical concern is the potential for over-policing and the erosion of trust in law enforcement.

Predictive policing tools could lead to increased surveillance and targeting of individuals based on their perceived risk, even if they have not committed any crime. This could create a chilling effect on civil liberties and undermine the principle of innocent until proven guilty.
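The over-policing concern can be made concrete with a deliberately simplified simulation. It is a sketch under stated assumptions, not a model of any real system: two hypothetical districts have identical true crime rates, incidents are only recorded where patrols are present, and all patrols go to whichever district has the most recorded incidents. The function name `simulate_feedback` and all numbers are invented for illustration.

```python
def simulate_feedback(recorded, true_rate, rounds=10, patrols=10):
    """Each round, send all patrols to the district with the most recorded incidents.

    Incidents are only recorded where patrols are present, so the records
    reflect where police looked rather than where crime actually happened.
    """
    recorded = list(recorded)  # copy so the caller's list is not modified
    for _ in range(rounds):
        target = recorded.index(max(recorded))
        # New records appear only in the patrolled district.
        recorded[target] += patrols * true_rate[target]
    return recorded


# Identical true rates, but a small initial gap in the historical records.
print(simulate_feedback(recorded=[11, 10], true_rate=[0.5, 0.5]))
```

Under these assumptions the district that starts with one extra recorded incident accumulates every new record, while the other district’s count never changes. Real deployments are more nuanced, but the sketch shows how a small historical skew can be reinforced rather than corrected.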

Potential for Bias and Discrimination in Predictive Policing Algorithms

The potential for bias and discrimination in predictive policing algorithms is a significant concern in the EU. Algorithms are trained on historical data, which can reflect existing societal biases. This can lead to algorithms that disproportionately target certain groups, such as racial minorities or individuals from disadvantaged socioeconomic backgrounds.

For example, a predictive policing algorithm trained on data showing a higher crime rate in certain neighborhoods could disproportionately target individuals residing in those areas, even if they have not committed any crime. This could lead to over-policing and the erosion of trust in law enforcement.

To mitigate the risk of bias, the EU emphasizes the importance of transparency, accountability, and human oversight in the development and deployment of predictive policing tools.

The GDPR requires data controllers to process personal data fairly and restricts decisions based solely on automated processing. The EU also encourages the use of data anonymization techniques and the development of algorithms that are less susceptible to bias.
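As a rough illustration of the data-protection techniques mentioned above, the sketch below replaces a direct identifier with a keyed hash using Python’s standard `hmac` module. Note the assumption: this is pseudonymization, not full anonymization, because anyone holding the key can re-link the records, and pseudonymized data is still treated as personal data under the GDPR. The record fields, the key, and the function name `pseudonymize` are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical record and key; a real key would be stored separately from the data.
record = {"name": "Jane Example", "district": "D4", "incident_type": "burglary"}
key = b"hypothetical-secret-kept-outside-the-dataset"

safe_record = {**record, "name": pseudonymize(record["name"], key)}
print(safe_record)
```

The same input and key always produce the same token, so records can still be linked for analysis without exposing the name itself. Remaining fields such as district can still identify someone in combination, which is why this step alone does not make a dataset anonymous.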

The Case of EU Lawmakers as Targets

The use of predictive policing in the EU has sparked concerns about the potential targeting of individuals, including EU lawmakers. A specific project, developed by a private company, aimed to identify potential threats to EU officials using data analysis and machine learning.

While the project was ultimately abandoned due to ethical concerns, it raises crucial questions about the potential for misuse of predictive policing technologies and their impact on individual liberties.

Data Sources and Vulnerabilities

The project utilized a vast array of data sources to create its predictive models. These included publicly available information like social media posts, news articles, and official statements. Additionally, the project relied on data from various databases, including financial transactions, travel records, and communication logs.

The reliance on such diverse data sources raises significant concerns about data privacy and security. For example, social media posts can be easily misinterpreted, and financial transactions may not always reflect criminal activity. Moreover, the use of personal data without explicit consent raises serious ethical questions.

The project also relied on data from government agencies, which raises concerns about the potential for misuse of sensitive information. For instance, access to travel records could be used to track the movements of EU officials, potentially compromising their security and privacy.

The potential for misuse of this data is a serious concern, as it could lead to the targeting of individuals based on their political views, personal beliefs, or other factors that may not be relevant to actual threats.

Implications for Democracy and Governance

Predictive policing, with its potential to identify and preemptively address crime, raises significant concerns about its impact on democratic principles and citizen rights. While the promise of enhanced security is enticing, the technology’s inherent biases, potential for misuse, and lack of transparency necessitate careful consideration of its implications for a democratic society.

Potential for Abuse of Power and Surveillance

The potential for abuse of power and excessive surveillance is a major concern with predictive policing. These systems rely on large datasets that often contain sensitive personal information, raising concerns about privacy violations. The potential for misuse of this data by law enforcement agencies or other authorities is significant.

For instance, if a system misidentifies individuals as high-risk based on biased data, it could lead to discriminatory policing practices and disproportionate targeting of certain communities.

It is therefore essential that these systems are used responsibly and ethically, with appropriate safeguards in place to protect individual rights.

Transparency and Accountability

Transparency and accountability are crucial for ensuring the ethical and responsible use of predictive policing systems. The algorithms used in these systems are often complex and opaque, making it difficult for the public to understand how they work and how decisions are made.

This lack of transparency can lead to a lack of trust in the system and make it difficult to hold authorities accountable for potential misuse.

The public must have access to information about how these systems work, the data they use, and the decisions they make. This transparency is essential for building trust and ensuring that these systems are used responsibly.

Future Directions and Recommendations

The potential of predictive policing to enhance public safety is undeniable, but so are the risks associated with its misuse. Moving forward, a robust framework is essential to guide the ethical and responsible development and deployment of these technologies. This framework should address the concerns raised by the potential targeting of EU lawmakers, ensuring that predictive policing tools are used to promote justice and fairness while safeguarding democratic principles.

Recommendations for Ethical and Responsible Development

To mitigate the risks and ensure the fair and equitable application of predictive policing, a set of recommendations should be implemented for policymakers and technology developers. These recommendations are crucial for fostering trust in these technologies and ensuring their responsible use.

- Transparency and Accountability: Predictive policing algorithms should be transparent and their workings readily understandable to the public. This includes providing clear documentation of the data used, the models employed, and the reasoning behind the predictions generated. Regular audits and independent oversight are crucial to ensure accountability and prevent bias.

- Data Privacy and Security: Data used for predictive policing must be collected, stored, and processed ethically, respecting individuals’ privacy and security. Strict regulations should be implemented to prevent data breaches and ensure responsible data governance. Data should be anonymized and used only for its intended purpose.

- Bias Mitigation and Fairness: Algorithms should be rigorously tested for bias, ensuring that they do not disproportionately target specific demographics or communities. This includes addressing historical biases present in data and implementing mechanisms to identify and correct biased outcomes.

- Human Oversight and Intervention: Predictive policing systems should not replace human judgment but rather serve as a tool to assist law enforcement officers. Human oversight is essential to ensure that predictions are evaluated within the context of specific situations and that decisions are not solely based on algorithmic outputs.

- Public Engagement and Consultation: Public engagement and consultation are crucial to ensure that predictive policing technologies are developed and implemented in a way that reflects societal values and addresses public concerns. This includes involving communities, civil society organizations, and experts in the development and evaluation of these technologies.
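The bias-testing recommendation above can be sketched as a simple check that compares how often a model flags people in different groups. This is only one of several possible fairness measures, and everything here is an assumption for illustration: the function names `flag_rates` and `disparity_ratio`, the group labels, and the made-up predictions.

```python
def flag_rates(predictions, groups):
    """Return the share of people flagged as high-risk within each group."""
    totals, flagged = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        flagged[group] = flagged.get(group, 0) + int(pred)
    return {g: flagged[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest flag rate; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up predictions (1 = flagged as high-risk) and group labels.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = flag_rates(preds, groups)
print(rates, disparity_ratio(rates))
```

A ratio well below 1.0 signals that one group is flagged far more often than another and that the model warrants closer review. What threshold counts as acceptable is a policy decision, not something the code can settle.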

Framework for Ethical and Responsible Development

A comprehensive framework for the ethical and responsible development of predictive policing technologies should include the following key elements:

- Clear Ethical Principles: Defining a set of ethical principles to guide the development and use of predictive policing, emphasizing transparency, accountability, fairness, and respect for human rights.

- Independent Oversight and Review: Establishing independent oversight bodies to monitor the development, deployment, and impact of predictive policing technologies, ensuring that they are used ethically and effectively.

- Robust Legal and Regulatory Frameworks: Implementing clear legal and regulatory frameworks to govern the use of predictive policing, addressing data privacy, security, bias mitigation, and algorithmic transparency.

- Public Education and Awareness: Raising public awareness about predictive policing, its potential benefits and risks, and the importance of ethical considerations in its development and use.

- Continuous Evaluation and Improvement: Establishing mechanisms for ongoing evaluation and improvement of predictive policing systems, ensuring that they remain effective, unbiased, and aligned with ethical principles.

Mitigating Risks and Ensuring Fairness

Predictive policing, if not implemented responsibly, can exacerbate existing inequalities and create new injustices. To mitigate these risks, it is essential to:

- Address Data Biases: Ensure that the data used to train predictive policing algorithms is representative and free from biases that could perpetuate discrimination, and correct biased outcomes when they are identified.

- Focus on Risk Factors: Predictive policing systems should focus on identifying risk factors that are objectively measurable and demonstrably linked to criminal activity, avoiding subjective factors that could lead to discrimination.

- Promote Transparency and Explainability: Document the data used, the models employed, and the reasoning behind each prediction in a form the public can understand.

- Implement Human Oversight: Treat predictions as one input to an officer’s judgment, evaluated in the context of the specific situation, never as the sole basis for a decision.

- Encourage Public Participation: Engage with communities and stakeholders in the development and implementation of predictive policing technologies, ensuring that their concerns and perspectives are taken into account.
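The human oversight point in the list above can be expressed as a small gate in software: an algorithmic score on its own never authorizes action, and a named reviewer must sign off first. This is a minimal sketch with hypothetical names (`Prediction`, `record_review`, `may_act_on`) and invented values, not a description of any deployed system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    subject_ref: str                 # pseudonymous reference (hypothetical field)
    risk_score: float                # model output, assumed to lie between 0 and 1
    reviewer: Optional[str] = None   # who reviewed the prediction, if anyone
    approved: bool = False           # the reviewer's decision


def record_review(prediction: Prediction, reviewer: str, approved: bool) -> Prediction:
    """Attach a named human decision to a prediction."""
    prediction.reviewer = reviewer
    prediction.approved = approved
    return prediction


def may_act_on(prediction: Prediction) -> bool:
    """A score alone never authorizes action; a named reviewer must have approved it."""
    return prediction.reviewer is not None and prediction.approved


p = Prediction(subject_ref="ref-001", risk_score=0.92)
print(may_act_on(p))   # no review yet
record_review(p, reviewer="analyst-7", approved=True)
print(may_act_on(p))   # reviewed and approved
```

Recording the reviewer’s identity alongside the decision also supports the accountability and audit recommendations, since each action can be traced back to a person rather than to a score.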