AI governance takes center stage as we navigate the rapid advancement of artificial intelligence. The potential of AI is immense, but so are the risks if we fail to implement robust governance frameworks. This is where the concept of “critical AI” comes into play, focusing on ensuring the safety and reliability of AI systems in crucial sectors like healthcare, transportation, and finance.

But safety alone isn’t enough. We also need to build trust in AI, which requires transparency, accountability, and explainability. This is where “explainable AI” steps in, demystifying AI decisions and empowering users to understand how AI systems arrive at their conclusions.

In this blog post, we’ll delve deeper into the importance of AI governance, explore the key principles of trustworthy AI, and examine the role of explainable AI in building confidence and transparency. We’ll also discuss governance mechanisms, case studies, and future directions for AI governance, paving the way for a future where AI is developed and deployed responsibly.

The Importance of AI Governance

The rapid advancement of artificial intelligence (AI) technologies has brought about a new era of innovation and transformation across various industries. From self-driving cars to personalized healthcare, AI is poised to revolutionize our lives in unprecedented ways. However, this rapid progress also raises critical concerns about the potential risks and ethical challenges associated with unregulated AI deployment.

Therefore, establishing robust AI governance frameworks is paramount to ensuring responsible and ethical development and use of AI.

The Necessity of AI Governance Frameworks

AI governance frameworks are essential to address the potential risks and ethical challenges posed by the increasing use of AI. These frameworks provide a set of guidelines, principles, and mechanisms to ensure that AI development and deployment align with societal values, ethical considerations, and legal requirements.

“AI governance is not just about regulating AI; it’s about shaping its development and deployment in a way that benefits society as a whole.” Dr. Fei-Fei Li, Stanford University

Potential Risks and Ethical Challenges of Unregulated AI

The lack of appropriate governance can lead to several risks and ethical challenges, including:

- Bias and Discrimination: AI systems can perpetuate and amplify existing biases in data, leading to unfair or discriminatory outcomes. For example, an AI-powered hiring system trained on biased data could unfairly discriminate against certain demographic groups.

- Privacy Violations: AI systems often collect and process vast amounts of personal data, raising concerns about privacy violations. For instance, facial recognition technologies can be used to track individuals without their consent, leading to potential surveillance abuses.

- Job Displacement: The automation capabilities of AI have the potential to displace human workers in certain sectors, raising concerns about unemployment and economic inequality.

- Weaponization of AI: The use of AI in autonomous weapons systems raises serious ethical concerns about the potential for unintended consequences and the loss of human control over warfare.

- Lack of Transparency and Explainability: Some AI systems are complex and opaque, making it difficult to understand how they arrive at their decisions. This lack of transparency can hinder accountability and trust in AI systems.

Key Stakeholders in AI Governance

Various stakeholders play crucial roles in shaping AI governance frameworks. These include:

- Governments: Governments are responsible for establishing legal and regulatory frameworks to govern AI development and deployment. They can set standards, enforce regulations, and provide oversight to ensure responsible AI use.

- Industry Leaders: Industry leaders play a critical role in developing and implementing ethical AI practices within their organizations. They can adopt industry-specific guidelines, promote responsible AI development, and ensure compliance with regulations.

- Researchers: Researchers are at the forefront of AI development and have a responsibility to conduct research ethically and address potential risks. They can contribute to the development of AI governance frameworks by conducting research on ethical AI design, bias detection, and explainability.

- Civil Society: Civil society organizations play a vital role in advocating for ethical AI development and use. They can raise awareness about potential risks, engage in public dialogue, and hold stakeholders accountable for their actions.

Critical AI

Critical AI refers to AI systems deployed in sectors where failures can have significant consequences for human lives, property, or the environment. These sectors include healthcare, transportation, finance, and critical infrastructure.

The importance of critical AI lies in its potential to revolutionize these sectors by automating complex tasks, improving efficiency, and enhancing safety. However, deploying AI in such critical areas also presents unique challenges and risks that require careful consideration and mitigation.

Risks and Challenges of Critical AI

Deploying AI in critical sectors poses significant risks and challenges, demanding a robust approach to safety, reliability, and accountability.

- Safety and Reliability: AI systems are susceptible to errors, biases, and vulnerabilities that can lead to unintended consequences in critical applications. For example, a faulty AI system controlling autonomous vehicles could cause accidents, while an inaccurate AI-powered diagnostic tool could lead to misdiagnosis and inappropriate treatment.

- Transparency and Explainability: Understanding how AI systems reach their decisions is crucial for ensuring trust and accountability. However, many AI algorithms are complex and opaque, making it difficult to understand their reasoning and identify potential biases or errors.

- Data Quality and Bias: AI systems are trained on data, and biases present in the training data can be amplified and reflected in the system’s output. For example, AI systems used for loan applications may perpetuate existing biases if the training data reflects historical discriminatory practices.

- Security and Privacy: Critical AI systems often handle sensitive data, making them attractive targets for cyberattacks and data breaches. These breaches could compromise patient privacy in healthcare, disrupt financial transactions, or endanger critical infrastructure.

- Ethical Considerations: Deploying AI in critical sectors raises ethical questions about responsibility, accountability, and the potential for job displacement. It is crucial to develop ethical guidelines and frameworks to ensure that AI is used responsibly and benefits society as a whole.

Rigorous Testing, Validation, and Oversight

To address the risks and challenges associated with critical AI, rigorous testing, validation, and oversight mechanisms are essential. These mechanisms help ensure that AI systems are safe, reliable, and operate as intended.

- Testing and Validation: Thorough testing and validation of AI systems are crucial to identify and mitigate potential errors and biases. This includes simulating real-world scenarios, evaluating performance under various conditions, and verifying that the system meets predefined safety and reliability standards.

- Oversight and Governance: Robust oversight mechanisms are essential to ensure that AI systems are developed and deployed responsibly. This includes establishing clear guidelines for AI development, deployment, and use, as well as creating independent bodies to monitor and regulate AI systems in critical sectors.

- Transparency and Explainability: Efforts to improve transparency and explainability in AI systems are essential for building trust and accountability. This includes developing techniques to make AI decision-making processes more understandable and providing clear explanations for AI outputs.

- Continuous Monitoring and Evaluation: Ongoing monitoring and evaluation of AI systems in critical sectors are crucial to detect and address potential problems. This includes tracking system performance, identifying emerging risks, and adapting AI systems to changing conditions and new data.
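One way to picture the continuous monitoring described above is a drift check that compares the scores a model produced at validation time with the scores it produces in production. The sketch below uses the population stability index (PSI), a common choice but only one of several; the function name, the bin count, and the sample data are assumptions made for illustration, and the thresholds often quoted for PSI (roughly 0.1 for "watch" and 0.25 for "investigate") are rules of thumb rather than standards.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """Compare two samples of a numeric score; a larger PSI means more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

baseline = [i / 100 for i in range(100)]            # hypothetical validation-time scores
shifted = [min(s + 0.3, 0.99) for s in baseline]    # hypothetical production scores
print(population_stability_index(baseline, baseline))  # identical samples give 0.0
print(population_stability_index(baseline, shifted))   # clearly positive: the distribution moved
```

In practice a check like this would run on a schedule, with an alert routed to a human reviewer when the index crosses whatever threshold the organization has agreed on.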

Trustworthy AI

In a world increasingly reliant on artificial intelligence (AI), building trust in these systems is paramount. Trustworthy AI goes beyond mere functionality; it encompasses the ethical, responsible, and transparent development and deployment of AI systems.

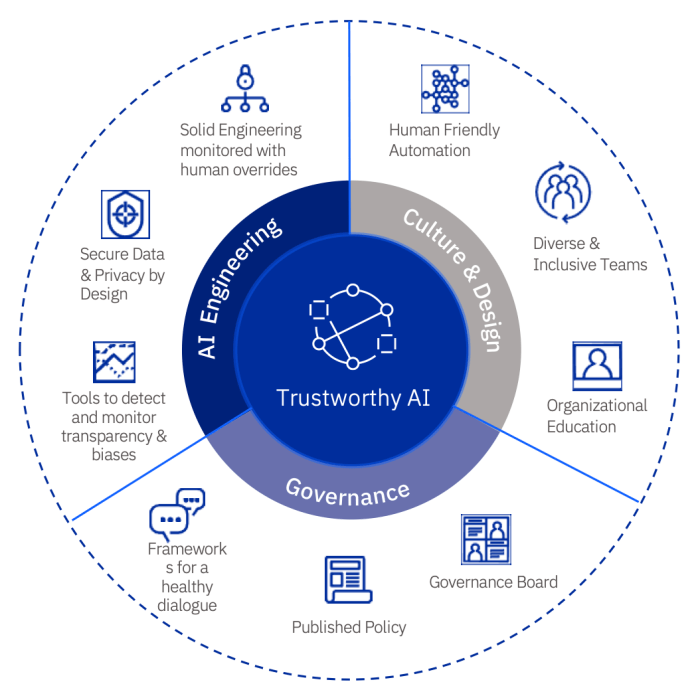

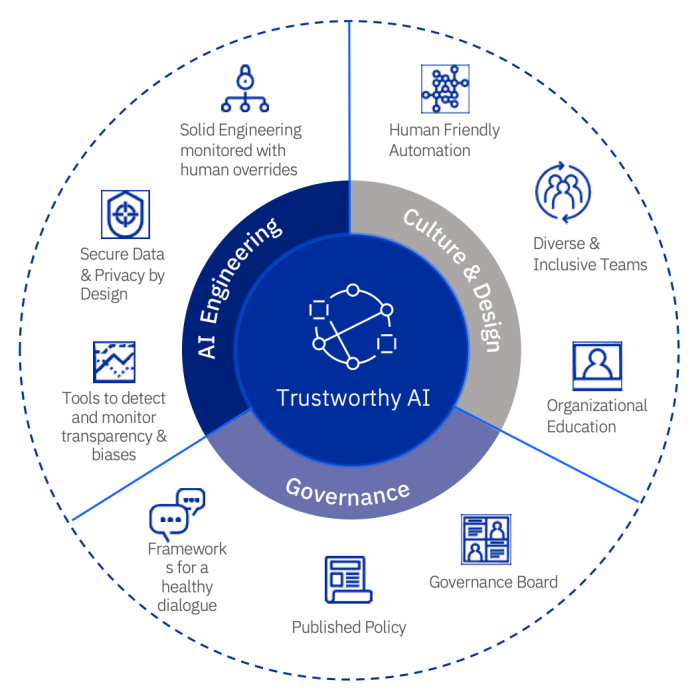

Core Principles of Trustworthy AI

Trustworthy AI rests on three fundamental principles: fairness, accountability, and transparency.

- Fairness: AI systems should be designed and deployed in a way that avoids discrimination and bias, ensuring equitable outcomes for all individuals.

- Accountability: There should be clear mechanisms for identifying and addressing potential harms or errors caused by AI systems. This involves establishing who is responsible for the actions of an AI system and ensuring that the people and organizations who develop and deploy it are held accountable.

- Transparency: AI systems should be understandable and explainable, allowing users to comprehend how decisions are made and to challenge potential biases or errors. This fosters trust and allows for informed decision-making.

Factors Contributing to Trust in AI

Several factors contribute to building trust in AI systems:

- Data Privacy: Ensuring the secure and responsible handling of personal data used to train and operate AI systems is crucial. This includes obtaining informed consent, implementing robust data security measures, and adhering to privacy regulations.

- Algorithmic Bias Mitigation: AI systems can inherit and amplify biases present in the data they are trained on. Identifying and mitigating these biases through techniques like data augmentation, fairness-aware algorithms, and regular audits is essential for building trust.

- User Understanding: Providing users with clear and accessible information about how AI systems work, their limitations, and the potential impact of their decisions is vital for building trust and empowering users to interact with AI responsibly.
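The regular audits mentioned under algorithmic bias mitigation can start with something as simple as comparing approval rates across groups. The following is a minimal sketch, assuming a hypothetical audit log of `(group, approved)` pairs; the function names and the data are invented for illustration. The ratio it computes is often compared against the "four-fifths" rule of thumb (a ratio below 0.8 prompts a closer look), which is a screening heuristic, not a verdict on fairness.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical audit log: (group label, whether the model approved).
audit_log = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratio = disparate_impact_ratio(audit_log)
print(f"disparate impact ratio: {ratio:.2f}")  # prints 0.50 for this log
```

A low ratio does not by itself prove discrimination, and a high one does not rule it out; it is a signal that tells auditors where to look next.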

The Role of Explainability and Interpretability

Explainability and interpretability play a critical role in fostering trust and accountability in AI.

- Explainability refers to the ability to provide clear and understandable explanations for the decisions made by AI systems. This allows users to understand the reasoning behind AI outputs and to identify potential biases or errors.

- Interpretability focuses on understanding the internal workings of AI models, allowing developers to diagnose potential problems, identify areas for improvement, and ensure the system’s behavior aligns with ethical and societal values.

Explainable AI

Imagine a self-driving car making a sudden stop, or a loan application being denied by an AI-powered system. Wouldn’t you want to know why these decisions were made? This is where Explainable AI (XAI) comes into play. XAI aims to demystify the inner workings of AI systems, making their decisions more transparent and understandable to humans.

The Importance of Explainable AI

XAI is crucial for building trust and confidence in AI systems. When we understand how an AI system arrives at its conclusions, we can better assess its reliability and fairness. This is especially important in high-stakes applications like healthcare, finance, and law enforcement, where decisions can have significant consequences.

Explainability Techniques

There are various techniques for making AI systems more explainable. Here are a few examples:

Decision Trees

Decision trees are a simple yet powerful way to visualize the decision-making process of an AI system. They break down a complex decision into a series of simple rules, making it easy to understand how the system arrives at its conclusion.
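To make this concrete, here is a minimal sketch of a hand-written decision tree for a loan decision that returns both the outcome and the path of tests that led to it. The features, thresholds, and outcomes are hypothetical and chosen only for illustration; a real tree would be learned from data and validated.

```python
# Each internal node is (feature, threshold, subtree_if_at_or_below, subtree_if_above).
# Leaves are plain strings. All values here are hypothetical.
TREE = (
    "credit_score", 649, "decline",
    ("debt_to_income", 0.40, "approve", "refer to human reviewer"),
)

def decide(tree, applicant):
    """Walk the tree and record every comparison made along the way."""
    path = []
    node = tree
    while not isinstance(node, str):
        feature, threshold, low, high = node
        value = applicant[feature]
        if value <= threshold:
            path.append(f"{feature} = {value} <= {threshold}")
            node = low
        else:
            path.append(f"{feature} = {value} > {threshold}")
            node = high
    return node, path

decision, path = decide(TREE, {"credit_score": 700, "debt_to_income": 0.30})
print(decision)          # approve
for step in path:
    print("  because", step)
```

The recorded path is the explanation: every decision can be traced back to a short list of comparisons that a non-specialist can read.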

Rule-Based Systems

Rule-based systems explicitly define the rules that an AI system uses to make decisions. These rules are often expressed in a human-readable format, making it easier to understand the system’s logic.
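As an illustration, the sketch below pairs each rule with a human-readable description so that every outcome comes with the rule that produced it. The rules, field names, and thresholds are invented for this example (a hypothetical transaction screening policy) and do not come from any real system.

```python
# Each rule is (human-readable description, condition, outcome).
# Rules are checked in order and the first match wins. All rules are hypothetical.
RULES = [
    ("Transactions over 10,000 need manual review",
     lambda t: t["amount"] > 10_000, "manual_review"),
    ("Transactions from a new device before 6 a.m. are flagged",
     lambda t: t["new_device"] and t["hour"] < 6, "flag"),
    ("Everything else is allowed",
     lambda t: True, "allow"),
]

def evaluate(transaction):
    """Return the outcome together with the description of the rule that fired."""
    for description, condition, outcome in RULES:
        if condition(transaction):
            return outcome, description

outcome, reason = evaluate({"amount": 250, "new_device": True, "hour": 3})
print(outcome, "-", reason)
```

Because the rule list is ordinary data, it can be reviewed, versioned, and audited like any other policy document.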

Feature Attribution Methods

These methods identify the key features that contributed most to an AI system’s decision. By understanding which features were most influential, we can gain insights into the system’s reasoning process. For example, in a loan application, feature attribution might reveal that the applicant’s credit score and income were the most significant factors in the decision.
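For a linear scoring model, feature attribution can be computed exactly: each feature's contribution is its weight times how far the applicant's value sits from a reference point. The sketch below assumes a hypothetical model, hypothetical weights, and a hypothetical baseline applicant; none of these come from a real lender. Methods such as SHAP or LIME aim to produce comparable per-feature scores for non-linear models, where no exact decomposition like this is available.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"credit_score": 0.01, "income_thousands": 0.02, "open_loans": -0.5}
BIAS = -7.0
# Assumed reference applicant (for example, a portfolio average) used as the comparison point.
BASELINE = {"credit_score": 680, "income_thousands": 55, "open_loans": 2}

def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)

def attributions(applicant):
    """Per-feature contribution to the score difference from the baseline."""
    return {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}

applicant = {"credit_score": 720, "income_thousands": 40, "open_loans": 4}
contrib = attributions(applicant)
# List features from most to least influential, with the sign of their effect.
for feature, value in sorted(contrib.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>18}: {value:+.2f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, so the explanation accounts for the whole decision rather than a selected part of it.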

Challenges and Limitations of Explainable AI

While XAI holds great promise, there are also challenges and limitations to consider.

Complexity of AI Models

Many AI models, especially deep neural networks, are highly complex and difficult to interpret. Extracting meaningful explanations from these models can be challenging.

Trade-off Between Accuracy and Explainability

Sometimes, simplifying an AI model to make it more explainable can lead to a decrease in accuracy. Finding the right balance between accuracy and explainability is an ongoing challenge.

Data Privacy Concerns

Explainable AI can sometimes reveal sensitive information about the data used to train the AI system. This raises concerns about data privacy and security.

Impact on User Trust and Decision-Making

XAI can significantly impact user trust and decision-making by:

Increasing Transparency and Accountability

When users understand how an AI system works, they are better placed to judge when to trust its decisions and to hold the people who operate it accountable.

Facilitating Human-AI Collaboration

By making AI systems more transparent, XAI can facilitate collaboration between humans and AI, allowing humans to better understand and guide AI decisions.

Improving User Experience

XAI can provide users with more meaningful feedback about AI decisions, leading to a more positive user experience.

Governance Mechanisms for Explainable AI

The development and deployment of explainable AI (XAI) systems necessitate specific governance mechanisms to address the unique challenges they present. These mechanisms aim to promote transparency, accountability, and responsible use of AI systems.

Regulations and Standards for Explainable AI

To foster trust and confidence in XAI systems, regulatory frameworks and industry standards are crucial. These guidelines provide clear expectations for the design, development, and deployment of explainable AI.

- Data Protection Regulations: Existing regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) can be leveraged to promote transparency and accountability in AI systems. These regulations require organizations to tell individuals how their data is used. The GDPR goes further for solely automated decisions, entitling individuals to meaningful information about the logic involved.

- AI Ethics Guidelines: Organizations like the OECD and the European Union have developed ethical guidelines for AI, emphasizing the importance of explainability, fairness, and non-discrimination. These guidelines provide a framework for responsible AI development and deployment, encouraging the use of XAI techniques to ensure that AI systems operate ethically and fairly.

- Industry Standards: Industry bodies like the IEEE and ISO are developing standards relevant to explainable AI, focusing on specific aspects like model interpretability, data quality, and risk assessment. These standards can provide a common language and framework for assessing the explainability of AI systems, facilitating greater consistency and comparability across different applications.

Independent Audits and Assessments

Independent audits and assessments play a vital role in verifying the explainability of AI systems. These assessments ensure that the explanations provided by AI systems are accurate, reliable, and understandable to stakeholders.

- Third-Party Audits: Independent auditors can evaluate the explainability of AI systems by assessing the quality of the explanations provided, the methods used to generate explanations, and the overall transparency of the system. These audits can help identify potential biases, inconsistencies, or limitations in the explanations, providing valuable insights for improving the system’s explainability.

- Data and Model Validation: Independent assessments can also involve validating the data used to train the AI system and evaluating the performance of the model. This helps ensure that the explanations provided by the system are based on accurate and reliable data, and that the model’s performance is consistent with the explanations provided.

- User-Centric Evaluation: To ensure that explanations are truly understandable to human users, independent assessments should involve user-centric evaluations. This involves testing the explanations with representative users and gathering feedback on their clarity, comprehensibility, and usefulness. This feedback can help identify areas where the explanations need to be improved to better meet the needs of users.

Case Studies and Best Practices

Successfully implementing AI governance frameworks requires real-world examples and lessons learned. This section explores case studies showcasing the impact of explainable AI on decision-making and user trust, highlighting successful implementations and challenges faced.

Examples of Successful Implementations of AI Governance Frameworks

These examples demonstrate the practical application of AI governance principles, including specific regulations, standards, and best practices.

- The European Union’s General Data Protection Regulation (GDPR): This comprehensive data privacy law emphasizes transparency and accountability in the use of personal data, including by AI systems. It entitles individuals who are subject to solely automated decisions to meaningful information about the logic involved, and allows them to access, correct, and delete their data. The GDPR has influenced the development of explainable AI frameworks and encouraged the use of techniques like data anonymization and differential privacy to enhance privacy and transparency.

- The California Consumer Privacy Act (CCPA): This US state law, similar in spirit to the GDPR, grants consumers rights regarding their personal data, including the right to know what personal information a business collects and how it is used. These disclosure rights help consumers understand and control how their data feeds automated systems.

- The US National Institute of Standards and Technology (NIST) AI Risk Management Framework: This voluntary framework provides a comprehensive approach to managing risks associated with AI systems. It emphasizes the importance of explainability, transparency, and accountability throughout the AI lifecycle, from development to deployment and monitoring. The NIST framework offers practical guidance for organizations to implement robust AI governance mechanisms.

Case Studies Demonstrating the Impact of Explainable AI on Decision-Making and User Trust

These case studies showcase the real-world impact of explainable AI on various domains, highlighting how transparency and understanding contribute to improved decision-making and increased user trust.

- Healthcare: In healthcare, explainable AI is crucial for building trust in AI-powered diagnostic tools. For instance, researchers at Stanford University developed an explainable AI system for diagnosing pneumonia from chest X-rays. The system not only provided diagnoses but also generated explanations highlighting the key features in the X-ray images that contributed to each diagnosis. This transparency allowed doctors to better understand the system’s reasoning and build trust in its recommendations.

- Finance: Explainable AI is essential for building trust in AI-powered credit scoring models. By providing explanations for credit decisions, lenders can demonstrate fairness and transparency, enhancing user trust and reducing the risk of bias. For example, a financial institution might use explainable AI to highlight factors like income stability, credit history, and debt-to-income ratio that contribute to a specific credit score. This transparency allows individuals to understand the rationale behind their credit scores and take steps to improve their financial standing.

- Criminal Justice: Explainable AI is critical for promoting fairness and transparency in AI-powered risk assessment tools used in the criminal justice system. By providing explanations for risk scores, these tools can help reduce bias and ensure that decisions are based on relevant factors. For instance, a study by researchers at the University of Chicago found that explainable AI can help identify and mitigate biases in risk assessment tools used to predict recidivism. This transparency can contribute to fairer sentencing and improve public trust in the criminal justice system.

Challenges and Lessons Learned from Real-World Applications of AI Governance

While significant progress has been made in AI governance, real-world applications present challenges and lessons learned that inform ongoing development and refinement.

- Complexity of Explainability Techniques: Developing effective explainable AI techniques is challenging, as different approaches have varying strengths and limitations. Selecting the appropriate technique for a specific application requires careful consideration of factors like the complexity of the AI model, the data used, and the intended audience for the explanations. Additionally, balancing explainability with model accuracy and efficiency is crucial.

- Data Privacy and Security: Explainable AI can raise concerns about data privacy and security. Sharing insights from AI models might inadvertently reveal sensitive information about individuals or organizations. Balancing the need for transparency with data privacy and security requires careful consideration of data anonymization techniques, differential privacy, and other methods to protect sensitive information.

- Ethical Considerations: Implementing AI governance frameworks raises ethical considerations, particularly regarding bias and fairness. Explainable AI can help identify and mitigate biases in AI systems, but it’s essential to ensure that explanations are accurate, understandable, and unbiased. This requires careful consideration of ethical principles and the potential impact of AI systems on individuals and society.
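The differential privacy mentioned under data privacy and security can be illustrated with the Laplace mechanism, one standard way to publish an aggregate statistic without exposing any single individual. The sketch below releases a noisy count; the function names, the example count, and the choice of epsilon are assumptions for illustration, and a production system would rely on a vetted privacy library rather than hand-rolled sampling.

```python
import random

def laplace_noise(scale, rng):
    """Sample from Laplace(0, scale).

    The difference of two independent exponential variables with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    A counting query changes by at most 1 when one person is added or removed
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # seeded only so the example is repeatable
# Hypothetical query: how many applicants in the audit sample were declined.
print(private_count(100, epsilon=1.0, rng=rng))
```

A smaller epsilon adds more noise and gives stronger privacy; a larger epsilon gives a more accurate count with weaker protection. Choosing that value is a governance decision as much as a technical one.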

Future Directions for AI Governance

The rapid evolution of artificial intelligence (AI) presents both extraordinary opportunities and significant challenges. As AI technologies continue to advance at an unprecedented pace, it becomes increasingly crucial to establish robust governance frameworks that ensure responsible development and deployment. This necessitates a forward-looking approach that anticipates emerging trends and adapts to evolving ethical considerations.

Emerging Trends and Challenges

The landscape of AI governance is constantly shifting, driven by the emergence of new technologies and applications. The rise of synthetic data, autonomous systems, and AI-driven decision-making presents both opportunities and challenges for governance frameworks.

- Synthetic Data: The increasing use of synthetic data, generated by AI algorithms to mimic real-world data, raises questions about data privacy, bias, and the potential for misuse. Governance frameworks must address the ethical implications of synthetic data and ensure its responsible use.

- Autonomous Systems: The development of autonomous systems, such as self-driving cars and robots, poses unique challenges for governance. Establishing clear accountability mechanisms for autonomous systems is essential to address potential risks and ensure public safety.

- AI-Driven Decision-Making: As AI increasingly influences decision-making in various sectors, from healthcare to finance, it becomes critical to ensure fairness, transparency, and accountability. Governance frameworks must address potential biases in AI algorithms and establish mechanisms for human oversight of AI-driven decisions.

Potential Future Directions for AI Governance Frameworks

Addressing the emerging trends and challenges requires a proactive approach to AI governance. Future frameworks should prioritize international cooperation, evolving ethical considerations, and the development of new technologies for AI oversight.

- International Cooperation: The global nature of AI necessitates international cooperation to establish common standards and principles for AI governance. This can involve sharing best practices, developing joint research initiatives, and coordinating regulatory efforts.

- Evolving Ethical Considerations: As AI technologies advance, ethical considerations will continue to evolve. Governance frameworks must be flexible and adaptable to address emerging ethical challenges, such as the impact of AI on employment, privacy, and social equity.

- New Technologies for AI Oversight: The development of new technologies for AI oversight, such as AI auditing tools and explainable AI techniques, can enhance transparency and accountability. These technologies can help ensure that AI systems are developed and deployed responsibly and that their decisions can be understood and challenged.

Vision for a Responsible AI Future

A future where AI is developed and deployed responsibly requires a collective effort. By prioritizing international cooperation, embracing evolving ethical considerations, and investing in new technologies for AI oversight, we can create a world where AI benefits all while mitigating potential risks.

This vision encompasses:

- AI for the Public Good: AI should be used to address pressing global challenges, such as climate change, poverty, and disease.

- Inclusive AI: AI technologies should be accessible to all, regardless of background or socioeconomic status.

- Human-Centered AI: AI should be designed to augment human capabilities and enhance human well-being, not replace humans.