The EU’s adoption of the AI Act marks a pivotal moment in the global landscape of AI regulation. This landmark legislation, the first of its kind, aims to establish a comprehensive framework for the development, deployment, and use of AI systems within the European Union.

The Act is designed to ensure that AI technologies are developed and used responsibly, ethically, and in a manner that benefits society while mitigating potential risks.

The EU AI Act is a testament to the growing recognition of the transformative power of artificial intelligence and the need for robust regulatory measures to guide its responsible development. It aims to strike a balance between promoting innovation and safeguarding fundamental rights, including privacy, safety, and non-discrimination.

This ambitious initiative has the potential to influence AI regulations worldwide, setting a precedent for other countries and regions seeking to establish ethical and effective AI governance.

The EU AI Act

The EU AI Act stands as a landmark piece of legislation, representing the world’s first comprehensive regulatory framework for artificial intelligence. It signifies a pivotal moment in the global landscape of AI governance, setting a precedent for how AI systems are developed, deployed, and regulated.

The Act’s far-reaching impact extends beyond the EU, potentially influencing AI regulations worldwide.

The EU AI Act’s Global Impact

The EU AI Act’s significance lies in its potential to shape the global landscape of AI regulation. Its principles and guidelines are likely to inspire and influence similar regulatory efforts in other regions, particularly those that prioritize ethical and responsible AI development.

- The Act’s risk-based approach to AI regulation, categorizing AI systems based on their potential risks, provides a framework that other countries can adapt and implement.

- The Act’s focus on transparency, accountability, and human oversight in AI systems sets a high bar for ethical AI development, prompting similar standards in other jurisdictions.

- The EU’s position as a global leader in data privacy and data protection, as exemplified by the General Data Protection Regulation (GDPR), reinforces the importance of ethical and responsible AI development, influencing similar regulations in other regions.

Comparison with Other AI Regulations

While the EU AI Act stands as a global first, other major regions are also developing their own AI regulations.

- The United States: While lacking a comprehensive federal AI law, the US has focused on AI governance through sector-specific regulations and guidelines. The National Institute of Standards and Technology (NIST) has published guidelines on AI risk management, while agencies like the Federal Trade Commission (FTC) have investigated potential AI-related consumer protection issues.

- China: China has adopted a more proactive approach to AI regulation, implementing guidelines and standards for AI development and deployment. The “New Generation Artificial Intelligence Development Plan” outlines a national strategy for AI development, while the “Provisions on the Administration of Deep Synthesis Internet Information Services” aim to regulate AI-generated content.

- Canada: Canada has adopted a multi-stakeholder approach to AI governance, with the “Directive on Automated Decision-Making” providing guidance for public sector use of AI. The Canadian government has also established the “Pan-Canadian Artificial Intelligence Strategy” to promote AI research and development.

Key Differences and Similarities

While the EU AI Act is a groundbreaking piece of legislation, it shares similarities and differences with existing or proposed AI regulations in other regions.

| Region | Key Features | Similarities | Differences |
|---|---|---|---|
| EU | Risk-based approach, focus on transparency, accountability, and human oversight | Emphasis on ethical AI development, transparency, and accountability | Comprehensive regulatory framework, specific provisions for high-risk AI systems |
| US | Sector-specific regulations, guidelines on AI risk management | Focus on AI risk management, consumer protection | Lack of a comprehensive federal AI law, reliance on sector-specific regulations |
| China | Guidelines and standards for AI development and deployment, national AI strategy | Emphasis on AI development and deployment, national AI strategy | More proactive approach to AI regulation, focus on national AI development |
| Canada | Multi-stakeholder approach, guidance for public sector use of AI, national AI strategy | Focus on ethical AI development, AI research and development | Multi-stakeholder approach, focus on public sector use of AI |

Key Provisions of the EU AI Act

The EU AI Act is a groundbreaking piece of legislation that aims to regulate the development and deployment of artificial intelligence (AI) systems in the European Union. This legislation categorizes AI systems based on their potential risks and establishes specific requirements for each category.

This framework promotes responsible AI development and deployment, ensuring ethical considerations and human oversight.

Risk Levels of AI Systems

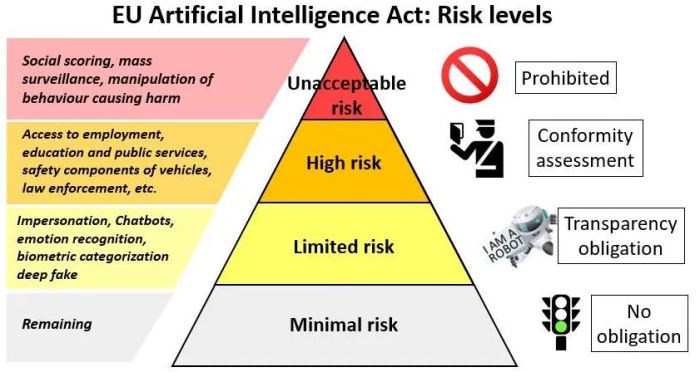

The EU AI Act categorizes AI systems into four risk levels based on their potential impact on safety, fundamental rights, and the environment. These risk levels determine the specific requirements and obligations for developers and deployers of AI systems.

- Unacceptable Risk AI Systems: These systems are considered to pose an unacceptable risk to safety, fundamental rights, or the environment. They are prohibited and may not be placed on the market or used within the EU. Examples include AI systems used for social scoring and, subject to narrow exceptions, real-time remote biometric identification (such as facial recognition) in publicly accessible spaces for law enforcement purposes. Military applications, including autonomous weapons, fall outside the Act’s scope rather than being prohibited by it.

- High-Risk AI Systems: These systems are identified as posing a high risk to safety, fundamental rights, or the environment. These systems require rigorous risk assessments, robust technical documentation, human oversight, and conformity assessments before they can be deployed. Examples include AI systems used in critical infrastructure, healthcare, education, and law enforcement, such as self-driving cars, medical diagnostic tools, and recruitment systems.

- Limited-Risk AI Systems: These systems are considered to pose a limited risk to safety, fundamental rights, or the environment. They are subject to less stringent requirements than high-risk systems but still require some transparency and documentation. Examples include chatbots and personalized recommendation systems.

- Minimal-Risk AI Systems: These systems are considered to pose minimal risk to safety, fundamental rights, or the environment. They are subject to minimal regulatory oversight and no specific requirements. Examples include AI systems used in video games, spam filters, entertainment, and marketing.
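
The four tiers can be read as a lookup from risk level to obligations. The following is a minimal Python sketch of that idea; the `RiskTier` enum, the `OBLIGATIONS` table, and the `EXAMPLE_USE_CASES` mapping are hypothetical names introduced for illustration, and the tier assigned to each use case simply mirrors the examples in the list above rather than a legal classification.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from the descriptions above (illustrative only).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: [
        "risk assessment",
        "technical documentation",
        "human oversight",
        "conformity assessment",
    ],
    RiskTier.LIMITED: ["transparency information"],
    RiskTier.MINIMAL: [],
}

# Hypothetical mapping of use cases to tiers, taken from the examples above.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "medical diagnostic tool": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "video game": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the illustrative obligations for a known example use case."""
    tier = EXAMPLE_USE_CASES[use_case]  # raises KeyError for unknown use cases
    return OBLIGATIONS[tier]


def may_be_deployed(use_case: str) -> bool:
    """Unacceptable-risk systems are prohibited; every other tier may be deployed."""
    return EXAMPLE_USE_CASES[use_case] is not RiskTier.UNACCEPTABLE


if __name__ == "__main__":
    for name in EXAMPLE_USE_CASES:
        print(name, may_be_deployed(name), obligations_for(name))
```

In practice, deciding which tier a system belongs to is the hard part and depends on the Act’s annexes and the system’s intended purpose; the lookup only shows how obligations follow once the tier is known.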

Requirements for Developers and Deployers of AI Systems

The EU AI Act outlines specific requirements for developers and deployers of AI systems, depending on the risk level of the system. These requirements aim to ensure the safety, transparency, and ethical use of AI.

- High-Risk AI Systems: Developers and deployers of high-risk AI systems must comply with a comprehensive set of requirements, including:

  - Risk Assessment: Conduct a thorough risk assessment to identify and mitigate potential risks to safety, fundamental rights, and the environment.

  - Technical Documentation: Maintain detailed technical documentation outlining the system’s design, functionality, and intended use.

  - Human Oversight: Implement robust human oversight mechanisms to ensure that the AI system operates within ethical and legal boundaries.

  - Data Governance: Ensure the quality, integrity, and ethical sourcing of the data used to train and operate the AI system.

  - Transparency and Explainability: Provide users with clear and concise information about the AI system’s functionality and decision-making process.

  - Conformity Assessment: Complete a conformity assessment, in some cases involving a notified body, to demonstrate compliance with the EU AI Act requirements.

- Limited-Risk AI Systems: Developers and deployers of limited-risk AI systems must comply with specific requirements, including:

  - Transparency and Information: Provide users with clear and concise information about the AI system’s functionality and purpose.

  - Documentation: Maintain adequate documentation outlining the system’s design, functionality, and intended use.
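
One way a team might track the high-risk requirements listed above is as a simple checklist. The sketch below is an illustration only: `HighRiskChecklist` and `missing_items` are hypothetical names, the six fields paraphrase the six bullets above, and a boolean per item is a deliberate simplification of what real compliance evidence would look like.

```python
from dataclasses import dataclass, fields


@dataclass
class HighRiskChecklist:
    """One flag per high-risk requirement named in the list above."""
    risk_assessment: bool = False
    technical_documentation: bool = False
    human_oversight: bool = False
    data_governance: bool = False
    transparency: bool = False
    conformity_assessment: bool = False


def missing_items(checklist: HighRiskChecklist) -> list[str]:
    """Return the names of requirements not yet marked as satisfied, in declaration order."""
    return [f.name for f in fields(checklist) if not getattr(checklist, f.name)]


def ready_for_deployment(checklist: HighRiskChecklist) -> bool:
    """A system is treated as ready only when no requirement is outstanding."""
    return not missing_items(checklist)


if __name__ == "__main__":
    status = HighRiskChecklist(risk_assessment=True, human_oversight=True)
    print(missing_items(status))
    print(ready_for_deployment(status))
```

Keeping the requirements as named fields rather than free text makes gaps easy to report, although each flag would need to be backed by actual records in a real setting.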

Transparency, Accountability, and Human Oversight

The EU AI Act places significant emphasis on transparency, accountability, and human oversight in the development and deployment of AI systems. These provisions aim to ensure that AI systems are used ethically and responsibly, and that human control is maintained over their operation.

- Transparency: The EU AI Act requires developers and deployers of AI systems to provide users with clear and concise information about the system’s functionality, purpose, and limitations. This includes explaining the decision-making process, the data used to train the system, and the potential risks associated with its use.

- Accountability: The EU AI Act establishes clear accountability mechanisms for developers and deployers of AI systems. This includes ensuring that they are responsible for the consequences of their systems’ actions and that they can be held accountable for any harm caused by their use.

- Human Oversight: The EU AI Act emphasizes the importance of human oversight in the development and deployment of AI systems. This means that humans must retain control over the system’s operation and be able to intervene in cases of malfunction or ethical concerns.
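
Human oversight can take many forms. One common pattern, sketched below under stated assumptions, is a human-in-the-loop gate where a person confirms or overrides the system’s proposal. The `Decision` type, the `decide_with_oversight` function, and the confidence threshold are all hypothetical and are not prescribed by the Act; they only illustrate how a reviewer can retain the final say.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    outcome: str
    decided_by: str  # "model" or "human"


def decide_with_oversight(
    model_outcome: str,
    model_confidence: float,
    human_review: Callable[[str], str],
    review_threshold: float = 0.9,  # assumed cut-off, chosen for illustration
) -> Decision:
    """Accept the model's proposal only when confidence is high; otherwise defer to a human."""
    if model_confidence >= review_threshold:
        return Decision(model_outcome, "model")
    # The reviewer sees the proposal and may confirm or override it.
    return Decision(human_review(model_outcome), "human")


if __name__ == "__main__":
    def always_reject(proposal: str) -> str:
        return "reject"

    print(decide_with_oversight("approve", 0.95, always_reject))
    print(decide_with_oversight("approve", 0.60, always_reject))
```

A stricter design would route every decision through the reviewer, or let the reviewer halt the system entirely; the threshold here is just one possible trade-off between workload and control.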

Impact on Businesses and Industries

The EU AI Act, with its wide-ranging regulations, will undoubtedly have a significant impact on businesses across various sectors. While the Act aims to foster responsible AI development and deployment, it also presents challenges for companies to navigate and adapt their AI strategies.

This section explores the potential implications of the EU AI Act for different industries, the challenges and opportunities it presents for businesses, and how companies can prepare to meet its requirements.

Impact on Healthcare

The healthcare sector is expected to be profoundly impacted by the EU AI Act. AI is increasingly used in healthcare for tasks like diagnosis, treatment planning, and drug discovery. The Act’s provisions regarding high-risk AI systems will be particularly relevant in healthcare, as these systems can have significant implications for patient safety and well-being.

For instance, AI-powered diagnostic tools used to identify diseases must meet stringent requirements regarding accuracy, reliability, and transparency. Healthcare providers will need to ensure that their AI systems comply with the Act’s provisions, which could involve implementing robust testing and validation procedures, documenting AI decision-making processes, and ensuring that patients are informed about the use of AI in their care.

Ethical Considerations and Societal Impact

The EU AI Act, aiming to regulate the development and deployment of AI systems, raises crucial ethical considerations and potential societal impacts. This legislation aims to ensure that AI is developed and used responsibly, fostering trust and minimizing risks. It addresses concerns regarding bias, transparency, accountability, and the potential displacement of human workers.

Impact on Employment

The EU AI Act’s impact on employment is a complex issue. While AI has the potential to automate tasks and create new jobs, it also raises concerns about job displacement. The Act encourages the development of AI systems that complement human work rather than replacing it.

The Act also requires providers and deployers to take measures to ensure a sufficient level of AI literacy among the staff who operate or use their AI systems. This ties in with broader upskilling and reskilling efforts, such as training programs and educational initiatives, that aim to prepare workers for the changing job landscape.

Implementation and Enforcement

The EU AI Act’s implementation will be a complex process involving multiple stakeholders and requiring a collaborative approach. This section explores the process of implementing the Act, including the roles of various actors, the mechanisms for enforcement, and the potential challenges and opportunities in ensuring effective enforcement.

The Implementation Process

The EU AI Act will be implemented through a combination of legislative and regulatory measures. The European Commission will play a key role in this process, working with member states, businesses, and other stakeholders to develop and implement the necessary regulations.

- The European Commission will be responsible for drafting and proposing implementing acts to clarify the provisions of the AI Act and adapt it to specific contexts.

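- Member states will designate national competent authorities and lay down rules on penalties. Because the AI Act is a regulation, it applies directly across the EU and does not need to be transposed into national law, which helps ensure consistency.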

- The European Data Protection Board (EDPB) will play a crucial role in ensuring the compliance of AI systems with data protection rules, particularly those related to high-risk AI systems.

- National authorities will be responsible for enforcing the AI Act within their jurisdictions, including monitoring compliance and imposing penalties on non-compliant entities.

- Businesses will need to adapt their operations to comply with the AI Act, including assessing the risks associated with their AI systems, implementing appropriate safeguards, and ensuring transparency in their AI systems.

- Civil society organizations will play a vital role in monitoring the implementation of the AI Act and advocating for its effective enforcement, ensuring that the Act’s goals of promoting ethical and responsible AI development are achieved.

Enforcement Mechanisms

The EU AI Act outlines various mechanisms for enforcement, including:

- Market surveillance: National authorities will be responsible for monitoring the market for AI systems, ensuring compliance with the AI Act’s requirements. This may involve inspections, investigations, and testing of AI systems.

- Administrative sanctions: National authorities can impose administrative sanctions on entities that violate the AI Act, such as fines, orders to stop using non-compliant AI systems, or orders to rectify non-compliance.

- Judicial remedies: Individuals and organizations can bring legal action against entities that violate the AI Act, seeking compensation for damages or injunctive relief.

- Cooperation and information sharing: National authorities will be required to cooperate and share information with each other and with the European Commission to ensure effective enforcement of the AI Act across the EU.

Penalties for Non-Compliance

The AI Act outlines a tiered system of penalties for non-compliance, with the severity of the penalty depending on the nature and severity of the violation. Under the adopted text, the highest tier applies to prohibited AI practices and carries a fine of up to 35 million euros or 7% of the company’s global annual turnover, whichever is higher; the Commission’s original proposal had set this ceiling at 30 million euros or 6%.
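
The “whichever is higher” rule in the penalty ceiling is a simple maximum of a fixed amount and a share of turnover. A minimal sketch follows; `fine_ceiling_eur` and its parameter names are hypothetical, and the cap and percentage are passed in as arguments because they differ between penalty tiers and between drafts of the Act. The values used in the example (35 million euros and 7%) are assumed here to be the top tier of the adopted Regulation and should be checked against the official text.

```python
from decimal import Decimal


def fine_ceiling_eur(
    global_turnover_eur: Decimal,
    fixed_cap_eur: Decimal,
    turnover_share: Decimal,
) -> Decimal:
    """Upper bound of a fine under a 'fixed amount or share of turnover, whichever is higher' rule."""
    if global_turnover_eur < 0:
        raise ValueError("turnover must not be negative")
    return max(fixed_cap_eur, turnover_share * global_turnover_eur)


if __name__ == "__main__":
    cap = Decimal("35000000")  # assumed fixed amount for the top tier
    share = Decimal("0.07")    # assumed share of global annual turnover

    # Smaller company: 7% of 100 million is 7 million, so the fixed amount is higher.
    print(fine_ceiling_eur(Decimal("100000000"), cap, share))

    # Larger company: 7% of 2 billion is 140 million, which exceeds the fixed amount.
    print(fine_ceiling_eur(Decimal("2000000000"), cap, share))
```

The function returns a ceiling, not the fine itself: the actual amount would be set by the competent authority within that limit. `Decimal` is used instead of floating point so that monetary values are not affected by rounding error.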

Challenges and Opportunities in Ensuring Effective Enforcement

Ensuring effective enforcement of the EU AI Act presents a number of challenges, including:

- Technical complexity: AI systems are often complex and rapidly evolving, making it challenging for authorities to monitor compliance and assess risks effectively.

- Cross-border nature of AI: AI systems are often developed and used across multiple jurisdictions, making it challenging to coordinate enforcement efforts and ensure consistency across the EU.

- Resource constraints: National authorities may face resource constraints in enforcing the AI Act effectively, particularly in terms of expertise and staffing.

Despite these challenges, the EU AI Act offers several opportunities for effective enforcement, including:

- Increased awareness: The AI Act will raise awareness of AI risks and compliance requirements among businesses and individuals, leading to greater compliance.

- Improved cooperation: The Act’s emphasis on cooperation between national authorities and the European Commission will facilitate effective enforcement and information sharing.

- New technologies: Emerging technologies, such as AI-powered monitoring tools, can be used to support enforcement efforts and improve efficiency.

Future Directions and Developments

The EU AI Act is a groundbreaking piece of legislation, but it is only the beginning of the journey toward responsible and ethical AI development and deployment. The rapid evolution of AI technologies, coupled with the emergence of new challenges, necessitates a dynamic and adaptable regulatory landscape.

This section explores potential future developments in AI regulation, both within the EU and globally, and examines how evolving AI technologies and emerging fields like quantum computing and synthetic biology will impact the regulatory framework.

Evolving AI Technologies and Their Implications for Regulatory Frameworks

The AI landscape is constantly evolving, with new technologies and applications emerging at a rapid pace. These advancements pose both opportunities and challenges for regulatory frameworks. The EU AI Act, while comprehensive, may need to be adapted to address the unique characteristics of emerging AI technologies.

- Generative AI: The rapid rise of generative AI models, such as large language models (LLMs) and image generators, has raised concerns about potential misuse, including the creation of deepfakes and the spread of misinformation. Regulators may need to develop specific guidelines for the development and deployment of these models, focusing on transparency, accountability, and mitigation of risks.

- AI-powered autonomous systems: The increasing autonomy of AI systems, particularly in domains like transportation, healthcare, and finance, presents complex challenges for regulatory frameworks. Regulators may need to consider issues like liability, safety standards, and the potential for bias in decision-making processes.

- AI in the workplace: The use of AI in recruitment, performance evaluation, and other aspects of the workplace raises concerns about fairness, discrimination, and the potential displacement of human workers. Regulators may need to establish guidelines to ensure that AI systems used in the workplace are transparent, fair, and do not violate human rights.